Abstract

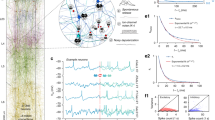

Sensory cortices display a suite of ubiquitous dynamical features, such as ongoing noise variability, transient overshoots and oscillations, that have so far escaped a common, principled theoretical account. We developed a unifying model for these phenomena by training a recurrent excitatory–inhibitory neural circuit model of a visual cortical hypercolumn to perform sampling-based probabilistic inference. The optimized network displayed several key biological properties, including divisive normalization and stimulus-modulated noise variability, inhibition-dominated transients at stimulus onset and strong gamma oscillations. These dynamical features had distinct functional roles in speeding up inferences and made predictions that we confirmed in novel analyses of recordings from awake monkeys. Our results suggest that the basic motifs of cortical dynamics emerge as a consequence of the efficient implementation of the same computational function—fast sampling-based inference—and predict further properties of these motifs that can be tested in future experiments.

Data availability

All experimental data reported here have been collected by others and previously published. The experimental data in Fig. 5a,b reproduced analyses from ref. 6 of data from ref. 23 (data released in the repository of ref. 57). The experimental data in Fig. 5c,d were captured directly from the plots in the original papers (refs. 2–4). The experimental data in Fig. 7 are a novel analysis of the data from ref. 23, with permission from the authors. The code and parameters used to generate the data corresponding to numerical experiments in the paper have been made publicly available.

Code availability

The (Python) code and parameters for the numerical experiments are available at https://bitbucket.org/RSE_1987/ssn_inference_numerical_experiments. The (OCaml) code for the optimization procedure can be found at https://bitbucket.org/RSE_1987/ssn_inference_optimizer.

References

Churchland, M. et al. Stimulus onset quenches neural variability: a widespread cortical phenomenon. Nat. Neurosci. 13, 369–378 (2010).

Haider, B., Häusser, M. & Carandini, M. Inhibition dominates sensory responses in the awake cortex. Nature 493, 97–100 (2013).

Ray, S. & Maunsell, J. H. Differences in gamma frequencies across visual cortex restrict their possible use in computation. Neuron 67, 885–896 (2010).

Roberts, M. et al. Robust gamma coherence between macaque V1 and V2 by dynamic frequency matching. Neuron 78, 523–536 (2013).

Orbán, G., Berkes, P., Fiser, J. & Lengyel, M. Neural variability and sampling-based probabilistic representations in the visual cortex. Neuron 92, 530–543 (2016).

Hennequin, G., Ahmadian, Y., Rubin, D., Lengyel, M. & Miller, K. The dynamical regime of sensory cortex: stable dynamics around a single stimulus-tuned attractor account for patterns of noise variability. Neuron 98, 846–860 (2018).

Buzsáki, G. & Wang, X. Mechanisms of gamma oscillations. Annu. Rev. Neurosci. 35, 203–225 (2012).

Gray, C., König, P., Engel, A. & Singer, W. Oscillatory responses in cat visual cortex exhibit inter-columnar synchronization which reflects global stimulus properties. Nature 338, 334–337 (1989).

Akam, T. & Kullmann, D. Oscillations and filtering networks support flexible routing of information. Neuron 67, 308–320 (2010).

Masquelier, T., Hugues, E., Deco, G. & Thorpe, S. Oscillations, phase-of-firing coding, and spike timing-dependent plasticity: an efficient learning scheme. J. Neurosci. 29, 13484–13493 (2009).

Bastos, A. et al. Canonical microcircuits for predictive coding. Neuron 76, 695–711 (2012).

Ma, W., Beck, J., Latham, P. & Pouget, A. Bayesian inference with probabilistic population codes. Nat. Neurosci. 9, 1432–1438 (2006).

Berkes, P., Orbán, G., Lengyel, M. & Fiser, J. Spontaneous cortical activity reveals hallmarks of an optimal internal model of the environment. Science 331, 83–87 (2011).

Shadlen, M. & Movshon, J. Synchrony unbound: a critical evaluation of the temporal binding hypothesis. Neuron 24, 67–77 (1999).

Thiele, A. & Stoner, G. Neuronal synchrony does not correlate with motion coherence in cortical area MT. Nature 421, 366–370 (2003).

Tiesinga, P. & Sejnowski, T. Cortical enlightenment: are attentional gamma oscillations driven by ING or PING? Neuron 63, 727–732 (2009).

Knill, D. & Richards, W. Perception as Bayesian Inference (Cambridge Univ. Press, 1996).

Fiser, J., Berkes, P., Orbán, G. & Lengyel, M. Statistically optimal perception and learning: from behavior to neural representations. Trends Cogn. Sci. 14, 119–130 (2010).

Deneve, S., Latham, P. & Pouget, A. Efficient computation and cue integration with noisy population codes. Nat. Neurosci. 4, 826–831 (2001).

Haefner, R., Berkes, P. & Fiser, J. Perceptual decision-making as probabilistic inference by neural sampling. Neuron 90, 649–660 (2016).

Bányai, M. et al. Stimulus complexity shapes response correlations in primary visual cortex. Proc. Natl Acad. Sci. USA 116, 2723–2732 (2019).

Sohl-Dickstein, J., Mudigonda, M. & DeWeese, M. R. Hamiltonian Monte Carlo without detailed balance. in International Conference on Machine Learning (eds Xing, E. P. & Jebara, T.) 719–726 (2014).

Ecker, A. et al. Decorrelated neuronal firing in cortical microcircuits. Science 327, 584–587 (2010).

Wainwright, M. & Simoncelli, E. Scale mixtures of Gaussians and the statistics of natural images. Adv. Neural Inf. Proc. Syst. 12, 855–861 (2000).

Coen-Cagli, R., Kohn, A. & Schwartz, O. Flexible gating of contextual influences in natural vision. Nat. Neurosci. 18, 1648–1655 (2015).

Schwartz, O., Sejnowski, T. & Dayan, P. Perceptual organization in the tilt illusion. J. Vis. 9, 19 (2009).

Ahmadian, Y., Rubin, D. & Miller, K. Analysis of the stabilized supralinear network. Neural Comput. 25, 1994–2037 (2013).

Priebe, N. & Ferster, D. Inhibition, spike threshold, and stimulus selectivity in primary visual cortex. Neuron 57, 482–497 (2008).

van Vreeswijk, C. & Sompolinsky, H. Chaotic balanced state in a model of cortical circuits. Neural Comput. 10, 1321–1371 (1998).

Hennequin, G., Vogels, T. & Gerstner, W. Optimal control of transient dynamics in balanced networks supports generation of complex movements. Neuron 82, 1394–1406 (2014).

MacKay, D. Information Theory, Inference and Learning Algorithms (Cambridge Univ. Press, 2003).

Murray, J. et al. A hierarchy of intrinsic timescales across primate cortex. Nat. Neurosci. 17, 1661–1663 (2014).

Grabska-Barwinska, A., Beck, J., Pouget, A. & Latham, P. Demixing odors - fast inference in olfaction. Adv. Neural Inf. Proc. Syst. 26, 1968–1976 (2013).

Buesing, L., Bill, J., Nessler, B. & Maass, W. Neural dynamics as sampling: a model for stochastic computation in recurrent networks of spiking neurons. PLoS Comput. Biol. 7, e1002211 (2011).

Savin, C. & Deneve, S. Spatio-temporal representations of uncertainty in spiking neural networks. Adv. Neural Inf. Proc. Syst. 27, 2024–2032 (2014).

Hennequin, G., Aitchison, L. & Lengyel, M. Fast sampling-based inference in balanced neuronal networks. Adv. Neural Inf. Proc. Syst. 27, 2240–2248 (2014).

Okun, M. & Lampl, I. Instantaneous correlation of excitation and inhibition during ongoing and sensory-evoked activities. Nat. Neurosci. 11, 535–537 (2008).

Carandini, M. & Heeger, D. Normalization as a canonical neural computation. Nat. Rev. Neurosci. 13, 51–62 (2012).

Echeveste, R., Hennequin, G. & Lengyel, M. Asymptotic scaling properties of the posterior mean and variance in the Gaussian scale mixture model. Preprint at https://arxiv.org/abs/1706.00925 (2017).

Yamins, D. et al. Performance-optimized hierarchical models predict neural responses in higher visual cortex. Proc. Natl Acad. Sci. USA 111, 8619–8624 (2014).

Festa, D., Hennequin, G. & Lengyel, M. Analog memories in a balanced rate-based network of EI neurons. Adv. Neural Inf. Proc. Syst. 27, 2231–2239 (2014).

Song, H., Yang, G. & Wang, X. Training excitatory–inhibitory recurrent neural networks for cognitive tasks: a simple and flexible framework. PLoS Comput. Biol. 12, e1004792 (2016).

Orhan, A. & Ma, W. Efficient probabilistic inference in generic neural networks trained with non-probabilistic feedback. Nat. Commun. 8, 138 (2017).

Remington, E., Narain, D., Hosseini, E. & Jazayeri, M. Flexible sensorimotor computations through rapid reconfiguration of cortical dynamics. Neuron 98, 1005–1019 (2018).

Mante, V., Sussillo, D., Shenoy, K. & Newsome, W. Context-dependent computation by recurrent dynamics in prefrontal cortex. Nature 503, 78–84 (2013).

Aitchison, L. & Lengyel, M. The Hamiltonian brain: efficient probabilistic inference with excitatory–inhibitory neural circuit dynamics. PLoS Comput. Biol. 12, e1005186 (2016).

Rao, R. & Ballard, D. Predictive coding in the visual cortex: a functional interpretation of some extra-classical receptive-field effects. Nat. Neurosci. 2, 79–87 (1999).

Keller, G. & Mrsic-Flogel, T. Predictive processing: a canonical cortical computation. Neuron 100, 424–435 (2018).

Vinck, M. & Bosman, C. More gamma more predictions: gamma-synchronization as a key mechanism for efficient integration of classical receptive field inputs with surround predictions. Front. Syst. Neurosci. 10, 35 (2016).

Roelfsema, P., Lamme, V. & Spekreijse, H. Synchrony and covariation of firing rates in the primary visual cortex during contour grouping. Nat. Neurosci. 7, 982–991 (2004).

Dayan, P. & Abbott, L. Theoretical Neuroscience: Computational and Mathematical Modeling of Neural Systems (MIT Press, 2001).

Hennequin, G. & Lengyel, M. Characterizing variability in nonlinear recurrent neuronal networks. Preprint at https://arxiv.org/abs/1610.03110 (2016).

Werbos, P. Backpropagation through time: what it does and how to do it. Proc. IEEE 78, 1550–1560 (1990).

Kingma, D. P. & Ba, J. Adam: a method for stochastic optimization. Preprint at https://arxiv.org/abs/1412.6980 (2014).

Zhu, C., Byrd, R., Lu, P. & Nocedal, J. Algorithm 778: L-BFGS-B: Fortran subroutines for large-scale bound-constrained optimization. ACM Trans. Math. Software 23, 550–560 (1997).

Williams, C. & Rasmussen, C. Gaussian Processes for Machine Learning Vol. 2 (The MIT Press, 2006).

Ecker, A. et al. State dependence of noise correlations in macaque primary visual cortex. Neuron 82, 235–248 (2014).

Acknowledgements

This work was supported by the Wellcome Trust (New Investigator Award 095621/Z/11/Z and Investigator Award in Science 212262/Z/18/Z to M.L., and Seed Award 202111/Z/16/Z to G.H.), and the Human Frontiers Science Programme (research grant RGP0044/2018 to M.L.). We are grateful to A. Ecker, P. Berens, M. Bethge and A. Tolias for making their data publicly available; to G. Orbán, A. Bernacchia, R. Haefner and Y. Ahmadian for useful discussions; and to J. P. Stroud for detailed comments on the manuscript.

Author information

Contributions

R.E., G.H. and M.L. designed the study. R.E. and G.H. developed the optimization approach. R.E. ran all numerical simulations. R.E. and G.H. analyzed the experimental data, and all authors performed analytical derivations. R.E., G.H. and M.L. interpreted results and wrote the paper, with comments from L.A.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature Neuroscience thanks Jeff Beck, Jeffrey Erlich, and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 GSM and network parameters.

a, Filters: projective fields of the GSM and receptive fields of the network. Each filter image shows the projective field of a latent variable (columns of \(\mathbf{A}\); Eq. (1)), which was the same as the receptive field of the corresponding E-I cell pair in the network (rows of \(\mathbf{W}^{\text{ff}} = [\mathbf{A}\ \mathbf{A}]^{\mathsf{T}}/15\); Eq. (14); cf. Fig. 1a,c). b, Prior covariance in the GSM (\(\mathbf{C}\) in Eq. (2)). c, Sample stimuli generated by the GSM, also used for testing the network’s generalization in Fig. 3b-c. d-e, Parameters of the optimized network. d, Recurrent weights (top: raw weights; middle: normalized absolute values) and process noise covariance (bottom) after training. Weights and covariances are shown for only one row (of each quadrant) of \(\mathbf{W}\) (Eq. (8)) and \(\boldsymbol{\Sigma}^{\eta}\) (Eq. (11)), respectively, as they are circulant. Thus, each line shows the weights connecting, or the covariance between, cells of different types (see legend) as a function of the difference in their preferred stimuli. As the figure shows, the connectivity profile of either E or I cells in the optimized network was largely independent of whether the postsynaptic cell was excitatory or inhibitory (top). Overall, recurrent E and I connections had similar tuning widths, with E connections being slightly more broadly tuned than I ones (middle). Nevertheless, the net E input to any one cell in the network was still more narrowly tuned than the net I input, due to the responses of presynaptic E cells being more narrowly tuned than those of I cells (not shown). The optimized network also retained a substantial amount of process noise that was larger in E than in I cells, and highly correlated both between the E and I cell of a pair and between cells with different tuning (up to a ~30° tuning difference; bottom). e, Input nonlinearity (Eq. (14)), converting feedforward receptive field activations \((\mathbf{W}^{\text{ff}}\mathbf{x})_i\) into network inputs \(h_i\) (black). For comparison, the distribution of inputs across all cells for the training set is presented in gray. As the figure shows, the optimized input transformation, capturing the nonlinear effects of upstream preprocessing of visual stimuli, had a threshold that was just below the distribution of receptive field outputs, ensuring that all stimulus-related information was transmitted in the input signal, and an exponent close to two, remarkably similar to that used by the cells of the network (cf. Eq. (9) and Supplementary Table 1).

Extended Data Fig. 2 Divisive normalization and the mechanism underlying oscillations in the optimized network.

a-b, Divisive normalization, or sublinear summation of neural responses, has been proposed as a canonical computation in cortical circuits (ref. 38). In turn, the stabilized supralinear network (SSN), which formed the substrate of our optimized network, has been proposed to provide the dynamical mechanism underlying divisive normalization (ref. 27). We thus wondered whether our optimized network also exhibited it. a, In accordance with divisive normalization, the network’s response to the sum of two stimuli (solid purple) was smaller than the sum of its responses to the individual stimuli (solid red/blue), and lay between the average (dotted purple) and the sum (dashed purple). Inset shows stimuli used in this example. b, Generic divisive normalization in the optimized network. We fitted a standard phenomenological model of divisive normalization (adapted from ref. 38) to the (across-trial) mean firing-rate responses of E cells in the optimized network, \(\langle r_{\rm E}\rangle\), as a function of the feedforward input \(\mathbf{h}\) to the network (that is, without regard to its recurrent dynamics; Eq. (14)): \(\langle r\rangle_i^{(\beta)} = b_2 + \left(h_i^{(\beta)} + b_1\right)\left[(\mathbf{M}\,\mathbf{h}^{(\beta)})_i + s^2\right]^{-1}\), where \(h_i^{(\beta)}\) and \(\langle r\rangle_i^{(\beta)}\) were the feedforward input and the average firing rate of cell i in response to stimulus β, respectively, and \(b_1\), \(b_2\) and \(s\) were constant parameters. The parameter matrix \(\mathbf{M}\) was responsible for normalization, by dividing the input \(h_i\) by a mixture of competing inputs to other neurons. \(\mathbf{M}\) was parameterized as a symmetric circulant matrix to respect the rotational symmetry of the trained network (Extended Data Fig. 1). In total, our model of divisive normalization had \(3 + (N_{\rm E}/2) + 1 = 29\) free parameters. 
Model fitting was performed via minimization of the average squared difference between network and model rates, plus an elastic energy regularizer for neighbouring elements of \(\mathbf{M}\). Shown here is a scatter plot of neural responses to a set of 500 random stimuli (generated as the generalization dataset, Methods), predicted by the phenomenological model vs. produced by the actual network. Each dot corresponds to a stimulus-neuron pair. Inset shows three representative average response profiles across the network (dots) and the phenomenological model’s fit (lines). Note the near perfect overlap between the network and the phenomenological model in all three cases. The divisive normalization model also outperformed both a linear model and a model of subtractive inhibition (not shown). These results show comprehensively that, in line with empirical data, our trained network performed divisive normalization of its inputs under general conditions. c-d, Using voltage-clamp to study the mechanisms underlying oscillations in the optimized network. To determine whether oscillations in our network resulted from the interaction of E and I cells, or whether they arose within either of these populations alone, we conducted two simulated experiments. In each simulation, either of the two populations (E or I) was voltage-clamped to its (temporal) mean, as calculated from the original network, for each input in the training set. Thus, recurrent input from the clamped population was effectively held constant at its normal mean, and did not react to changes in the other population. As expected for a network in the inhibition-stabilized regime, clamping of the I cells resulted in unstable runaway dynamics, precluding further analysis of oscillations (not shown). c, Illustration of the E-clamping experiment in the optimized network (cf. Fig. 1b). 
For each stimulus, each E cell’s voltage was clamped to its mean voltage, \(\mu_{\rm E}\), obtained when the network was presented with the same stimulus without voltage clamp. d, LFP power spectra in the network after voltage-clamping of the E population at different contrast levels (colors as in Fig. 2). The network remained stable, but the peak in its LFP power spectrum characteristic of gamma oscillations was no longer present (cf. Fig. 5c, inset). This shows that gamma oscillations in the original network required interactions between E and I cells (that is, they were generated by the so-called ‘PING’ mechanism; ref. 16).
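The phenomenological divisive normalization model described in panel b of Extended Data Fig. 2 can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the authors' fitting code: the function names, the example values of \(b_1\), \(b_2\), \(s\) and the entries of \(\mathbf{M}\) are hypothetical, and the elastic energy regularizer and the fitting procedure are omitted.

```python
import numpy as np

def symmetric_circulant(half_row, n):
    """Build an n x n symmetric circulant matrix.

    half_row[d] is the entry shared by all index pairs at circular
    distance d, so n // 2 + 1 values determine the whole matrix.
    """
    half_row = np.asarray(half_row, dtype=float)
    idx = np.arange(n)
    diff = np.abs(idx[:, None] - idx[None, :])
    dist = np.minimum(diff, n - diff)  # circular distance in 0..n//2
    return half_row[dist]

def divisive_normalization(h, M, b1, b2, s):
    """Mean rates predicted as b2 + (h_i + b1) / ((M h)_i + s^2)."""
    return b2 + (h + b1) / (M @ h + s**2)

# Hypothetical example values (not taken from the paper).
N_E = 50
rng = np.random.default_rng(0)
half_row = np.exp(-np.arange(N_E // 2 + 1) / 5.0)
M = symmetric_circulant(half_row, N_E)
h = rng.uniform(0.0, 10.0, size=N_E)
rates = divisive_normalization(h, M, b1=0.5, b2=1.0, s=2.0)

# Free parameters: b1, b2, s plus the N_E/2 + 1 independent entries of M.
n_free = 3 + half_row.size
```

With \(N_{\rm E} = 50\), the count `n_free` reproduces the 29 free parameters stated in the caption.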

Extended Data Fig. 3 Random networks.

The parameterization of our network was highly constrained (for example, ring topology with circulant and symmetric weight and process noise covariance structure, 1:1 E:I ratio, fixed receptive fields). To test whether these constraints alone, without any optimization, were sufficient to generate the results we obtained in the optimized network, we sampled weight matrices at random, by drawing each of the 8 hyperparameters of the weight matrix (Eq. (10)) from an exponential distribution truncated between 0.1 and 10 times the values originally found by optimization. We discarded matrices that were either unstable or converged to a trivial solution (all mean rates equal to 0). Fewer than 20% of the generated matrices satisfied these criteria, further confirming that optimization was non-trivial. Results for six such example random networks are shown (columns). a, Recurrent weights as a function of the difference in the preferred stimuli of two cells (cf. Extended Data Fig. 1d, top). Different lines are for weights connecting cells of different types (legend). b-c, Mean (b) and standard deviation of membrane potential responses (c), averaged over the population, as a function of contrast (cf. Fig. 3a). Gray dots on x-axis indicate training contrast levels. Note the wide range of behaviors displayed by these networks. For example, the standard deviation of responses could go up, down, or even be non-monotonic with contrast, while the range of mean rates also varied wildly. d-e, Mean firing rate (d) and Fano factor (e) of neurons as a function of stimulus orientation (relative to their preferred orientation) during spontaneous (dark red) and evoked activity (light orange; cf. Fig. 5a-b). The peak mean rate of example 3 exceeded 50 Hz and is thus shown as clipped in this figure. 
Note that mean rate tuning curves (during evoked activity) were very narrow for most networks (all but example 5), resulting in 0 Hz rates and thus undefined Fano factors for stimuli further away from the preferred orientation. f, LFP power spectra at different contrast levels (colors as in Fig. 2). Note the absence of gamma peaks (cf. Fig. 5c, inset). g, Average rate response around stimulus onset at different contrast levels (colors as in Fig. 2). Black bars show stimulus period. Note the absence of transients (cf. Fig. 5d). To estimate network moments in b-d, n = 20,000 independent samples (taken 200 ms apart) were used. Population averages (n=50 cells) were computed for b and c. Mean firing rates in panel g were computed over n = 100 trials.
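The random-network control in Extended Data Fig. 3 draws each weight hyperparameter from a truncated exponential distribution. A minimal sketch of such a draw by inverse-CDF sampling is given below. The caption does not state the scale of the exponential, so the sketch assumes that its (untruncated) mean equals the optimized value; the function name and the eight placeholder values are hypothetical, and the subsequent rejection of unstable or trivial networks is not shown.

```python
import numpy as np

def sample_truncated_exponential(opt_values, rng, low=0.1, high=10.0):
    """Draw one sample per hyperparameter from an exponential distribution
    truncated to [low * opt, high * opt].

    Assumption (not stated in the caption): the untruncated exponential
    has mean equal to the optimized value. Requires opt_values > 0.
    """
    opt = np.asarray(opt_values, dtype=float)
    u = rng.uniform(size=opt.shape)
    # Survival function of the untruncated exponential at the two bounds;
    # with scale = opt these are simply exp(-low) and exp(-high).
    e_low, e_high = np.exp(-low), np.exp(-high)
    # Invert the truncated CDF: u = (e_low - exp(-x/opt)) / (e_low - e_high).
    return -opt * np.log(e_low - u * (e_low - e_high))

# Placeholder magnitudes for the 8 hyperparameters (hypothetical values).
opt_hyper = np.array([1.0, 0.8, 1.5, 1.2, 0.3, 0.4, 0.35, 0.45])
rng = np.random.default_rng(1)
draws = np.array([sample_truncated_exponential(opt_hyper, rng)
                  for _ in range(1000)])
```

Each row of `draws` would define one candidate random network, to be kept only if it passes the stability and non-triviality checks described in the caption.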

Extended Data Fig. 4 Comparison of random and optimized networks: cost achieved and dynamical features.

Networks are ranked in all panels in order of decreasing total cost achieved by them (shown in a). Random networks are those shown in Extended Data Fig. 3. The originally optimized network presented in the main text is indicated with ⋆, and the network optimized without enforcing Dale’s principle (Extended Data Fig. 8) is marked with †. Other optimized networks were studied to confirm that well-optimized networks reliably showed similar behavior. This was important because our cost function was highly non-convex, so any minimum our optimizer found, such as that corresponding to the originally optimized network, had no guarantee of being the global minimum. We therefore trained 10 further networks on the original cost function (Methods), starting from random initial conditions, and show here those whose final cost was at least approximately as low as that of the original network (9 out of 10). a, Total cost (Eq. (25)) computed for each of the random networks (left) and for networks that were optimized for the original cost (right). Colors indicate different components of the cost function (legend, see Eqs. (26)–(29) for mathematical definitions). The inset shows the optimized networks only (note different y-scale). Note that the cost achieved by the random networks was 1-3 orders of magnitude higher than that achieved by the optimized networks. Furthermore, none of the optimized networks achieved substantially lower costs than the one we presented in the main text. b-c, Oscillatoriness (b) and transient overshoot size (c) for each network in a. Oscillatoriness was computed by numerical fits of Eq. (33) to the autocorrelogram of the LFP generated by the network (Methods). Transients in population-average firing rates were quantified as the size of the overshoot normalized by the change in the steady state mean (see also Fig. 7a). 
Note that, compared to the optimized networks (including the one presented in the main text), oscillations and transients were almost entirely absent from random networks. Furthermore, all optimized networks had substantial oscillatoriness and transient overshoots (b-c). This suggests that the results we obtained for the originally optimized network were representative of the best achievable minima of the cost function.
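The overshoot measure used in panel c of Extended Data Fig. 4 (overshoot size normalized by the change in the steady-state mean) can be illustrated as follows. This is a sketch under stated assumptions rather than the authors' analysis code: the function name is hypothetical, the baseline is taken as the pre-onset mean, and the steady state as the mean over a final window whose length is a free choice here.

```python
import numpy as np

def normalized_overshoot(rate, onset_idx, steady_window):
    """Overshoot size divided by the change in steady-state mean.

    rate          : 1-D trial-averaged population rate over time
    onset_idx     : index of stimulus onset (assumed known)
    steady_window : number of final samples treated as steady state
                    (assumption: the response has settled by then)
    """
    rate = np.asarray(rate, dtype=float)
    baseline = rate[:onset_idx].mean()
    steady = rate[-steady_window:].mean()
    peak = rate[onset_idx:].max()
    return (peak - steady) / (steady - baseline)

# Synthetic trace: baseline 2 Hz, brief peak at 14 Hz, steady state 10 Hz.
trace = np.concatenate([np.full(50, 2.0), [14.0], np.full(100, 10.0)])
overshoot = normalized_overshoot(trace, onset_idx=50, steady_window=50)
```

For this synthetic trace the peak exceeds the steady state by 4 Hz while the steady state lies 8 Hz above baseline, so the measure equals 0.5.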

Extended Data Fig. 5 Control networks without full variability modulation.

Variability modulations are a hallmark of sampling-based inference (ref. 5). To see whether they were also critical for our results, we optimized networks with modified cost functions, either setting both \(\epsilon_{\text{var}} = 0\) and \(\epsilon_{\text{cov}} = 0\) (Eq. (25)), requiring only response means to be matched (a-g; see also Fig. 5, right column), or setting only \(\epsilon_{\text{cov}} = 0\), requiring the matching of response means and variances but not of covariances (h-n; see also Methods). a-b, h-i, Network parameters as in Extended Data Fig. 1d-e. Both networks developed weak connection weights (a and h, top), with near-identical widths for E and I inputs onto both E and I cells (a and h, middle), and an almost linear input transformation (b and i). c,j, Sample population activity as in Fig. 2a. d-e,k-l, Matching moments between the ideal observer and the network for training stimuli as in Fig. 2c-d. Extremely weak coupling in the first network (a, top) meant an essentially feedforward architecture. Thus, its response covariance simply reflected its process noise covariance (compare e and a, bottom). High input correlations in the second network (h, bottom) resulted in a single, global mode of output fluctuations (l). f-g,m-n, Generalization to test stimuli as in Fig. 3a-b. Insets in g show GSM posterior and network response means for example test stimuli as in Fig. 3c. Response moments in n are shown only for training stimuli, not for test stimuli, with variances (blue, bottom) and covariances (lavender, bottom) plotted separately. Both networks completely failed to fit moments that they were not explicitly required to match. Thus, firing rate tuning curves were preserved in both networks, but Fano factors were barely modulated in the first network (Extended Data Fig. 6a-b). Critically, neither of these networks showed discernible oscillations or transient overshoots (Extended Data Fig. 6c-e). Response moments in d-g and k-n were estimated from n = 20,000 independent samples (taken 200 ms apart). 
Population mean moments in f and m were further averaged across n = 50 E cells. Correlations in e and l are Pearson’s correlations.

Extended Data Fig. 6 Comparison of neural dynamics between the originally optimized network and the control networks.

a-e, Dynamics of optimized networks as in Fig. 5a-e. The originally optimized network of the main paper (left) is compared to various control networks, from left to right: without slowness penalty (Extended Data Fig. 10), without covariance modulation (matching means and variances; Extended Data Fig. 5h-n), without covariance and variance modulation (matching means only; Extended Data Fig. 5a-g), enforcing constant Fano factors (Extended Data Fig. 7), and with Dale’s principle not enforced (Extended Data Fig. 8). For ease of comparison, only stimulus-dependent power spectra are shown for the optimized network of the main paper, without showing the dependence of gamma peak frequency on contrast (cf. Fig. 5c, middle), as most control networks had no discernible gamma peaks. For more details on control networks, see the captions of the corresponding figures (Extended Data Figs. 5,7,8 and 10). Response moments in a were estimated from n = 20,000 independent samples (taken 200 ms apart). Mean firing rates in d were computed over n = 100 trials. Panel e shows mean ± s.e.m. (n = 20 trials).

Extended Data Fig. 7 Control network: enforcing constant Fano factors.

Fano factors need to be specifically stimulus-independent for a class of models, (linear) probabilistic population codes (PPCs; ref. 12), that provide a conceptually very different link between neural variability and the representation of uncertainty from that provided by sampling (refs. 5, 18), which we pursue here. Therefore, we used our optimization-based approach to directly compare the circuit dynamics required by PPCs to those of our originally optimized network implementing sampling. For this, we trained a further control network whose goal was to match the mean modulation of the control network (resulting in realistic tuning curves), while keeping Fano factors constant. We achieved this by devising a set of target covariances that would result (together with the target mean responses used by all other networks) in constant Fano factors, assuming Poisson spiking and an exponentially decaying autocorrelation function (using analytic results in ref. 52). Training then proceeded exactly as for the other networks, with the same ϵ parameters in the cost function as for the originally optimized network, only employing the new covariance targets. a-b, Network parameters as in Extended Data Fig. 1d-e. The network made use of strong inhibitory connections (a, top), large shared process noise (a, bottom), and strongly modulated inputs (b). c, Sample population activity as in Fig. 2a. d-e, Matching moments between the ideal observer and the network for training stimuli as in Fig. 2c-d. f-g, Generalization to test stimuli as in Fig. 3a-b. Insets in g show GSM posterior and network response means for example test stimuli as in Fig. 3c. The network was able to match mean responses in the training set and to generalize to novel stimuli (d, f-g), while keeping Fano factors relatively constant as required (Extended Data Fig. 6b). 
For consistency with previous results, we obtained Fano factors by numerically simulating the same type of inhomogeneous Gamma process as in the other networks (Methods), thus violating the Poisson spiking assumptions under which we computed the target covariances of the network (see above), hence the remaining small modulations of Fano factors. Critically, although the training procedure was identical to that used for the original network, only differing in the required variability modulation provided by the targets (see above), this control network displayed no gamma-band oscillations (Extended Data Fig. 6c). Inhibition-dominated transients did emerge, but were weaker than in the original network (Extended Data Fig. 6d,e). Response moments in d-g were estimated from n = 20,000 independent samples (taken 200 ms apart). Population mean moments in f were further averaged across n = 50 E cells. Correlations in e are Pearson’s correlations.

Extended Data Fig. 8 Control network: Dale’s principle not enforced.

In order to see how much the biological constraints we used for the optimized network, and in particular enforcing Dale’s principle, were necessary to achieve the performance and dynamical behavior of the original network, we optimized a network with the same cost function as for the original network (Eq. (25)) but without enforcing Dale’s principle. This meant that the signs of synaptic weights in each quadrant of the weight matrix (the aXY coefficients in Eq. (10)) were not constrained. Otherwise, optimization proceeded in the same way as before (Methods). The training of this network proved to be much more difficult and prone to result in unstable networks, which we avoided by early stopping. a-b, Network parameters as in Extended Data Fig. 1d-e. As Dale’s principle was not enforced, only notional cell types can be shown (legend). Nevertheless, interestingly, the network still obeyed Dale’s principle after optimization (top): all outgoing synapses of any one cell had the same sign. Note that the outgoing weights of the cells whose moments were constrained (Ẽ cells, whose activity is shown and analysed in c-g) were actually negative. Therefore, in effect, these cells became inhibitory during training. c, Sample population activity as in Fig. 2a. d-e, Matching moments between the ideal observer and the network for training stimuli as in Fig. 2c-d. f, Generalization to test stimuli as in Fig. 3a. g, Matching moments between the ideal observer and the network for training stimuli as in Fig. 3b (lavender). Note that here, unlike in Fig. 3, response moments are shown only for training stimuli, not for test stimuli, but by distinguishing variances (blue, bottom) and covariances (lavender, bottom). Overall, the stationary behavior of this network was broadly similar to that of the originally optimized network (cf. Figs. 2 and 3). Therefore, it achieved a performance that was far better than the random networks’ (Extended Data Fig. 4a). 
However, it still performed substantially worse than networks optimized with Dale’s principle enforced (Extended Data Fig. 4a), and its dynamics were also qualitatively different: oscillations and transient overshoots were largely absent (Extended Data Fig. 4b-c, Extended Data Fig. 6). Response moments in d-g were estimated from n = 20,000 independent samples (taken 200 ms apart). Population mean moments in f were further averaged across n = 50 Ẽ cells. Correlations in e are Pearson’s correlations.
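The check described in panels a-b (all outgoing synapses of any one cell sharing the same sign) can be illustrated with a minimal sketch. The function names, the convention that `W[i, j]` is the weight from presynaptic cell `j` onto postsynaptic cell `i`, and the example matrix are assumptions made for illustration; they are not taken from the authors' code.

```python
import numpy as np

def obeys_dale(W, tol=0.0):
    """Return one boolean per presynaptic cell (column of W).

    Assumed convention: W[i, j] is the weight from cell j onto cell i,
    so the outgoing synapses of cell j form column j.
    """
    W = np.asarray(W, dtype=float)
    all_nonneg = np.all(W >= -tol, axis=0)  # purely excitatory column
    all_nonpos = np.all(W <= tol, axis=0)   # purely inhibitory column
    return all_nonneg | all_nonpos

def effective_sign(W):
    """Sign of the summed outgoing weight of each cell (+1, 0 or -1)."""
    return np.sign(np.asarray(W, dtype=float).sum(axis=0)).astype(int)

# Hypothetical 3-cell example: cell 0 excitatory, cell 1 inhibitory,
# cell 2 has mixed outgoing signs and so violates Dale's principle.
W_example = np.array([
    [0.5, -0.2,  0.0],
    [0.3, -0.1,  0.4],
    [0.0, -0.7, -0.2],
])
dale_mask = obeys_dale(W_example)
signs = effective_sign(W_example)
```

In a network trained without the sign constraint, `obeys_dale` returning `True` for every column would correspond to the observation reported in the caption, and a negative `effective_sign` for a nominally excitatory cell would correspond to that cell having become inhibitory during training.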

Extended Data Fig. 9 Further analyses of the role of transients in supporting continual inference.

a-b, Analysis of transients in the response of a single neuron. (Left panel in a is reproduced from Fig. 7a.) a, Temporal evolution of the mean (left) and standard deviation (right) of the membrane potential (uE) in three different neural responses (thick lines) with identical autocorrelations (matched to neural autocorrelations in the full network, inset, cf. Fig. 4a) but different time-dependent means (left) and standard deviations (right). Thin green lines show the time-varying target mean (left) and standard deviation (right). b, Total divergence (Eq. (37), Methods) between the target distribution at a given point in time and the distribution represented by the neural activity sampled in the preceding 100 ms, for each of the three responses (colors as in a). By comparison, Fig. 7b shows only the mean-dependent term of the divergence (Eq. (38), Methods). Black bars in a-b show the stimulus period. Means and divergences were computed as averages over n = 10,000 trials. c, Optimal response trajectories (red lines) for continual estimation of the mean of a target distribution (thin green lines). Different shades of red indicate optimal trajectories corresponding to three different target levels (5, 10, and 20 mV, emulating different contrast levels). We optimized neural response trajectories (Supplementary Eq. 39, Supplementary Math Note) so that the distance between their temporal average, computed using a prospective box-car filter (k(t) = 1/T for t ∈ [0, T] and 0 otherwise, with T = 20 ms), and the corresponding Heaviside step target signal would be minimized under a smoothness constraint (ϵsmooth = 1; Supplementary Eq. 37, Supplementary Math Note). Note that the transient overshoot in the optimal response closely resembles those observed in the optimized network (Figs. 5d, 7c-d): its magnitude scales with the value of the target mean, and it is followed by damped oscillations. 
Similar results (with less ringing following the overshoot) were also obtained for an exponentially decaying, ‘leaky’ kernel (not shown). d-e, Relationship between overshoot magnitude and steady state difference: analysis of experimental recordings from awake macaque V1 (ref. 23). d, Overshoot magnitude versus steady state difference: same as Fig. 7e, but restricting the analysis to steady state differences below 60 Hz (to exclude outliers). Red line shows linear regression (± 95% confidence bands). The correlation between overshoot size and steady state difference is still significant: two-sided Wald test p = 1 × 10⁻⁸⁹ (n = 1263 cell-stimulus pairs, R² ≃ 0.27). e, Systematically changing the maximal steady state difference (x-axis) used for restricting the analysis of the correlation between overshoot size and steady state difference (d and Fig. 7e) reveals that the correlation is robust (black line ± 95% confidence intervals) and remains highly significant (blue line, showing corresponding p-values; note logarithmic scale) for all but the smallest threshold (and thus smallest sample size). Horizontal dotted lines show R = 0 correlation (black) and p = 0.05 significance level (blue) for reference. As the maximal steady state difference increases, the number of points n considered also increases from n = 4 to n = 1279. Correlations in a are Pearson’s correlations. Pearson’s R values and corresponding p-values in e were obtained by linear regression performed as in d.
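The trajectory optimization described for panel c can be sketched as a regularized least-squares problem. This is only an illustration under stated assumptions, not the formulation of the Supplementary Math Note, which is not reproduced here: time is discretized in 1 ms steps, the response is taken to be zero before stimulus onset, the box-car average at each time is taken over the preceding T = 20 ms, and smoothness is imposed as a quadratic penalty on first differences weighted by `eps_smooth`. The function name and all parameter names are hypothetical.

```python
import numpy as np

def optimal_trajectory(level_mv, n_steps=200, window=20, eps_smooth=1.0):
    """Minimize ||A r - target||^2 + eps_smooth * ||D r||^2 over r.

    Assumptions (not from the source): 1 ms time steps starting at
    stimulus onset, zero response before onset, causal box-car average
    over the last `window` steps, first-difference smoothness penalty.
    """
    idx = np.arange(n_steps)
    lag = idx[:, None] - idx[None, :]
    # Row t of A averages r over steps t-window+1 .. t (zeros before onset).
    A = ((lag >= 0) & (lag < window)).astype(float) / window
    # Row t of D is r[t] - r[t-1]; row 0 penalizes the jump from baseline 0.
    D = np.eye(n_steps) - np.eye(n_steps, k=-1)
    # Heaviside step target: constant level from onset onwards.
    target = np.full(n_steps, float(level_mv))
    # Normal equations of the quadratic cost (positive definite system).
    lhs = A.T @ A + eps_smooth * (D.T @ D)
    rhs = A.T @ target
    return np.linalg.solve(lhs, rhs)

# Target levels matching the caption: 5, 10 and 20 mV.
trajectories = {level: optimal_trajectory(level) for level in (5.0, 10.0, 20.0)}
overshoots = {level: r.max() - level for level, r in trajectories.items()}
```

Because the cost in this sketch is quadratic, the solution is linear in the target level, so the overshoot scales proportionally with the target mean. The early response must exceed the target because the averaging window still contains pre-onset samples shortly after stimulus onset.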

Extended Data Fig. 10 Control network: no explicit slowness penalty.

To test whether the explicit slowness penalty in our cost function (Eq. (29)) was necessary for obtaining the dynamical behavior exhibited by the optimized network of the main text, we set ϵslow = 0 in Eq. (25). a-b, Network parameters as in Extended Data Fig. 1d-e. c, Sample population activity as in Fig. 2a. d-e, Matching moments between the ideal observer and the network for training stimuli as in Fig. 2c-d. f, Generalization to test stimuli as in Fig. 3a. g, Matching moments between the ideal observer and the network for training stimuli as in Fig. 3b (lavender). Note that here, unlike in Fig. 3, response moments are shown only for training stimuli, not for test stimuli, but by distinguishing variances (blue, bottom) and covariances (lavender, bottom). This network behaved largely identically to the originally optimized network (see also Extended Data Fig. 6). This could be attributed to the fact that our optimization implicitly encouraged fast sampling by default, simply by using a finite averaging window for computing average moments of network responses, and in particular by including samples immediately or shortly following stimulus onset (Methods). Response moments in d-g were estimated from n = 20,000 independent samples (taken 200 ms apart). Population mean moments in f were further averaged across n = 50 E cells. Correlations in e are Pearson’s correlations.
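The moment estimates mentioned in the caption rely on samples spaced 200 ms apart, so that successive samples are approximately independent. A minimal sketch of such subsampling follows; the function name, the array layout (time along the first axis, cells along the second) and the 1 ms simulation step are assumptions for illustration.

```python
import numpy as np

def subsampled_moments(u, dt_ms=1.0, spacing_ms=200.0):
    """Estimate mean and covariance from samples spaced `spacing_ms` apart.

    Assumed layout: u has shape (n_timesteps, n_cells), one row per
    simulation step of duration dt_ms.
    """
    step = int(round(spacing_ms / dt_ms))
    samples = np.asarray(u, dtype=float)[::step]  # keep every `step`-th row
    mean = samples.mean(axis=0)
    cov = np.cov(samples, rowvar=False)           # cells are columns
    return mean, cov

# Deterministic toy input: 2,000 steps, 2 cells, so 10 samples are kept.
u_toy = np.arange(4000, dtype=float).reshape(2000, 2)
mean_toy, cov_toy = subsampled_moments(u_toy)
```

The spacing trades sample count against independence: a shorter spacing yields more samples from the same simulation, but they become increasingly correlated.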

Supplementary information

Supplementary Math Note, Supplementary Figs. 1 and 2 and Supplementary Table 1.

Cite this article

Echeveste, R., Aitchison, L., Hennequin, G. et al. Cortical-like dynamics in recurrent circuits optimized for sampling-based probabilistic inference. Nat Neurosci 23, 1138–1149 (2020). https://doi.org/10.1038/s41593-020-0671-1

DOI: https://doi.org/10.1038/s41593-020-0671-1
