Abstract

This paper presents a comprehensive study of deep-learning-based computer-aided diagnosis (CADx) for differentiating benign from malignant nodules/lesions. The approach avoids the potential errors caused by inaccurate image processing results (e.g., boundary segmentation) and the classification bias resulting from a less robust feature set, both of which affect most conventional CADx algorithms. Specifically, the stacked denoising autoencoder (SDAE) is applied to two CADx applications: the differentiation of breast lesions in ultrasound images and of pulmonary nodules in CT scans. The SDAE architecture is equipped with automatic feature exploration and noise tolerance, and hence may be well suited to the intrinsically noisy medical image data from various imaging modalities. To show that SDAE-based CADx outperforms the conventional scheme, two recent conventional CADx algorithms are implemented for comparison. Ten repetitions of 10-fold cross-validation are conducted to evaluate the efficacy of the SDAE-based CADx algorithm. The experimental results show a significant performance boost of the SDAE-based CADx algorithm over the two conventional methods, suggesting that deep learning techniques can potentially change the design paradigm of CADx systems without the need for explicit design and selection of problem-oriented features.


Introduction

Computer-aided diagnosis (CADx) is a computerized procedure that provides a second, objective opinion to assist medical image interpretation and diagnosis1,2,3,4,5,6,7,8,9,10. One of the major CADx applications is differentiating malignant from benign tumors/lesions3,4,11,12,13,14,15. Several studies have suggested that incorporating a CADx system into the diagnostic process can improve image diagnosis by decreasing inter-observer variation16,17 and by providing quantitative support for clinical decisions such as biopsy recommendations5. Specifically, CADx systems have been shown to be effective in assisting the diagnostic workup by reducing unnecessary false-positive biopsies6 and thoracotomies10.

To identify malignancy, a conventional CADx system is often composed of three main steps: feature extraction4,6,7,12,13,14,15,18, feature selection18,19,20,21, and classification. These three steps need to be addressed separately and then integrated to tune the overall CADx performance. In most previous works, engineering an effective feature extraction step for each specific problem was regarded as one of the most important issues4,6,7,12,13,14,15,18, because discriminative features can ease the subsequent steps of feature selection and classification. Nevertheless, feature engineering is problem-oriented and still relies on the subsequent feature selection and on feature integration by the classifier to achieve accurate lesion/nodule differentiation. In general, diagnostic image features can be categorized into morphological and textural features.

The extraction of effective features is per se a complicated task that requires a series of image processing steps. These steps involve 1) image segmentation4,6,14,15,22,23,24 for computing morphological features4,6,7,14,15,22, which unfortunately remains quite difficult25, and 2) image decomposition12,19 followed by statistical summarization and presentation12,19,20,26 for computing textural features27. Accordingly, the usefulness of the extracted features depends heavily on the quality of each intermediate image processing result4,6,14,22,27, and obtaining satisfactory intermediate results often requires many passes of trial-and-error design. Moreover, computerized segmentation results often require case-by-case user intervention, e.g., parameter adjustment6,28, manual refinement6,9,28,29,30,31, or solution selection4,23, to improve contour correctness4,6,13,15,23. However, with user intervention on the segmentation results, the differential diagnostic outcome of the CADx system becomes biased and is no longer objective. In summary, designing the conventional CADx framework and tuning its overall performance tends to be very arduous, as many image processing issues need to be resolved.

Deep learning for CADx

Recently, deep learning techniques have been introduced to medical image analysis with promising results in various applications, including on 3D and 4D image data, such as computerized prognosis for Alzheimer’s disease and mild cognitive impairment32, organ segmentation33 and detection34, and ultrasound standard plane selection35. In the context of CAD, most works have focused on abnormality detection (CADe)36,37,38. For CADx, the convolutional neural network model OverFeat39 was employed in an ensemble fashion in the work40 to classify peri-fissural nodules, achieving an AUC of around 0.86. In this study, we further exploit the stacked denoising autoencoder (SDAE)41 deep learning model to differentiate distinct types of lesions and nodules depicted in different imaging modalities.

Deep learning techniques could potentially change the design paradigm of CADx frameworks, owing to three advantages over conventional frameworks. First, deep learning can uncover features directly from the training data, which significantly alleviates the effort of explicitly elaborating feature extraction. The features learned by the neurons may complement and even surpass the discriminative power of conventional feature extraction methods. Second, feature interaction and hierarchy can be exploited jointly within the intrinsic deep architecture of a neural network, which significantly simplifies the feature selection process. Third, the three steps of feature extraction, selection and supervised classification can be realized within the optimization of the same deep architecture, so the performance can be tuned more easily and systematically.

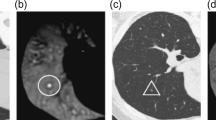

The SDAE is a denoising version of the stacked autoencoder (SAE)42. With its intrinsic data reconstruction mechanism, the SAE/SDAE architecture can automatically discover diverse representative patterns in the data, and can therefore potentially cope with the high variation in the shape and appearance of lesions/tumors. Meanwhile, with its automatic feature extraction and noise tolerance, the SDAE-based CADx scheme can circumvent potentially inaccurate image processing results, which can lead to unreliable features in the conventional CADx framework. To show its wide applicability, the SDAE-based CADx model is applied to the differential diagnosis of breast lesions in ultrasound (US) images and of pulmonary nodules in CT images. For performance comparison, we implement two CADx methods, RANK19 and CURVE12, which were specifically developed for the breast and lung applications, respectively, as well as clinical morphological features (MORPH). The MORPH features are computed either from experts’ drawings or from boundaries generated by the level set43 (DRLSE) and grow-cut44 (GC) segmentation methods. We also explore the effect of combining the RANK and CURVE features with the MORPH features. The experimental results show that the deep-learning-based CADx achieves better differentiation performance than the comparison methods across different modalities and diseases. To illustrate the challenges of the two CADx applications, Fig. 1 shows several cases of breast lesions in US images and pulmonary nodules in CT images. The cases in Fig. 1 may be difficult to differentiate for a person without a medical background, and even for a junior medical doctor.

Experimental Results

Experimental setup

We compare the performance of the SDAE-based CADx with that of the baseline methods using six assessment metrics: 1) area under the receiver operating characteristic curve (AUC), 2) accuracy (ACC), 3) sensitivity (SENS), 4) specificity (SPEC), 5) positive predictive value (PPV), and 6) negative predictive value (NPV). To assess robustness against data dependence, we perform 10 repetitions of 10-fold cross-validation for the SDAE and the baseline algorithms on both the breast US and lung CT datasets. In each repetition, the partition into training and testing data is determined randomly. To illustrate how the performance of conventional CADx frameworks depends on the specific problem, all baseline algorithms are applied to both datasets.
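The six metrics can be computed per fold from predicted labels and continuous scores. The following is a minimal Python sketch, assuming binary labels with malignant = 1 as the positive class and a per-sample malignancy score for the AUC; the function name `assess` is hypothetical. The AUC is obtained here with the Mann-Whitney formulation (the fraction of malignant/benign pairs ranked correctly, ties counted as one half), which is one standard estimator; the paper does not state how its AUC was computed.

```python
def assess(labels, preds, scores):
    """Compute AUC, ACC, SENS, SPEC, PPV and NPV for a binary task.

    labels, preds: sequences of 0 (benign) / 1 (malignant).
    scores: continuous malignancy scores, used only for the AUC.
    """
    tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
    tn = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 0)
    fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)

    def ratio(num, den):
        # Guard against empty denominators (e.g., no positive predictions).
        return num / den if den else float("nan")

    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    # Mann-Whitney estimate of the AUC: correctly ordered pairs, ties count 0.5.
    wins = sum(1.0 if sp > sn else 0.5 if sp == sn else 0.0
               for sp in pos for sn in neg)

    return {
        "AUC": ratio(wins, len(pos) * len(neg)),
        "ACC": ratio(tp + tn, tp + tn + fp + fn),
        "SENS": ratio(tp, tp + fn),
        "SPEC": ratio(tn, tn + fp),
        "PPV": ratio(tp, tp + fp),
        "NPV": ratio(tn, tn + fn),
    }
```

For example, `assess([1, 1, 0, 0], [1, 0, 0, 0], [0.9, 0.4, 0.3, 0.1])` gives ACC = 0.75, SENS = 0.5, SPEC = 1.0 and AUC = 1.0, showing that a threshold choice can miss a malignant case even when the scores rank all cases correctly.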

Two slice selection strategies, SINGLE and ALL, are implemented for the lung CT CADx problem. For the SINGLE strategy, there are 1400 training and testing ROI samples in total, each standing for a distinct nodule. In the ALL strategy, all member slices of each nodule are used for training and testing. Specifically, in each fold of the 10-fold cross-validation, the member slices of the 1260 training nodules are randomly permuted to form the training samples, and each of the 140 testing nodules is classified by majority voting over its member slices. In total, the ALL strategy involves 10133 slices. Because the slice thickness varies widely (0.6–5 mm), fully 3D image features may not be suitable for lung CADx.

The random selection process ensures that the folds of each 10-fold partition are mutually exclusive. In each 10-fold cross-validation, all algorithms share the same sample partition on each fold for a fair comparison. For both the lung and breast applications, we use the same SDAE architecture with two hidden layers containing 200 and 100 neurons, respectively. For both the lung CT and breast US data, the malignant class is defined as the positive class.
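The shared, repeated partitioning can be sketched as follows. This is a minimal illustration under stated assumptions: folds are drawn by plain random shuffling (the paper does not say whether folds are stratified by class), and the names `repeated_kfold`, `train_test_splits` and the `seed` parameter are hypothetical. Generating the partitions once and reusing them for every algorithm is what keeps the comparison fair.

```python
import random


def repeated_kfold(n_samples, k=10, repeats=10, seed=0):
    """Return `repeats` random k-fold partitions of range(n_samples).

    Each partition is a list of k mutually exclusive folds (lists of indices).
    """
    rng = random.Random(seed)
    partitions = []
    for _ in range(repeats):
        idx = list(range(n_samples))
        rng.shuffle(idx)
        # Deal shuffled indices round-robin so fold sizes differ by at most one.
        partitions.append([idx[f::k] for f in range(k)])
    return partitions


def train_test_splits(partition):
    """Yield (train, test) index lists, holding out one fold at a time."""
    for f, test in enumerate(partition):
        train = [i for g, fold in enumerate(partition) if g != f for i in fold]
        yield train, test
```

With the 1400 lung nodules, each fold holds 140 nodules, so every split has 1260 training and 140 testing nodules; 10 repetitions yield the 100 folds summarized in the results.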

Results

To illustrate the patterns automatically discovered by the SDAE, Fig. 2 shows several patterns learned at the pre-training step for pulmonary nodules in CT images and breast lesions in US images. Subfigures (a) and (c) show the diverse appearance patterns of various nodules/lesions summarized at the first hidden layer. Subfigures (b) and (d) show the patterns at the second hidden layer, which automatically encode intertwined and hierarchical relations among the first-hidden-layer patterns.

Examples of constructed patterns in the first and second hidden layers at the pre-training step: (a,b) patterns of the first and second hidden layers for pulmonary nodules; (c,d) patterns of the first and second hidden layers for breast lesions. An SDAE architecture with two hidden layers is used in this study for the differentiation of pulmonary nodules and breast lesions. Note that the patterns of the second hidden layer are constructed as weighted sums of all patterns in the first layer. In this reconstruction, all first-layer neurons are simply assumed to be activated; the actual neuron activation can be more complicated when real image data are fed in. In (b,d), the example patterns enclosed by the yellow rectangles have positive weights to the RN nodule and benign lesion classes in the supervised training step, whereas the patterns in the blue regions are connected with positive weights to the RM nodule and malignant lesion classes. As can be observed in (b,d), the second-hidden-layer patterns appear fuzzier owing to the weighted summation. All patterns are normalized for clearer presentation.
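The visualization rule in the caption (second-layer pattern = weighted sum of all first-layer patterns, with every first-layer neuron assumed fully activated) can be sketched in a few lines. This is an illustrative sketch only: it ignores biases and nonlinearities, and it assumes min-max scaling to [0, 1] as the "normalization for clearer presentation"; the function names are hypothetical.

```python
def normalize(pattern):
    """Min-max normalize a pattern to [0, 1] for display."""
    lo, hi = min(pattern), max(pattern)
    if hi == lo:
        return [0.0 for _ in pattern]
    return [(v - lo) / (hi - lo) for v in pattern]


def second_layer_patterns(w1, w2):
    """Visualize second-layer units as weighted sums of first-layer patterns.

    w1: first-layer weights, one row (length n_pixels) per first-layer unit.
    w2: second-layer weights, one row (length len(w1)) per second-layer unit.
    With all first-layer units assumed active, pattern k is sum_j w2[k][j] * w1[j].
    """
    n_pixels = len(w1[0])
    patterns = []
    for row in w2:
        pat = [sum(row[j] * w1[j][p] for j in range(len(w1)))
               for p in range(n_pixels)]
        patterns.append(normalize(pat))
    return patterns
```

Because every second-layer pattern mixes many first-layer patterns, the summed images tend to look smoother, which is consistent with the fuzzier appearance noted in the caption.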

Tables 1 and 2 summarize the statistics of the six performance metrics for all algorithms on the lung CT and breast US datasets, respectively. To visualize the performance agreement between each pair of compared algorithms (SDAE, CURVE, RANK, and MORPH) over all 100 folds, Bland-Altman plots45 of the ACC metric on the lung and breast datasets are drawn in Figs 3 and 4, respectively. In a Bland-Altman plot, the horizontal axis shows the mean of the two methods’ assessment values, and the vertical axis shows their difference. The red line indicates the mean difference, whereas the top and bottom blue lines mark the mean difference ± 1.96 standard deviations of the difference values.
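The quantities drawn in each Bland-Altman plot can be computed as below. A minimal sketch, assuming `a` and `b` hold the per-fold ACC values of two methods evaluated on the same 100 folds; it uses the sample standard deviation, since the paper does not say which estimator was used. The function name is hypothetical.

```python
import statistics


def bland_altman(a, b):
    """Bland-Altman statistics for paired measurements a and b.

    Returns the per-pair means (x-axis), the differences a - b (y-axis),
    the mean difference (red line) and the limits mean +/- 1.96 SD (blue lines).
    """
    means = [(x + y) / 2 for x, y in zip(a, b)]
    diffs = [x - y for x, y in zip(a, b)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation of the differences
    return means, diffs, bias, (bias - 1.96 * sd, bias + 1.96 * sd)
```

A positive mean difference with both limits above zero would indicate that the first method is consistently better across folds, not merely better on average.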

As can be observed from Figs 3 and 4 and Tables 1 and 2, the SDAE algorithm performs better than the other three algorithms. For lung nodule differentiation, the SDAE algorithm achieves on average ACC values at least 0.15 and 0.1 higher than the CURVE, RANK and MORPH methods under the ALL and SINGLE strategies, respectively. The CURVE algorithm outperforms the RANK algorithm in terms of mean ACC under both the ALL and SINGLE strategies. The mean ACC differences between CURVE and MORPH are close to 0 under both strategies. The mean ACC differences between RANK and MORPH are all below 0, suggesting that, on average, RANK does not outperform MORPH. For the breast application, the SDAE achieves on average ACC values at least 0.04 higher than RANK, CURVE, and MORPH. CURVE performs worse than RANK, with mean ACC differences of around −0.02, but better than MORPH, with mean ACC differences of around 0.05. RANK, on the other hand, classifies breast lesions better than the clinical MORPH features, with mean ACC differences of more than 0.05. To further illustrate the ACC and AUC distributions of SDAE, CURVE, RANK and MORPH, the corresponding box plots on the lung and breast datasets are shown in Fig. 5. Tables 1 and 2 also show that the morphological features computed from the DRLSE and GC segmentations do not help much in boosting performance, because satisfactory lesion/nodule boundaries are difficult to obtain without user intervention. Image segmentation is discussed further in the second section of the supplementary material.

As Fig. 5 shows, the performance distributions differ substantially in most cases, except for the pairs “CURVE-MORPH-ALL” and “CURVE-MORPH-SINGLE”. To further investigate the significance of the differences for these two pairs, a two-sample t-test is applied. The ACC p-values of the pairs “CURVE-MORPH-ALL” and “CURVE-MORPH-SINGLE” are 0.69 and 0.32, respectively, whereas the corresponding AUC p-values are 0.0001 and 0.088. These results suggest that the ACC differences between CURVE and MORPH are not significant under either strategy, and that their AUC difference is not significant under the SINGLE strategy but is significant under the ALL strategy.
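For reference, the statistic behind such a comparison can be sketched with the standard library. This sketch assumes Student's pooled-variance form (the paper does not say whether a pooled or Welch variant was used) and replaces the exact t-distribution p-value with a normal approximation, which is only reasonable for large degrees of freedom such as the 198 obtained from two sets of 100 folds; for exact p-values, a statistics package such as SciPy's `ttest_ind` would normally be used. The function name is hypothetical.

```python
import math
import statistics


def two_sample_t(a, b):
    """Student's two-sample t-test with pooled variance (equal variances assumed).

    Returns (t, df, p_approx); p_approx is a two-sided p-value from the normal
    approximation to the t distribution, adequate only for large df.
    """
    na, nb = len(a), len(b)
    va, vb = statistics.variance(a), statistics.variance(b)
    pooled = ((na - 1) * va + (nb - 1) * vb) / (na + nb - 2)
    se = math.sqrt(pooled * (1 / na + 1 / nb))
    t = (statistics.mean(a) - statistics.mean(b)) / se
    df = na + nb - 2
    p_approx = 2 * (1 - statistics.NormalDist().cdf(abs(t)))
    return t, df, p_approx
```

Identical samples give t = 0 and p = 1; the larger |t| becomes relative to the pooled spread, the smaller the p-value.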

Discussion and Concluding Remarks

The experimental results show that the SDAE algorithm outperforms the conventional CADx algorithms on both applications. Specifically, as can be observed in Table 1 and Figs 3 and 5, the SDAE algorithm differentiates lung nodules significantly better than the two baseline algorithms and the simple clinical MORPH features in terms of all six assessment metrics. In particular, the SDAE algorithm achieves much better differentiation performance with the ALL strategy. This suggests that including more member slices as training data can considerably boost the performance of a deep learning CADx scheme, even if some of the training slices depict the nodule of interest only partially. This may be because additional member slices provide richer image contexts from which the SDAE model can learn to discriminate. For breast lesion classification, the SDAE algorithm still outperforms the two texture-based algorithms, as shown in Table 2 and Figs 4 and 5. It is also worth noting that the lung nodule dataset is almost three times as large as the breast dataset.

Referring to Tables 1 and 2, the CURVE and RANK algorithms each perform better on the problem they were originally designed for than the other conventional CADx methods do. As the comparison of the two slice selection strategies for the lung CADx problem in Table 1 and Figs 3, 4 and 5 shows, using all slices does not help the CURVE and RANK algorithms much. In particular, the RANK algorithm with the ALL strategy performs slightly worse than with the SINGLE strategy. This may be because the features used in the RANK algorithm are not only ineffective for nodule differentiation but also confuse the classifier when faced with the rich image contexts of the various member slices. Accordingly, features for the conventional CADx framework may need to be specifically designed for each problem at hand. So far, hardly any general features have proven effective for all kinds of diseases and modalities.

Referring to Table 1 and Figs 3 and 5, the nodule classification performance of the CURVE algorithm with either the ALL or SINGLE strategy is not significantly different from that attained by the MORPH features from experts’ drawings in terms of ACC. This suggests that elaborate feature extraction for CADx may sometimes not significantly outperform the simple morphological features commonly used in clinical practice, and that designing effective features for a specific CADx problem can be arduous. In contrast, the SDAE-based CADx algorithm performs well on both problems with the same architecture setting and does not require explicit elaboration of feature extraction or of feature relations. Moreover, as illustrated in Fig. 2, the SAE/SDAE models come with a mechanism for visualizing the patterns learned by the neurons in the unsupervised phase, which can help developers and even medical doctors understand the machine learning model more easily. These advantages, together with the natural end-to-end training of deep learning methods, can potentially benefit interdisciplinary applications such as CADx by enabling people with engineering and clinical backgrounds to work more closely together and develop more effective solutions.

Although the SDAE algorithm does not perform as well as the earlier morphology-based framework4 on the breast dataset, it does not need an image segmentation process to obtain the lesion boundary. The work4 requires a user to manually define an ROI and to select one segmentation result from five lesion boundary proposals generated by the segmentation algorithm. So far, no algorithm can guarantee perfect automatic segmentation results for every object of interest and imaging modality. For example, segmentation of pulmonary nodules in CT scans may include nearby tissues, e.g., vessels and airways, because of their similar intensity distribution, whereas the accuracy of breast lesion segmentation in US images is easily degraded by severe shadowing. Tables 1 and 2 also report the effect of combining morphological and textural features in the conventional CADx schemes. To avoid user intervention in the segmentation process, the initialization and parameters of the DRLSE and GC methods are fixed. As can be seen in Tables 1 and 2, the MORPH features from the DRLSE and GC contours are not very helpful, possibly because the segmentation results are imperfect and the derived MORPH features are therefore less reliable. This is consistent with the conclusion of the work4 that the quality of the segmentation result matters for CADx schemes based on morphological features. To obtain better morphological features, the computed lesion/nodule boundaries usually require manual intervention, including model initialization13,15, parameter adjustment6,13,15, and boundary refinement6 and selection4,23.
Nevertheless, user intervention biases the computerized differentiation results, because processes such as boundary refinement and selection involve subjective judgment of the lesion/nodule. Accordingly, the objectiveness of computerized diagnosis may no longer hold. In contrast, the SDAE algorithm circumvents the complex image segmentation step and still achieves objective and satisfactory performance without any user intervention. The SDAE algorithm could potentially attain even better performance with a larger breast US dataset.

One major line of research on textural feature computation is based on statistics of the Grey Level Co-occurrence Matrix (GLCM). To boost differentiation performance, most promising texture-based CADx methods, e.g., the works12,19, decompose the raw image into independent components with a bank of filters and then compute the GLCM of each component at multiple scales and orientations. From each GLCM, quite a few texture features can be derived, e.g., 12 in the work19 and 14 in the work12. In such a framework, the combination of filter bank parameters, GLCM scale and orientation settings, and the number of features derived from each GLCM can lead to a large number of features. In this case, a feature selection step has to be applied19,20 to find a subset of the most useful features. Since feature selection is itself tedious and requires repeating the training process many times, the whole CADx learning process becomes a very long cycle. By contrast, the training process of the deep architecture is relatively simple, yet it achieves better performance; see Fig. 1 for comparison.
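The growth of such a feature pool is multiplicative. As a purely illustrative count (the values of C, D and O below are hypothetical; only F = 14 is taken from the work12), suppose each of C decomposed components yields a separate GLCM for each of D co-occurrence distances and O orientations, and F features are derived from each GLCM:

```latex
N = C \times D \times O \times F,
\qquad
C = 6,\; D = 3,\; O = 4,\; F = 14
\;\Longrightarrow\;
N = 6 \times 3 \times 4 \times 14 = 1008 .
```

Averaging each feature over the O orientations, as the CURVE algorithm does, would reduce this illustrative count by a factor of O, to 252, which is still a large pool from which a selection step must choose.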

In summary, the deep learning architecture offers the advantages of 1) automatic discovery of object features, 2) automatic exploration of feature hierarchy and interaction, 3) a relatively simple end-to-end training process, and 4) systematic performance tuning. Although deep learning has been successfully applied to many image- and acoustic-related problems46, it is less explored in the context of CADx. In this study, the efficacy of a deep-learning-based CADx scheme has been corroborated with extensive experiments. Compared with the conventional CADx framework, the training procedure of deep learning is relatively simple yet effective, without the need for explicit design of problem-oriented features. To the best of our knowledge, this is the first deep-learning-based CADx study that demonstrates outperformance over state-of-the-art CADx algorithms across different imaging modalities and diseases. This work may thus serve as a reference for further deep learning studies on other CADx problems, on higher-dimensional image data, and on images from heterogeneous imaging modalities, toward more accurate and reliable computerized diagnostic support.

Methods

Datasets

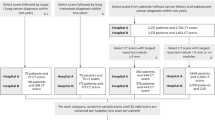

The methods were carried out in accordance with the approved guidelines. All experimental protocols were approved by Taipei Veterans General Hospital and the Lung Image Database Consortium (LIDC)47,48. Informed consent was obtained from all subjects. The breast US images used here were acquired at Taipei Veterans General Hospital, Taipei, Taiwan4, with proper IRB approvals. In total, 520 breast sonograms were acquired from 520 patients, comprising 275 benign and 245 malignant lesions. All breast lesions were histopathologically proven by biopsy, mastectomy, or similar procedures. More details about the breast lesions can be found in the work4. The LIDC47,48 retrospectively collected lung CT image data from 1,010 patients (with appropriate local IRB approvals) at seven academic institutions in the United States using various CT machines. The slice thickness of the CT scans ranges from 0.6 mm to 5 mm. Twelve radiologists from five sites were involved in the annotation process, and nodules with diameters larger than 3 mm were further annotated with malignancy ratings. In this study, we randomly select 700 malignant and 700 benign nodules to form a balanced experimental dataset. Specifically, the benign set includes annotated nodules with scores of 1 and 2 in the “likelihood of malignancy” rating, whereas nodules scored 4 and 5 are included in the malignant set; nodules scored 3 therefore belong to neither set.
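The labelling rule and balanced sampling can be sketched as follows. This is a minimal sketch under a loudly stated assumption: each nodule carries a single consolidated malignancy rating, whereas LIDC nodules may be rated by several radiologists and the paper does not describe how those ratings were combined. The function names and the `seed` parameter are hypothetical.

```python
import random


def label_from_rating(rating):
    """Map a 'likelihood of malignancy' rating (1-5) to a class label.

    Ratings 1-2 -> benign (0); ratings 4-5 -> malignant (1); rating 3 -> excluded (None).
    """
    if rating in (1, 2):
        return 0
    if rating in (4, 5):
        return 1
    return None


def balanced_sample(nodules, n_per_class, seed=0):
    """Randomly draw n_per_class benign and n_per_class malignant nodule ids.

    nodules: list of (nodule_id, rating) pairs, assuming ONE rating per nodule.
    """
    rng = random.Random(seed)
    benign = [nid for nid, r in nodules if label_from_rating(r) == 0]
    malignant = [nid for nid, r in nodules if label_from_rating(r) == 1]
    return rng.sample(benign, n_per_class), rng.sample(malignant, n_per_class)
```

Calling `balanced_sample(nodules, 700)` would mirror the 700/700 split described above, provided at least 700 nodules fall into each class.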

SDAE-based CADx framework

The training of an SDAE-based CADx framework is realized in two steps: pre-training and supervised training. Figure 6 illustrates the flowchart of the SDAE-based CADx framework. To facilitate training and differentiation with the SDAE architecture, the image ROIs are resized to 28 × 28 patches, and each pixel of a patch is treated as an input neuron. To preserve the original size and shape information, the scale factors used to resize the two ROI dimensions and the aspect ratio of the original ROI are added to the input layer in the supervised training step.
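The input preparation can be sketched as below. This sketch rests on assumptions the paper does not pin down: nearest-neighbour interpolation is used for brevity (a smoother interpolation is more likely in practice), each scale factor is defined as target size divided by original size, and the aspect ratio as width divided by height. The function names are hypothetical.

```python
def resize_nearest(roi, out_h=28, out_w=28):
    """Nearest-neighbour resize of a 2D list `roi` to out_h x out_w."""
    in_h, in_w = len(roi), len(roi[0])
    return [[roi[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def roi_to_inputs(roi, size=28):
    """Flatten a resized ROI and compute the three auxiliary size/shape inputs.

    Returns (pixels, aux): `pixels` has size * size values (pre-training input),
    and aux = [scale_x, scale_y, aspect_ratio] (added for supervised training).
    """
    h, w = len(roi), len(roi[0])
    patch = resize_nearest(roi, size, size)
    pixels = [v for row in patch for v in row]
    scale_x = size / w   # resize factor along x (columns), assumed definition
    scale_y = size / h   # resize factor along y (rows), assumed definition
    aspect = w / h       # aspect ratio of the original ROI, assumed width/height
    return pixels, [scale_x, scale_y, aspect]
```

For a 56-row by 14-column ROI, this yields 784 pixel inputs plus the auxiliary values [2.0, 0.5, 0.25], so two ROIs that look alike after resizing can still be told apart by their original size and elongation.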

The pixels of the resized ROIs are fed into the network at the pre-training step. The pre-trained network is then refined by supervised training, for which three neurons carrying the aspect ratio of the original ROI and the two resizing factors are added to the input layer. The final classification result is given by softmax classification.

At the pre-training step, the input ROIs are degraded by random corruption, and the network then identifies noise-tolerant representative patterns of the lesions/tumors. Meanwhile, representative patterns of higher semantic levels, and their composite/interaction relations with the lower-level patterns, are sought by constructing autoencoders layer by layer (see Fig. 2). The architecture constructed at the pre-training step serves as a reliable network initialization for the subsequent supervised training.
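Greedy layer-wise denoising pre-training can be sketched in pure Python as follows. This is an illustrative sketch, not the authors' implementation: masking noise (zeroing a random fraction of inputs), tied weights, sigmoid units, a squared reconstruction error against the clean input, plain SGD, the learning rate and the corruption level are all assumptions; the paper's own formulation is given in its supplementary material. Class and function names are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class DenoisingAutoencoder:
    """One tied-weight denoising autoencoder layer trained with plain SGD."""

    def __init__(self, n_in, n_hidden, corruption=0.3, seed=0):
        self.rng = random.Random(seed)
        self.W = [[self.rng.uniform(-0.1, 0.1) for _ in range(n_in)]
                  for _ in range(n_hidden)]
        self.b = [0.0] * n_hidden  # hidden biases
        self.c = [0.0] * n_in      # reconstruction biases
        self.corruption = corruption

    def encode(self, x):
        return [sigmoid(sum(w * v for w, v in zip(row, x)) + bj)
                for row, bj in zip(self.W, self.b)]

    def decode(self, h):
        # Tied weights: the decoder uses the transpose of W.
        return [sigmoid(sum(self.W[j][i] * h[j] for j in range(len(h))) + ci)
                for i, ci in enumerate(self.c)]

    def loss(self, x):
        """Squared reconstruction error of a clean input."""
        r = self.decode(self.encode(x))
        return 0.5 * sum((ri - xi) ** 2 for ri, xi in zip(r, x))

    def sgd_step(self, x, lr=0.5):
        # Masking noise: each input is zeroed with probability `corruption`.
        x_tilde = [0.0 if self.rng.random() < self.corruption else v for v in x]
        h = self.encode(x_tilde)
        r = self.decode(h)
        # Backpropagate 0.5 * ||r - x||^2 (target is the CLEAN input x).
        d_out = [(ri - xi) * ri * (1 - ri) for ri, xi in zip(r, x)]
        d_hid = [sum(self.W[j][i] * d_out[i] for i in range(len(x))) * hj * (1 - hj)
                 for j, hj in enumerate(h)]
        # Tied weights receive gradient from both the encoder and the decoder.
        for j in range(len(h)):
            for i in range(len(x)):
                self.W[j][i] -= lr * (d_hid[j] * x_tilde[i] + h[j] * d_out[i])
            self.b[j] -= lr * d_hid[j]
        for i in range(len(x)):
            self.c[i] -= lr * d_out[i]


def pretrain_stack(data, sizes, epochs=50, seed=0):
    """Greedy layer-wise pre-training: each layer learns from the codes below it."""
    layers, inputs = [], data
    for k, n_hidden in enumerate(sizes):
        dae = DenoisingAutoencoder(len(inputs[0]), n_hidden, seed=seed + k)
        for _ in range(epochs):
            for x in inputs:
                dae.sgd_step(x)
        layers.append(dae)
        inputs = [dae.encode(x) for x in inputs]
    return layers
```

With the architecture used in this study, the call would be `pretrain_stack(patches, [200, 100])` on 784-value inputs; a practical implementation would use vectorized array code and mini-batches rather than these nested loops.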

The supervised training of the SDAE-based CADx framework fine-tunes the network to yield the desired discriminative performance. The scaling factors in the x and y dimensions and the aspect ratio are treated as three new input neurons, and two output neurons for the benign and malignant classes are added on top of the network for supervised training (see Fig. 6). The modified network thus consists of the pre-trained architecture as initialization, the three new input neurons, and the two outputs. The supervised training is then performed with conventional back-propagation to fine-tune the whole network. With this network architecture and the two training steps, feature extraction and selection are realized systematically and jointly, with less need for explicit and ad hoc elaboration. Technical details of the SDAE model can be found in the first section of the supplementary material.
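The softmax output and its error signal can be sketched as below. This illustrates only the top layer under assumptions (cross-entropy loss, plain gradient descent, hypothetical function names); in full fine-tuning, the error `p - onehot(label)` computed here is back-propagated through every layer, including the weights attached to the three added input neurons.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def output_layer_step(V, d, features, label, lr=0.1):
    """One gradient step on a two-class softmax output layer (cross-entropy).

    V: 2 rows of weights (benign, malignant); d: 2 biases, updated in place.
    features: activations of the top hidden layer.
    label: 0 = benign, 1 = malignant. Returns the loss before the update.
    """
    logits = [sum(v * f for v, f in zip(row, features)) + dk
              for row, dk in zip(V, d)]
    p = softmax(logits)
    loss = -math.log(p[label])
    # Gradient of the cross-entropy w.r.t. the logits is p - onehot(label).
    g = [pk - (1.0 if k == label else 0.0) for k, pk in enumerate(p)]
    for k in range(len(V)):
        for i, f in enumerate(features):
            V[k][i] -= lr * g[k] * f
        d[k] -= lr * g[k]
    return loss
```

Starting from zero weights, the first call on a malignant example returns log 2 (both classes at probability 0.5), and repeated calls on the same example lower the loss as the malignant logit grows.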

Conventional CADx Algorithms for Comparison

Two texture-based CADx schemes, for breast US19 and lung CT12, are implemented for comparison. Morphological features are not considered in these baselines, since they require an image segmentation process, which is very hard and often requires manual refinement of the automated segmentation results. Textural features, on the other hand, can be computed directly from the ROI. The texture-based CADx schemes are therefore relatively automatic and objective, and hence more suitable as comparison baselines. The implemented breast US CADx algorithm19, called RANK, is the state-of-the-art texture-based method for breast lesion classification. The RANK algorithm applies the ranklet transform, which has been shown to be robust to speckle noise, to decompose the US image into several independent image components. GLCM-based texture features are computed from the ranklet components, and feature selection is conducted with the bootstrap method19,20 to find an effective subset of features for classification. We adopted the best feature subset reported in the work19 as input to an SVM to obtain the final classification result for each US ROI.

For lung nodule differentiation, the latest lung CT CADx algorithm12, denoted CURVE, is implemented. The CURVE algorithm applies the curvelet transform to decompose the raw image into several sub-band components and then computes the GLCM of each component to derive texture features. To reduce feature dimensionality, the CURVE algorithm averages the textural features over all angle settings of each GLCM before classification with an SVM. Note that the RANK and CURVE algorithms share some common steps, such as image decomposition with transform techniques, computation of GLCM features, and SVM-based classification, although their feature selection and integration processes differ.
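The GLCM step with angle averaging can be sketched as follows. This is a generic illustration, not the CURVE implementation: it omits the curvelet decomposition (in CURVE, the GLCM is computed on each sub-band), uses only distance 1, and computes just two common Haralick-style features (contrast and energy) as examples. The function names and `ANGLE_OFFSETS` are hypothetical; libraries such as scikit-image offer GLCM routines for production use.

```python
def glcm(img, dx, dy, levels):
    """Symmetric, normalized grey-level co-occurrence matrix for offset (dx, dy).

    img: 2D list of integer grey levels in [0, levels).
    """
    P = [[0.0] * levels for _ in range(levels)]
    h, w = len(img), len(img[0])
    for r in range(h):
        for c in range(w):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < h and 0 <= c2 < w:
                a, b = img[r][c], img[r2][c2]
                P[a][b] += 1
                P[b][a] += 1  # count both directions (symmetric GLCM)
    total = sum(map(sum, P))
    return [[v / total for v in row] for row in P] if total else P


def contrast(P):
    return sum(P[i][j] * (i - j) ** 2 for i in range(len(P)) for j in range(len(P)))


def energy(P):
    return sum(v * v for row in P for v in row)


# Offsets (dx, dy) for 0, 45, 90 and 135 degrees at distance 1 (rows grow downward).
ANGLE_OFFSETS = [(1, 0), (1, -1), (0, -1), (-1, -1)]


def angle_averaged_features(img, levels):
    """Average each texture feature over the four orientations."""
    mats = [glcm(img, dx, dy, levels) for dx, dy in ANGLE_OFFSETS]
    n = len(mats)
    return {"contrast": sum(contrast(P) for P in mats) / n,
            "energy": sum(energy(P) for P in mats) / n}
```

On a 2 × 2 checkerboard, horizontal and vertical neighbours always differ while diagonal neighbours match, so averaging over angles gives contrast 0.5 and energy 0.75; this is how averaging collapses direction-specific values into one rotation-insensitive value per feature.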

Classification with Clinical Features

To further assess the effectiveness of the SDAE-based CADx algorithm, simple clinical (MORPH) features, which are commonly used as a preliminary reference for differential diagnosis in clinical practice, are also implemented. Specifically, size and diameter features of the breast lesions and pulmonary nodules are computed from the US images and CT scans, respectively. For pulmonary nodules, we compute three quantitative features: the nodule volume, the maximum major-axis length of the ellipse approximating the nodule contour over all member slices, and the maximum nodule area over all member slices. Note that the computed nodule volume only approximates the real value because of the slice thickness effect and segmentation errors. The three quantitative features are then classified as benign or malignant with an SVM. Similarly, for the US breast lesions we compute two simple morphological features: the lesion area and the major-axis length of the ellipse approximating each lesion. These two features are used as input to an SVM for breast lesion classification. The MORPH features are derived either from manual outlines drawn by experienced medical doctors or from the segmentation results of the DRLSE level set43 and grow-cut44 methods.
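The MORPH features can be sketched from binary masks as below. The paper does not specify how the approximating ellipse is fitted, so this sketch assumes the common second-moment ellipse: for a filled ellipse, the coordinate variance along an axis of semi-length a is a²/4, so the full major-axis length is 4√λ, where λ is the largest eigenvalue of the pixel-coordinate covariance. The slice-stacking volume, isotropic in-plane spacing, and all function names are likewise assumptions.

```python
import math


def region_morphology(mask):
    """Pixel area and major-axis length (pixels) of a binary region in one image."""
    pts = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    area = len(pts)
    mr = sum(r for r, _ in pts) / area
    mc = sum(c for _, c in pts) / area
    # Second central moments (covariance of pixel coordinates).
    s_rr = sum((r - mr) ** 2 for r, _ in pts) / area
    s_cc = sum((c - mc) ** 2 for _, c in pts) / area
    s_rc = sum((r - mr) * (c - mc) for r, c in pts) / area
    # Largest eigenvalue of the 2x2 covariance matrix.
    lam = (s_rr + s_cc) / 2 + math.sqrt(((s_rr - s_cc) / 2) ** 2 + s_rc ** 2)
    return area, 4 * math.sqrt(lam)


def nodule_morph(masks, spacing_mm, thickness_mm):
    """(volume_mm3, max_major_axis_mm, max_area_mm2) from per-slice nodule masks.

    Volume is approximated by summing slice areas times the slice thickness.
    """
    per_slice = [region_morphology(m) for m in masks if any(any(row) for row in m)]
    areas_mm2 = [a * spacing_mm ** 2 for a, _ in per_slice]
    axes_mm = [d * spacing_mm for _, d in per_slice]
    return sum(areas_mm2) * thickness_mm, max(axes_mm), max(areas_mm2)
```

For a breast lesion, `region_morphology` on its single 2D mask gives the two US features (area and major-axis length, to be scaled by the pixel size); for a nodule, `nodule_morph` gives the three CT features that are then passed to the SVM.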

Training and Testing of CADx Algorithms

Since the US breast data are 2D images, training and testing the CADx algorithms on them is relatively simple. On the other hand, the image resolution of lung CT scans is anisotropic between the z (slice thickness) direction and the x-y plane, and the slice thickness varies widely. Because a CT scan consists of 2D transverse slices, a 3D feature computed along the z direction is less reliable than one computed along the x and y directions. This raises the issue of which slices should represent a nodule in the CADx design. Not every member CT slice of a pulmonary nodule is useful, because some slices depict only a small portion of the nodule. Accordingly, it is not known in advance whether using all CT slices benefits lung CADx. Two slice selection strategies, SINGLE and ALL, are therefore implemented in this study for the lung CT CADx problem. In the SINGLE strategy, the middle slice of the nodule is selected as the representative sample, so each ROI sample stands for a distinct nodule. In the ALL strategy, all member slices of each nodule participate in training and testing. In the training stage, all ROIs of the member slices of the training nodules are treated as training data. To predict a testing nodule, a majority voting scheme is used to reach the final classification result: if more than half of the member slices are identified as malignant by the CADx model, the nodule is regarded as malignant. In this study, the SINGLE and ALL strategies are implemented with the SDAE, CURVE, and RANK algorithms to illustrate their classification performance.
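The two strategies can be sketched as follows. The majority rule follows the text (strictly more than half of the slices must be malignant, so a tie falls back to benign); choosing the lower middle slice when a nodule has an even number of slices is an assumption, as the text does not say. All function names are hypothetical.

```python
from collections import defaultdict


def middle_slice(member_slices):
    """SINGLE strategy: the middle member slice (lower middle for even counts)."""
    return member_slices[(len(member_slices) - 1) // 2]


def nodule_vote(slice_predictions):
    """ALL strategy: malignant (1) only if MORE than half the slices are malignant."""
    return 1 if sum(slice_predictions) > len(slice_predictions) / 2 else 0


def vote_by_nodule(slice_nodule_ids, slice_predictions):
    """Group per-slice predictions by nodule id and take the majority vote."""
    votes = defaultdict(list)
    for nid, pred in zip(slice_nodule_ids, slice_predictions):
        votes[nid].append(pred)
    return {nid: nodule_vote(preds) for nid, preds in votes.items()}
```

For example, `vote_by_nodule(["a", "a", "a", "b", "b"], [1, 1, 0, 1, 0])` labels nodule "a" malignant (two of three slices) and nodule "b" benign (a one-to-one tie).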

Additional Information

How to cite this article: Cheng, J.-Z. et al. Computer-Aided Diagnosis with Deep Learning Architecture: Applications to Breast Lesions in US Images and Pulmonary Nodules in CT Scans. Sci. Rep. 6, 24454; doi: 10.1038/srep24454 (2016).

References

1. Doi, K. Computer-aided diagnosis in medical imaging: historical review, current status and future potential. Comput. Med. Imaging Graph. 31, 198–211 (2007).

2. van Ginneken, B., Schaefer-Prokop, C. M. & Prokop, M. Computer-aided diagnosis: how to move from the laboratory to the clinic. Radiology 261, 719–732 (2011).

3. Giger, M. L., Chan, H.-P. & Boone, J. Anniversary paper: history and status of CAD and quantitative image analysis: the role of medical physics and AAPM. Med. Phys. 35, 5799–5820 (2008).

4. Cheng, J.-Z. et al. Computer-aided US diagnosis of breast lesions by using cell-based contour grouping. Radiology 255, 746–754 (2010).

5. Giger, M. L., Karssemeijer, N. & Schnabel, J. A. Breast image analysis for risk assessment, detection, diagnosis, and treatment of cancer. Annu. Rev. Biomed. Eng. 15, 327–357 (2013).

6. Joo, S., Yang, Y. S., Moon, W. K. & Kim, H. C. Computer-aided diagnosis of solid breast nodules: use of an artificial neural network based on multiple sonographic features. IEEE Trans. Med. Imag. 23, 1292–1300 (2004).

7. Chen, C.-M. et al. Breast Lesions on Sonograms: Computer-aided Diagnosis with Nearly Setting-Independent Features and Artificial Neural Networks. Radiology 226, 504–514 (2003).

8. Drukker, K., Sennett, C. & Giger, M. L. Automated method for improving system performance of computer-aided diagnosis in breast ultrasound. IEEE Trans. Med. Imag. 28, 122–128 (2009).

9. Awai, K. et al. Pulmonary Nodules: Estimation of Malignancy at Thin-Section Helical CT—Effect of Computer-aided Diagnosis on Performance of Radiologists. Radiology 239, 276–284 (2006).

10. McCarville, M. B. et al. Distinguishing Benign from Malignant Pulmonary Nodules with Helical Chest CT in Children with Malignant Solid Tumors. Radiology 239, 514–520 (2006).

11. Sluimer, I. C., van Waes, P. F., Viergever, M. A. & van Ginneken, B. Computer-aided diagnosis in high resolution CT of the lungs. Med. Phys. 30, 3081–3090 (2003).

12. Sun, T., Zhang, R., Wang, J., Li, X. & Guo, X. Computer-aided diagnosis for early-stage lung cancer based on longitudinal and balanced data. PLoS ONE 8, e63559 (2013).

13. Way, T. W. et al. Computer-aided diagnosis of pulmonary nodules on CT scans: improvement of classification performance with nodule surface features. Med. Phys. 36, 3086–3098 (2009).

14. Armato III, S. G. & Sensakovic, W. F. Automated lung segmentation for thoracic CT: Impact on computer-aided diagnosis. Acad. Radiol. 11, 1011–1021 (2004).

15. Way, T. W. et al. Computer-aided diagnosis of pulmonary nodules on CT scans: segmentation and classification using 3D active contours. Med. Phys. 33, 2323–2337 (2006).

16. Singh, S., Maxwell, J., Baker, J. A., Nicholas, J. L. & Lo, J. Y. Computer-aided classification of breast masses: Performance and interobserver variability of expert radiologists versus residents. Radiology 258, 73–80 (2011).

17. Sahiner, B. et al. Malignant and Benign Breast Masses on 3D US Volumetric Images: Effect of Computer-aided Diagnosis on Radiologist Accuracy. Radiology 242, 716–724 (2007).

18. Newell, D. et al. Selection of diagnostic features on breast MRI to differentiate between malignant and benign lesions using computer-aided diagnosis: differences in lesions presenting as mass and non-mass-like enhancement. Eur. Radiol. 20, 771–781 (2010).

19. Yang, M. et al. Robust Texture Analysis Using Multi-Resolution Gray-Scale Invariant Features for Breast Sonographic Tumor Diagnosis. IEEE Trans. Med. Imag. 32, 2262–2273 (2013).

20. Gómez, W., Pereira, W. & Infantosi, A. F. C. Analysis of co-occurrence texture statistics as a function of gray-level quantization for classifying breast ultrasound. IEEE Trans. Med. Imag. 31, 1889–1899 (2012).

21. Tourassi, G. D., Frederick, E. D., Markey, M. K. & Floyd Jr, C. E. Application of the mutual information criterion for feature selection in computer-aided diagnosis. Med. Phys. 28, 2394–2402 (2001).

22. Sahiner, B. et al. Computer-aided characterization of mammographic masses: accuracy of mass segmentation and its effects on characterization. IEEE Trans. Med. Imag. 20, 1275–1284 (2001).

23. Cheng, J.-Z. et al. ACCOMP: augmented cell competition algorithm for breast lesion demarcation in sonography. Med. Phys. 37, 6240–6252 (2010).

24. Chen, C.-M. et al. Cell-competition algorithm: A new segmentation algorithm for multiple objects with irregular boundaries in ultrasound images. Ultrasound Med. Biol. 31, 1647–1664 (2005).

25. Arbelaez, P., Maire, M., Fowlkes, C. & Malik, J. Contour detection and hierarchical image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 33, 898–916 (2011).

26. Sorensen, L., Shaker, S. B. & De Bruijne, M. Quantitative analysis of pulmonary emphysema using local binary patterns. IEEE Trans. Med. Imag. 29, 559–569 (2010).

27. Tourassi, G. D. Journey toward Computer-aided Diagnosis: Role of Image Texture Analysis. Radiology 213, 317–320 (1999).

28. Chang, R.-F., Wu, W.-J., Moon, W. K. & Chen, D.-R. Automatic ultrasound segmentation and morphology based diagnosis of solid breast tumors. Breast Cancer Res. Treat. 89, 179–185 (2005).

29. Huang, Y. L. et al. Computer-aided diagnosis using morphological features for classifying breast lesions on ultrasound. Ultrasound Obstet. Gynecol. 32, 565–572 (2008).

30. Alvarenga, A. V., Pereira, W. C., Infantosi, A. F. C. & Azevedo, C. M. Complexity curve and grey level co-occurrence matrix in the texture evaluation of breast tumor on ultrasound images. Med. Phys. 34, 379–387 (2007).

31. Kubota, T., Jerebko, A. K., Dewan, M., Salganicoff, M. & Krishnan, A. Segmentation of pulmonary nodules of various densities with morphological approaches and convexity models. Med. Image Anal. 15, 133–154 (2011).

32. Suk, H.-I., Lee, S.-W. & Shen, D. Latent feature representation with stacked auto-encoder for AD/MCI diagnosis. Brain Struct. Funct. 220, 841–859 (2015).

33. Zhang, W. et al. Deep convolutional neural networks for multi-modality isointense infant brain image segmentation. NeuroImage 108, 214–224 (2015).

34. Shin, H.-C., Orton, M. R., Collins, D. J., Doran, S. J. & Leach, M. O. Stacked autoencoders for unsupervised feature learning and multiple organ detection in a pilot study using 4D patient data. IEEE Trans. Pattern Anal. Mach. Intell. 35, 1930–1943 (2013).

35. Chen, H. et al. Automatic Fetal Ultrasound Standard Plane Detection Using Knowledge Transferred Recurrent Neural Networks. Med. Image Comput. Comput. Assist. Interv. (MICCAI) 9349, 507–514 (2015).

36. Roth, H. et al. Improving Computer-aided Detection using Convolutional Neural Networks and Random View Aggregation. IEEE Trans. Med. Imag. in press, doi:10.1109/tmi.2015.2482920 (2016).

37. Seff, A. et al. Leveraging Mid-Level Semantic Boundary Cues for Automated Lymph Node Detection. Med. Image Comput. Comput. Assist. Interv. (MICCAI) 9350, 53–61 (2015).

38. Tajbakhsh, N., Gotway, M. B. & Liang, J. Computer-aided pulmonary embolism detection using a novel vessel-aligned multi-planar image representation and convolutional neural networks. Med. Image Comput. Comput. Assist. Interv. (MICCAI) 9350, 62–69 (2015).

39. Sermanet, P. et al. Overfeat: Integrated recognition, localization and detection using convolutional networks. arXiv preprint arXiv:1312.6229 (2013).

40. Ciompi, F. et al. Automatic classification of pulmonary peri-fissural nodules in computed tomography using an ensemble of 2D views and a convolutional neural network out-of-the-box. Med. Image Anal. 26, 195–202 (2015).

41. Vincent, P., Larochelle, H., Lajoie, I., Bengio, Y. & Manzagol, P.-A. Stacked denoising autoencoders: Learning useful representations in a deep network with a local denoising criterion. J. Mach. Learn. Res. 11, 3371–3408 (2010).

42. Larochelle, H., Bengio, Y., Louradour, J. & Lamblin, P. Exploring strategies for training deep neural networks. J. Mach. Learn. Res. 10, 1–40 (2009).

43. Li, C., Xu, C., Gui, C. & Fox, M. D. Distance regularized level set evolution and its application to image segmentation. IEEE Trans. Image Process. 19, 3243–3254 (2010).

44. Vezhnevets, V. & Konouchine, V. GrowCut: Interactive multi-label ND image segmentation by cellular automata. Proc. of Graphicon. 1, 150–156 (2005).

45. Bland, J. M. & Altman, D. G. Statistical methods for assessing agreement between two methods of clinical measurement. The Lancet 327, 307–310 (1986).

46. Hinton, G. et al. Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups. IEEE Signal Process. Mag. 29, 82–97 (2012).

47. Armato III, S. G. et al. Lung image database consortium: Developing a resource for the medical imaging research community. Radiology 232, 739–748 (2004).

48. Armato III, S. G. et al. The lung image database consortium (LIDC) and image database resource initiative (IDRI): a completed reference database of lung nodules on CT scans. Med. Phys. 38, 915–931 (2011).

Acknowledgements

This work was supported in part by the National Natural Science Funds of China (Nos 61501305, 61571304 and 81571758), National Science Council, Taiwan (No. NSC100-2221-E-002-030-MY3), the Shenzhen Basic Research Project (Nos JCYJ20150525092940982, JCYJ20130329105033277 and JCYJ20140509172609164), and the Shenzhen-Hong Kong Innovation Circle Funding Program (No. JSE201109150013A).

Author information

Contributions

J.Z.C. implemented the code, designed all the experiments, and drafted the manuscript. Y.H.C. provided the clinical data and perspectives. C.M.C. and D.S. advised on the code development and revised the manuscript. N.D., J.Q., C.M.T., Y.C.C. and C.S.H. participated in the discussion of ideas and reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/

About this article

Cite this article

Cheng, JZ., Ni, D., Chou, YH. et al. Computer-Aided Diagnosis with Deep Learning Architecture: Applications to Breast Lesions in US Images and Pulmonary Nodules in CT Scans. Sci Rep 6, 24454 (2016). https://doi.org/10.1038/srep24454

DOI: https://doi.org/10.1038/srep24454

This article is cited by

-

A validation of an entropy-based artificial intelligence for ultrasound data in breast tumors

BMC Medical Informatics and Decision Making (2024)

-

Defining digital surgery: a SAGES white paper

Surgical Endoscopy (2024)

-

From CNN to Transformer: A Review of Medical Image Segmentation Models

Journal of Imaging Informatics in Medicine (2024)

-

A combined deep CNN-lasso regression feature fusion and classification of MLO and CC view mammogram image

International Journal of System Assurance Engineering and Management (2024)

-

Hybrid Whale Optimization and Canonical Correlation based COVID-19 Classification Approach

Multimedia Tools and Applications (2024)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.