Abstract

The quest to characterize the neural signature distinctive of different basic emotions has recently come under renewed scrutiny. Here we investigated whether facial expressions of different basic emotions modulate the functional connectivity of the amygdala with the rest of the brain. To this end, we presented seventeen healthy participants (8 females) with facial expressions of anger, disgust, fear, happiness, sadness and emotional neutrality and analyzed amygdala’s psychophysiological interaction (PPI). In fact, PPI can reveal how inter-regional amygdala communications change dynamically depending on perception of various emotional expressions to recruit different brain networks, compared to the functional interactions it entertains during perception of neutral expressions. We found that for each emotion the amygdala recruited a distinctive and spatially distributed set of structures to interact with. These changes in amygdala connectional patterns characterize the dynamic signature prototypical of individual emotion processing, and seemingly represent a neural mechanism that serves to implement the distinctive influence that each emotion exerts on perceptual, cognitive, and motor responses. Besides these differences, all emotions enhanced amygdala functional integration with premotor cortices compared to neutral faces. The present findings thus help to reconceptualise the structure-function relation between brain and emotion, from the traditional one-to-one mapping toward a network-based and dynamic perspective.

Introduction

Affective neurosciences are concerned with understanding how emotions are represented in brain activity and how this translates into behaviour, thereby characterizing structure-to-function relations. Traditionally, lesion studies informed early localization approaches by outlining the deficits in emotion processing that follow focal brain damage1. Although lesion studies and neuropsychological observations remain fundamental for inferring structure-function relations and translating correlational observations to causation, functional neuroimaging has become the primary method for characterizing the brain basis of emotions in the past two decades2.

These functional magnetic resonance imaging (fMRI) investigations are improving our understanding of which individual brain regions respond to specific emotions. Moreover, the sizable fMRI literature accumulating in recent years has enabled quantitative meta-analyses to assess the consistency and specificity of the neural signature of emotion processing beyond idiosyncrasies of individual studies. Yet results are mixed, with some original studies and meta-analyses supporting the existence of discrete and non-overlapping neural correlates for different emotions3,4,5,6,7,8, and others reporting little evidence that discrete emotion categories can be localized in distinct and affect-specific brain networks2,9,10.

Common to most of these approaches scrutinizing the neural signatures of basic emotions is the reliance on methods that characterize the static response of individual areas, rather than their dynamic connectional changes. This focus on ‘disconnected’ brain structures, however, can be incomplete in representing how complex functions like emotion processing map into brain activity. In fact, the role of a given structure is partly determined by the dynamic interactions it entertains with other regions, thus shifting the focus toward inter-regional connectivity patterns11,12. Addressing the issue of how emotions are embedded in dynamic neural networks distributed across large spatial scales thus promises to re-conceptualize the longstanding debate on the neural bases of emotions according to a more neurobiologically plausible and contemporary view of the structure-function relation13,14,15. A given area can be involved in processing different emotions rather than only one single emotion16. Nevertheless, the critical neural signature that differentiates one specific emotion from the others can be found in the unique pattern of inter-regional connectivity and synchrony amidst areas. Additionally, emotion processing is a multi-componential phenomenon that entails different functions, from stimulus recognition to subjective experience or feelings, from the enactment of expressive and instrumental behaviours to memory formation17,18. Nevertheless, the bulk of the literature examining the neural correlates of emotion processing concentrated primarily on visual perception of facial expressions19,20, akin to the original studies addressing the existence of discrete basic emotions, which tested recognition of prototypical facial expressions21. 
Faces are indeed one of the most powerful and richest tools in the communication of social and affective signals22 and, besides their affective value, emotionally neutral faces elicit consistent response in emotion-sensitive structures, including the amygdala23,24.

The amygdala is indeed a key structure in the perception and response to emotional signals and has been classically linked to fear processing25,26,27,28. However, more recent findings have extended its functions to recognition of other emotions29,30 or to multiple processes beyond emotion perception, including memory formation, reward processing or social cognition31,32,33. For example, a recent activation likelihood estimation (ALE) meta-analysis reported that five out of the seven basic emotions (anger, disgust, fear, happiness and sadness) demonstrated consistent activation within the amygdala3. Amygdala voxels were found to contribute to the classification of happiness and disgust, in addition to fear, when multivariate pattern analysis (MVPA) techniques were applied to decode brain activity patterns induced by exposure to short movies or mental imagery6. Lastly, lesion studies in patients with selective bilateral amygdala damage reported that some of these patients may still be able to recognize fear from different stimuli, thus showing that fear perception does not invariably depend on the necessary contribution of the amygdala34,35,36,37.

Although this heterogeneity of functions in the amygdala has been initially taken as suggesting that emotions do not have a characteristic or unique neural signature2, these findings can more parsimoniously indicate that a one-to-one mapping between single instances of emotions and brain regions is too simplistic11. Current conceptions emphasize indeed how this functional diversity parallels the intricacy of amygdala circuitry and long-range connections, underscoring that brain regions do not have functions in isolation, but can fulfil multiple functions depending on the networks they belong to31,32,33. Understanding these context- and emotion-dependent changes of amygdala inter-regional connectional patterns is thus of crucial importance given its implication in virtually all psychiatric or neurological states characterized by social deficits, including addiction, autism or anxiety disorders38,39.

A host of techniques can be used to examine how spatially remote brain regions interact during emotion processing by gauging brain activity across time. One of the most popular and successful approaches for examining dynamic and task-related interactions between one region and the rest of the brain is to assess psychophysiological interactions (PPI)40,41. PPI analyses whether an experimental manipulation (e.g., exposure to an emotional stimulus) changes the coupling between a source region and other brain areas. Prior studies have investigated with PPI how exposure to one type of emotional stimuli (e.g., fearful faces) alters amygdala connectivity compared to face perception42, or context-based interregional covariance of amygdala response across broadly different tasks and domains such as emotion perception, attention, decision-making or face perception43,44. To our knowledge, however, the emotion-specific connectional fingerprint of human amygdala and its possible dynamic changes as a function of exposure to different facial expressions has not yet been investigated. To this end, we applied PPI analysis to examine whether the perception of angry, disgusted, fearful, happy and sad facial expressions significantly modulates amygdala co-activation with the rest of the brain compared to neutral faces, and whether these dynamic and emotion-specific changes in amygdala’s connectivity profile characterize distinctively the response to discrete emotional categories. Our focus on perception of facial expressions enabled a closer comparison with previous neuroimaging studies that also studied visual perception of the same emotional signals, albeit with different fMRI methods, and warranted robust signal change in the amygdala, which is a pre-requisite for the successful application of PPI analysis.
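The logic of a PPI model can be illustrated with a minimal sketch. It assumes a simplified setting that is not taken from the study: synthetic time series, a psychological regressor coded +1 (emotional) and −1 (neutral), no haemodynamic convolution, and hypothetical helper names (`solve_linear`, `ols`, `ppi_betas`). The interaction regressor is the product of the mean-centred seed signal and the psychological regressor; its beta estimates how much the seed-target coupling changes with the task.

```python
import random


def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][c] * x[c] for c in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def ols(columns, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y."""
    k = len(columns)
    xtx = [[sum(p * q for p, q in zip(columns[i], columns[j])) for j in range(k)]
           for i in range(k)]
    xty = [sum(p * q for p, q in zip(columns[i], y)) for i in range(k)]
    return solve_linear(xtx, xty)


def ppi_betas(seed, psych, target):
    """Return [intercept, psych, seed, ppi] betas for one target time series."""
    mean_seed = sum(seed) / len(seed)
    seed_c = [s - mean_seed for s in seed]            # physiological regressor
    ppi = [s * p for s, p in zip(seed_c, psych)]      # interaction regressor
    ones = [1.0] * len(seed)
    return ols([ones, psych, seed_c, ppi], target)


# Synthetic example (all values are illustrative assumptions).
rng = random.Random(0)
n_scans = 200
psych = [1.0 if (t // 10) % 2 == 0 else -1.0 for t in range(n_scans)]
seed = [rng.gauss(0.0, 1.0) for _ in range(n_scans)]
mean_seed = sum(seed) / n_scans
# Target region whose coupling with the seed is stronger in the emotional condition.
target = [2.0 + 0.5 * p + 0.3 * (s - mean_seed) + 0.8 * p * (s - mean_seed)
          for s, p in zip(seed, psych)]

betas = ppi_betas(seed, psych, target)
print("PPI beta:", round(betas[3], 3))
```

In this noise-free toy case the interaction beta recovers the coupling change built into the synthetic target. Real pipelines additionally model the haemodynamic response and nuisance regressors, which this sketch omits.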

Results

A preliminary analysis assessed the percentage of blood oxygen level dependent (BOLD) signal change in the amygdala in response to the different stimulation conditions. Figure 1 reports the difference in amygdala fMRI response between each emotional expression and neutral faces. The fMRI data were entered in a repeated-measures Analysis of Variance (ANOVA) with the six-levels within-subjects factor ‘Facial Expressions’ (angry, disgusted, fearful, happy, sad, and neutral facial expressions). The difference in the mean fMRI signal change evoked in the amygdala by the six expressions was statistically significant [F(5, 80) = 3.05, p = 0.001]. Post-hoc comparisons revealed that amygdala activity increased significantly in response to all 5 emotional expressions, as compared to the neutral expression (p ≤ 0.012, by Fisher LSD test), whereas there was no difference in amygdala response amidst emotions (p ≥ 0.39).
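As a sketch of how the degrees of freedom above arise, the following one-way repeated-measures ANOVA partitions the variance for 17 subjects and 6 conditions. The data are synthetic and the function name `rm_anova` is hypothetical; only the design dimensions come from the text, so the resulting F value is not meant to match the reported one.

```python
import random


def rm_anova(data):
    """One-way repeated-measures ANOVA.

    data[s][c] is the value for subject s in condition c.
    Returns (F, df_conditions, df_error).
    """
    n = len(data)        # subjects
    k = len(data[0])     # conditions
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[c] for row in data) / n for c in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_cond - ss_subj   # condition-by-subject residual
    df_cond = k - 1
    df_error = (k - 1) * (n - 1)
    f_value = (ss_cond / df_cond) / (ss_error / df_error)
    return f_value, df_cond, df_error


# Synthetic percent-signal-change values (illustrative assumptions only).
rng = random.Random(1)
condition_effect = [0.30, 0.28, 0.32, 0.27, 0.29, 0.10]  # last entry: neutral
data = []
for _ in range(17):
    subject_offset = rng.gauss(0.0, 0.2)
    data.append([subject_offset + e + rng.gauss(0.0, 0.1) for e in condition_effect])

f_value, df_cond, df_error = rm_anova(data)
print(f"F({df_cond}, {df_error}) = {f_value:.2f}")
```

With 6 conditions and 17 subjects the degrees of freedom are 5 and 80, as in the reported test.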

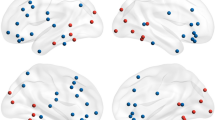

The PPI analysis of fMRI data revealed that neural activity in multiple cortical and subcortical areas co-varied, and was therefore functionally correlated, with the activity in the amygdala during observation of basic emotions compared with neutral expressions. All five basic emotions dynamically modulated amygdala interactions in a distinctive and category-specific manner, thereby carving a functionally specialized and spatially distributed network for each emotion studied, as displayed in Fig. 2 and further detailed in Tables 1, 2, 3, 4, 5.

The emotion-dependent connectivity pattern of the amygdala can be broadly grouped into five sets, based on their anatomical locations. The first set comprises anterior cortical midline regions, including the dorso-medial prefrontal cortex (dmPFC) and anterior cingulate cortex (ACC). Enhanced amygdala integration with these anterior midline regions characterizes the processing of happy expressions. The second set of inter-regional amygdala connectivity engages posterior areas on the medial surface, such as the posterior cingulate cortex (PCC) and the precuneus (PCUN), which are uniquely associated with exposure to angry expressions. The third cluster consists of lateral prefrontal regions, anatomically connected with the amygdala via the uncinate fasciculus45, and also extends more posteriorly to premotor, motor and somatosensory areas. We found evidence of greater amygdala connectivity with the dorso-lateral prefrontal cortex (dlPFC) upon perception of sad faces, whereas the other structures on the lateral surface adjacent to the central sulcus are similarly recruited by all emotions and will be discussed below. The fourth set encompasses subcortical areas, such as the thalamus, the olfactory cortex and tubercles (OC), the nucleus accumbens (NAc), ventral pallidum (VP), and the hippocampus (HPC), all forming the signature pattern of amygdala synchrony during exposure to disgust, whereas the brain stem areas, especially in the pons, were unique to anger. The last set involves posterior visual structures, starting from striate cortex (V1) and extending both ventrally, to include the fusiform gyrus (FG) and the superior temporal sulcus (STS) that are anatomically connected with the amygdala via the longitudinal fasciculus46, and dorsally, up to the supramarginal gyrus (SMG), which are all distinctive of fear processing.

Finally, we investigated the possible presence of voxels that increase their synchrony with the amygdala in response to all five basic emotions, thus constituting the common core of amygdala functional network shared across emotions. As anticipated, 126 voxels matched this criterion and were localized in the left premotor (precentral gyrus PreCG, BA 6; 76 voxels, MNI coordinates: X = −44, Y = 2, Z = 37) and rostral premotor cortex (middle frontal gyrus MFG, BA 8; 48 voxels, MNI coordinates: X = −48, Y = 18, Z = 40), known to be involved in motor resonance47, action planning and emotional contagion48,49 (Fig. 3).
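One simple way to express such a shared-voxel criterion is a logical conjunction of the thresholded PPI maps. The sketch below assumes that this is how overlap is defined, which the text does not specify; the voxel indices are toy values and the function name `conjunction` is hypothetical.

```python
def conjunction(maps):
    """Return the sorted voxel indices that are significant in every map."""
    common = set(maps[0])
    for voxels in maps[1:]:
        common &= set(voxels)
    return sorted(common)


# Toy sets of suprathreshold voxel indices per emotion (illustrative only).
significant = {
    "anger":     {1, 3, 7, 9},
    "disgust":   {2, 3, 7, 8},
    "fear":      {3, 4, 7},
    "happiness": {0, 3, 7, 9},
    "sadness":   {3, 5, 7},
}

shared = conjunction(list(significant.values()))
print("Voxels common to all five emotions:", shared)
```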

Discussion

Evidence for unique neural fingerprints associated with different emotions remains elusive. In recent years, pattern- or network-based perspectives have gained substantial traction in the quest to characterize emotion-specific brain responses, thereby shifting the focus from single brain structures to the connectivity between multiple regions2,6,9,10. While making great strides in overcoming traditional views of one-to-one correspondence between structures and functions, these methods also bear inevitable limitations. One is that they provide a static, instead of dynamic, picture of functional communication between areas. This is particularly noticeable when investigating affective phenomena, owing to the time- and context-dependent role of emotions in flexibly regulating adaptive interactions with our surrounding environment14. Therefore, the possibility to better define the neural signature that differentiates univocally each emotion from the others rests also with our ability to understand dynamic modulations of brain connectivity upon processing different emotions; an issue that has come under desultory scrutiny.

On the other hand, there is ample fMRI literature relying on interregional temporal covariance to profile amygdala functional connectivity31,32,44, including task-modulated and dynamic interactions42,43,44. However, amygdala connectional changes are usually compared across broad domains, such as emotion processing, attention, decision-making, or cognitive control, whereas dynamic modulation of functional interactions induced by different instances of basic emotions has not yet been examined systematically. The present study marks an initial attempt to bridge the gap between these two streams of investigation. In fact, we sought to determine if common or segregated patterns of amygdala co-activations exist amidst various emotions, as far as the perception of facial expressions is concerned. Our focus on PPI provides a dynamic perspective on emotion-specific modulation of amygdala connectivity and eschews possible confounds of more traditional methods to assess functional integration that can sample co-occurrence of activation between two or more brain regions but without linking it specifically to task demands or stimulus properties22,23,35. Moreover, we did not apply a priori models, nor did we constrain any potential paths of functional amygdala interaction, rather adopting a data-driven and whole-brain approach to increase the robustness of analyses. Our results complement and extend with novel findings the current knowledge on the dynamic signature patterns of neural activity that characterize emotion perception, whilst also contributing a more composite perspective on amygdala functions that goes beyond traditional conceptions of it as a fear hub.

First, all five emotional expressions enhanced amygdala activity compared to neutral faces, as reported in the preliminary analysis on fMRI signal change. Our findings are thus in keeping with the results of several neuroimaging investigations showing that the amygdala was comparably active in response to facial expressions of various basic emotions3,30, and that amygdala voxels contribute to the classification of positive as well as negative emotion categories, such as happiness, fear and disgust6. The present results also concur with clinical studies reporting that direct stimulation of the amygdala can induce either pleasant or unpleasant emotions50,51. Notably, neglecting the role of dynamic network changes in inter-regional connectivity has led to interpreting comparable amygdala responsivity to different emotional expressions as evidence against the existence of a unique neural signature for basic emotions2.

Second, the emotional expressions modulated amygdala connectivity with a widespread network of cortical and subcortical regions. When considered collectively across emotions, the array of spatially distributed regions interacting with the amygdala includes structures that have all been previously reported in studies on structural or functional amygdala connectivity7,26,31,32,42,44. Moreover, the spatially distributed network of structures interacting with the amygdala agrees well with its connectional pattern, as previously defined by either structural or functional connectome, and parallels clusters and microstructural distinctions within amygdala sub-regions31,32,52. For example, the basolateral cluster is chiefly connected with, and thought to coordinate, lower and higher level visual and associative sensory areas supporting perception of social signals53. The central-medial cluster is connected to brain areas implicated in motor behaviour and response preparation, as well as visceral and somatosensory processing32. Lastly, the cluster in the superficial nuclei is connected and co-activated with brain areas involved in affiliative and avoidance behaviours or aversion, such as mesolimbic and vmPFC areas, in interoception, olfaction and vegetative processing, such as the NAc, thalamus and brainstem31,32. Our data, acquired with a 1.5 Tesla scanner, cannot afford the resolution required to discriminate between amygdala sub-nuclei, and future studies will investigate whether changes in amygdala functional connectivity emerge from the preferential recruitment of specific amygdala sub-regions in response to distinct emotions. Nevertheless, the present results suggest the importance of examining the concordance of structural, connectional and functional organization to better characterize the heterogeneity of amygdala functions.

Third, and most noteworthy, for each individual emotion studied the amygdala recruited a starkly distinguishable, but spatially distributed, set of structures to interact with. These changes in amygdala functional connectivity upon exposure to a variety of basic emotions characterize the dynamic signature prototypical of individual emotion processing, and seemingly represent a neural mechanism that serves to implement the distinctive influence that each emotion exerts on perceptual, cognitive, and motor responses. Clearly, the present results are limited to the perception of facial expressions. It remains open to further investigation to establish whether similar changes in amygdala interregional connectivity also occur in response to different classes of emotional stimuli54,55 or for other aspects of emotion processing beyond perception56. It also remains unknown whether gender differences affect amygdala’s dynamic connectional changes, as previously reported for amygdala activity57. Happiness and anger were the two emotions inducing higher coupling between amygdala and cortical structures in the medial surface. Nevertheless, there was a sharp distinction along the anterior-posterior axis, with happiness modulating amygdala interactions with left supragenual ACC and bilateral dmPFC, whereas anger enhanced connections with right PCC and PCUN bilaterally. ACC and dmPFC are anatomically interconnected with each other and with the amygdala58,59, their activity supports both empathic processes60 and reward-based decision making61, and their response increases when depressed subjects respond positively to pharmacological treatments62. Moreover, prior effective connectivity as well as meta-analytic fMRI studies found happiness-related activations in the supracallosal ACC and in the dmPFC, with comparable coordinates to those reported here4,7. 
Importantly, in a recent study examining the temporal dynamics of emotion processing, we found that the neuronal generators of EEG responses to happy stimuli were localized in the ACC and dmPFC14. This confirms, with a different method that has higher temporal but lower spatial resolution, the time-dependent interaction of these areas contingent upon processing joyful signals.

As for the integration of amygdala activity with posteromedial cortex during anger perception, the PCC and PCUN are reciprocally connected with other limbic and paralimbic structures, consistent with Papez’s original conceptualization of the PCC as an integral part of the system specialized for emotion processing63. These posteromedial areas are involved in detecting and responding to unexpected environmental events with high motivational value that can require a behavioural change64,65,66, as is appropriate in the case of detecting an angry expression signalling potential harm67. More specifically, aggressive individuals with poor self-control show greater activity in the PCUN, whose metabolism correlates with negative emotionality68. Likewise, PCC and PCUN respond strongly when people are asked to imagine emotionally hurtful events that may induce anger69. Finally, increased anger-selective activation has been found in the PCC and PCUN of participants with preclinical Huntington’s disease, of which irritability is an important manifestation70. In this context, the anger-specific functional integration of amygdala and brain stem, particularly with the pons, is a quite novel finding, though coherent with human and animal models of aggression. In fact, two prior fMRI studies related anger with activity in the pons. Garfinkel et al.71 found that anger primes increase systolic blood pressure, speed up reaction times in participants with high anger traits, and enhance activity in the pons, which is implicated in the regulation of sympathetic arousal. In another study72, angry reactions induced by unfair offers during social interactions activated clusters in the dorso-rostral pons that correspond to the anatomical location of the locus coeruleus73, a major source of noradrenaline in the brain, thus critically involved in arousal and stress responses74,75. 
Moreover, the raphe nuclei are also located in the region of the pons coherent with the loci co-activated with the amygdala in the present study. These nuclei control forebrain serotonin transmission and their modulation influences aggression76,77. Admittedly, there is a clear distinction between the perception of angry expressions, as examined in the present study, and the experience of anger, which was not assessed here. Notably, however, the coherence between the structures found here and those reported in prior findings addressing anger experience is in keeping with the view that neural systems related to the perception of emotional signals, on the one hand, and to the expression and experience of the same emotion, on the other, largely overlap47,49,78,79,80,81,82. Whether amygdala PPI varies similarly during perception with or without experience of the same emotion awaits future investigation.

Fear was the emotion inducing greater synchrony of amygdala activity with lower- as well as higher-level visual areas, including V1, MOG and areas along the ventral stream, such as the MTG, STS and STG. There is convincing evidence that the amygdala exerts a modulatory influence over visual areas in response to fearful stimuli83,84 and that this effect is abolished by amygdala lesions, even though visual areas remain functionally and structurally intact85. This mechanism enables the amygdala to provide bottom-up attentional modulation toward fearful stimuli, thereby increasing the likelihood that such signals automatically summon attention and reach awareness79,86. These functional effects are wired in the amygdala back-projections, as direct monosynaptic connections from amygdala neurons reach all cortical stages along the ventral visual system in a topographically-organized manner, including V187. Our results thus extend prior findings showing time- and emotion-dependent dynamic interactions of amygdala and visual areas specific for fear processing.

The perception of disgust enhanced the interaction between the amygdala and cortico-subcortical systems involved in motivating avoidant behaviours, olfaction and memory31. These interactions are compatible with the self-boundary function of disgust and its role in prioritizing immediate action generation through revulsion or gag reflex that protect the body from offensive or contaminating entities88,89. The enhanced amygdala connection with the olfactory (pyriform) cortex is clearly coherent with the ancestral origin of disgust that is anchored on chemical senses, and fits well with behavioural evidence that recognition of disgusted faces is improved by the presentation of an olfactory stimulus irrespective of its emotional valence90. Amygdala integration with the NAc and ventral pallidum (VP) finds direct support in rodent studies on disgust, showing that lesions and temporary inactivation of either structure generate intense sensory disgust91. The thalamus has been found to respond selectively to disgust in meta-analyses3,10 or to images of rotten food and mutilation92, whereas MVPA found that voxels within the thalamus, especially the ventral anterior nuclei, are particularly accurate in classifying disgust movie clips6. Therefore, amygdala connections with various thalamic regions during disgust processing probably serve to selectively amplify and dampen early sensory input to shape environmental perception, whereas enhanced integration with hippocampus (HPC) and parahippocampal cortex (PHC) is functional to store these stimulus-response contingencies in memory. In fact, current evidence seems to support a special role for memory in disgust processing, which possibly originates from its role in conditioned taste aversion. For example, disgusting stimuli enhance episodic memory and seem to have a special salience in memory relative to other equally arousing and negative emotions, such as fear93,94. 
Moreover, a recent fMRI study found that disgusting stimuli were the hardest to forget compared to fearful and sad stimuli, and increased amygdala activity along with the hippocampus95.

Sadness seems to rely on tighter and almost exclusive amygdala-dlPFC interactions as compared to the wider network of amygdala co-activations during perception of the other emotions. Although the dlPFC subserves multiple functions and seems to regulate various emotional states96,97,98, recent studies implicate the dlPFC in sadness and depression99, especially during cognitive reappraisal and regulation of this emotion100. In such tasks, reappraisal minimizes the experience of negative affect after viewing sad stimuli and amygdala responses are dampened, possibly via the inhibitory function of the dlPFC101. Likewise, sadness-specific abnormalities and decreased response in the dlPFC have been reported in patients with bipolar disorder and depression, suggesting difficulties in integrating cognitive appraisal with sad experiences in such patients102,103. Whether the functional connectivity of the amygdala is truly limited to the interaction with dlPFC during sad perception requires further investigation with different stimuli, tasks, and methods of analysis, even though a previous meta-analysis also pointed to a relatively isolated system for sad processing10. Moreover, we previously observed with EEG that the temporal course of neural responses to sad images has a much slower and smoother temporal unfolding compared to other emotions14. Since the stimuli presented in this study were relatively brief, it may be that the optimal temporal interval to recruit more widespread co-activations with amygdala should extend beyond the time window of the current study, in terms of both stimulus exposure and ISI.

Fourth, and lastly, we investigated areas interacting with the amygdala in all five emotions with respect to neutral faces. All queried emotions enhanced indeed amygdala functional integration with premotor cortices. The coupling of amygdala and motor areas outlines the influence of different emotions in fostering action preparation and planning, as well as motor resonance. In fact, observing emotional stimuli increases motor excitability relative to neutral images81,82,104 and may reflect approach and avoidance preparation105, motor mimicry and emotional contagion48,49,54,106,107,108,109,110. Such functional interaction is consistent with the anatomical evidence indicating white matter connectivity between the amygdala and the motor regions in nonhuman primates111 and humans112.

In sum, our study demonstrates that anatomically distributed networks of amygdala interactions characterize the dynamic neural signature associated with the perception of individual facial expressions of emotions, and contributes to understanding the paths of information flow through these interconnected regions. It also provides an initial example of using the ‘task connectome’ derived from PPI analyses as a systematic framework towards understanding the dynamic connectivity underpinning different emotional and cognitive functions or task contexts.

Methods

Participants

Seventeen right-handed participants (8 females) with normal or corrected-to-normal vision participated in this study (age M = 21.7, S.D. = 1.4). All subjects were screened for fMRI compatibility and had no personal history of neurological or psychiatric illness, drug or alcohol abuse, or current medication. All experimental methods and protocols in the study were performed in accordance with the ethical standards laid down in the 1964 Declaration of Helsinki and approved by the bioethics committee of the University of Turin under the project ‘Neuropsychological bases of emotional and social perception’. All participants provided written informed consent also approved by the same committee.

Stimuli

The stimuli were taken from Ekman’s series113 and displayed facial expressions of anger, disgust, fear, happiness, sadness and emotional neutrality. A total of four different Caucasian actors (2 females) each expressing all 5 emotions and neutral faces were used, for a total of 24 images (6 conditions × 4 actors). All stimuli subtended a visual angle of 8° × 10.5° from a viewing distance of 50 cm from the screen of a 21-in LCD monitor and had a mean luminance of 15 cd/m².
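For readers who wish to reproduce the stimulus geometry, the physical size corresponding to a visual angle follows from size = 2·d·tan(θ/2). The short sketch below applies this standard formula to the values given above; the function name `stimulus_size_cm` is hypothetical.

```python
import math


def stimulus_size_cm(angle_deg, distance_cm):
    """Physical extent that subtends `angle_deg` at `distance_cm`."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


width_cm = stimulus_size_cm(8.0, 50.0)    # horizontal extent
height_cm = stimulus_size_cm(10.5, 50.0)  # vertical extent
print(f"{width_cm:.2f} cm x {height_cm:.2f} cm")
```

At a 50 cm viewing distance this corresponds to an image of roughly 7 cm by 9.2 cm.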

Procedure

The experiment consisted of an event-related design divided in four runs. In each run, lasting approximately 8 min, all 24 stimuli were presented in random order, for a total of 96 trials across the four runs and 16 repetitions of each facial expression. Each trial started with a central fixation cross lasting for 1 second against a dark background, followed by stimulus presentation for 1 second. The inter-stimulus interval (ISI) varied randomly within a 16–20 second range, during which the central cross remained present on the screen. A PC running E-prime controlled stimulus presentation.
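The design described above can be sketched as a trial schedule. The sketch assumes that the ISI is drawn uniformly between 16 and 20 seconds and that each trial consists of fixation, stimulus and ISI in sequence; the actor labels and the function name `build_run` are hypothetical.

```python
import random
from collections import Counter

EXPRESSIONS = ["anger", "disgust", "fear", "happiness", "sadness", "neutral"]
ACTORS = ["actor_1", "actor_2", "actor_3", "actor_4"]  # hypothetical labels
FIXATION_S = 1.0
STIMULUS_S = 1.0
ISI_RANGE_S = (16.0, 20.0)
N_RUNS = 4


def build_run(rng):
    """Return (trials, run_duration_s) for one run of 24 randomized stimuli."""
    stimuli = [(e, a) for e in EXPRESSIONS for a in ACTORS]
    rng.shuffle(stimuli)
    trials, t = [], 0.0
    for expression, actor in stimuli:
        onset = t + FIXATION_S                 # stimulus follows the fixation cross
        isi = rng.uniform(*ISI_RANGE_S)        # assumed uniform jitter
        trials.append({"expression": expression, "actor": actor,
                       "onset_s": onset, "isi_s": isi})
        t = onset + STIMULUS_S + isi
    return trials, t


rng = random.Random(42)
runs = [build_run(rng) for _ in range(N_RUNS)]
all_trials = [trial for trials, _ in runs for trial in trials]
counts = Counter(trial["expression"] for trial in all_trials)
durations_min = [duration / 60.0 for _, duration in runs]

print(len(all_trials), "trials;", dict(counts))
print("Run durations (min):", [round(d, 1) for d in durations_min])
```

Under these assumptions each run lasts between 7.2 and 8.8 minutes, about 8 minutes on average, with 16 presentations of each expression across the experiment.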

Participants lay supine in the scanner with head movements minimized by an adjustable padded head holder. A colour LCD screen projected the stimuli onto a rear projection screen in the bore of the magnet. Participants viewed the screen via an angled mirror system. They were instructed to fixate the central cross during a passive exposure paradigm. This enabled us to record neural activity related to spontaneous emotion processing that was unaffected by spurious factors such as deliberate processing, top-down attentional modulation or action execution and button press. Otherwise, the interpretation of activations in motor and somatosensory cortices, or in the dorsal fronto-parietal attentional network, as predicted from previous studies, would have been problematic had they been concomitant with these additional task demands. To verify that participants viewed the stimuli, after each run participants were asked whether they had paid attention to the stimuli. All participants responded positively after every run.

Data Acquisition

Data acquisition was performed on a 1.5 Tesla INTERA™ scanner (Philips Medical Systems) with a SENSE high-field, high-resolution (MRIDC) head coil that was optimized for functional imaging. The functional T2*-weighted images were acquired using echoplanar (EPI) sequences (TR/TE/flip angle = 2000 ms/50 ms/90°; FoV = 256 mm; acquisition matrix = 64 × 64; 19 axial slices with 5 mm thickness and 1 mm gap). A total of 240 volumes were acquired covering the whole brain. Two scans were added at the beginning of the functional scanning session and the data discarded to reach a steady-state magnetization before acquiring the experimental data.
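The acquisition parameters imply a few derived quantities that are useful when checking the design. The short sketch below computes them under the assumption that the 240 volumes refer to a single run; the variable names are illustrative.

```python
TR_S = 2.0                 # repetition time in seconds
N_VOLUMES = 240            # assumed to be the number of volumes per run
FOV_MM = 256.0
MATRIX = 64
N_SLICES, THICKNESS_MM, GAP_MM = 19, 5.0, 1.0

run_duration_s = N_VOLUMES * TR_S           # acquisition time of one run
in_plane_voxel_mm = FOV_MM / MATRIX         # in-plane voxel edge
# Slab extent along the slice axis: 19 slices plus the 18 gaps between them.
coverage_mm = N_SLICES * THICKNESS_MM + (N_SLICES - 1) * GAP_MM

print(run_duration_s / 60.0, in_plane_voxel_mm, coverage_mm)
```

Under this assumption each run lasts 8 minutes, the in-plane voxel size is 4 mm, and the slices span 113 mm.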

In the same session, a set of three-dimensional high-resolution T1-weighted structural images was acquired for each participant using a Fast Field Echo (FFE) sequence (TR/TE/flip angle = 25 ms/7.9 ms/30°; FoV = 256 mm; acquisition matrix = 256 × 256; 160 contiguous 1 mm sagittal slices; isotropic voxel size = 1 × 1 × 1 mm).

Preprocessing

Functional data were preprocessed using FSL 5.0.9 (www.fmrib.ox.ac.uk/fsl)114. Preprocessing steps included skull extraction using the Brain Extraction Tool (BET – FSL), bulk motion correction using rigid-body transformation (6 degrees of freedom), spatial smoothing at 7 mm, and high-pass temporal filtering with a cutoff of 128 seconds. Finally, functional and structural data were registered to standard space as follows. A Boundary-Based Registration approach was used to co-register each functional EPI to the corresponding T1-weighted image, and the same T1-weighted image was then co-registered to the MNI152 2 mm³ template using FMRIB’s Linear Image Registration Tool (FLIRT – FSL). All participants maintained head motion <3 mm for all scans.
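For readers who wish to relate the smoothing and filtering settings to other software, the following sketch converts them into commonly used equivalents. It assumes that the 7 mm value is the full width at half maximum (FWHM) of a Gaussian kernel, which the text does not state explicitly, and it does not reproduce FSL's internal conventions.

```python
import math

FWHM_MM = 7.0          # assumed to be the Gaussian FWHM
HP_CUTOFF_S = 128.0    # high-pass cutoff period from the text
TR_S = 2.0

# Standard Gaussian relation: FWHM = 2 * sqrt(2 * ln 2) * sigma
sigma_mm = FWHM_MM / (2.0 * math.sqrt(2.0 * math.log(2.0)))
cutoff_hz = 1.0 / HP_CUTOFF_S          # cutoff expressed as a frequency
cutoff_volumes = HP_CUTOFF_S / TR_S    # cutoff period expressed in volumes

print(f"sigma = {sigma_mm:.3f} mm, cutoff = {cutoff_hz:.4f} Hz "
      f"({cutoff_volumes:.0f} volumes)")
```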

The anatomical T1-weighted images were preprocessed using the recon-all pipeline (FreeSurfer115) to retrieve the anatomical parcellation and localize the regions of interest (ROIs) in the amygdalae of both hemispheres in each subject. Care was taken to ensure a subject-specific anatomical fit of the ROIs. The FSL transformation matrices were then used to bring the FreeSurfer-parcellated brain of each participant, with the localized amygdala ROIs, into the MNI152 2 mm³ template. After standardization, the ROIs were combined into a single probabilistic map representing the spatial overlap of the amygdalae, as defined anatomically in individual subjects. The ROIs showed excellent spatial overlap across all subjects and their volumes were consistent with the mean volumes reported in prior MRI and post-mortem studies, indicating a conservative volumetric definition of the amygdalae116. Lastly, this map was further thresholded to include only voxels common to the ROIs of at least 14 participants (82%).
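The thresholding of the probabilistic map can be illustrated with a minimal sketch. It represents each subject's ROI as a set of voxel coordinates in standard space and keeps voxels present in at least 14 of 17 subjects; the data and the function name are toy examples, not the authors' implementation.

```python
from collections import Counter

def overlap_mask(subject_rois, min_subjects):
    """Voxels that belong to the ROI of at least `min_subjects` subjects."""
    counts = Counter(voxel for roi in subject_rois for voxel in set(roi))
    return {voxel for voxel, n in counts.items() if n >= min_subjects}

# Toy data: three voxels with different degrees of overlap across 17 subjects.
core, edge, outlier = (30, 60, 27), (31, 60, 27), (33, 60, 27)
subject_rois = []
for subject in range(17):
    roi = {core}                 # present in all 17 subjects
    if subject < 14:
        roi.add(edge)            # present in 14 subjects
    if subject < 13:
        roi.add(outlier)         # present in 13 subjects
    subject_rois.append(roi)

group_roi = overlap_mask(subject_rois, min_subjects=14)
print(sorted(group_roi))
```

Only the first two voxels survive, since 14 of 17 subjects corresponds to the 82% criterion.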

fMRI Signal Change in the Amygdala

Before entering the fMRI data into the PPI analysis, we first assessed the response in the amygdala to the different facial expressions presented. As previously established for similar cases117, we extracted for each participant the time course of the BOLD response from the amygdala ROIs. The fMRI response was expressed as percentage of BOLD signal change from baseline, defined as the average activity over the whole time course, applying the following formula:

$$\text{BOLD signal change (\%)} = \frac{S - S_m}{S_m} \times 100$$

where $S$ is the BOLD signal at each time point, and $S_m$ is the average fMRI response over the whole time course. Lastly, the mean emotion-specific fMRI signal change was computed for each participant and expression independently (i.e., angry, disgusted, fearful, happy, sad, and neutral facial expressions), reflecting the mean peak of stimulus-evoked activity of all voxels in the ROI over a temporal window of 16 sec from stimulus onset.
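The computation of percentage signal change relative to the mean of the whole time course, followed by averaging of the peak response in a 16-second window, can be sketched as follows. The interpretation of "mean peak" as the per-trial maximum averaged across trials is an assumption, and the toy time course and function names are illustrative.

```python
TR_S = 2.0
WINDOW_S = 16.0

def percent_signal_change(timecourse):
    """Express each sample as % change from the mean of the whole time course."""
    baseline = sum(timecourse) / len(timecourse)
    return [100.0 * (s - baseline) / baseline for s in timecourse]

def mean_peak(psc, onsets_vol, window_vols):
    """Average, across trials, of the peak % change after each onset."""
    peaks = [max(psc[onset:onset + window_vols]) for onset in onsets_vol]
    return sum(peaks) / len(peaks)

# Toy ROI-averaged time course with two evoked responses.
timecourse = [100.0] * 20
timecourse[3] = 110.0
timecourse[13] = 110.0

psc = percent_signal_change(timecourse)
response = mean_peak(psc, onsets_vol=[2, 12], window_vols=int(WINDOW_S / TR_S))
print(f"{response:.2f} %")
```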

PPI Analysis

PPI reveals how activity in a particular brain region (the amygdala in the present case) is differentially correlated (i.e., functionally interacts) with that in other brain regions depending on the experimental conditions (i.e., exposure to different facial expressions)40,41. First, the mean time series of left and right amygdala activity were extracted from the ROIs for each subject and pooled together, thus creating the physiological regressor. To obtain the final PPI results, we implemented FSL’s 3-level analysis approach. At the first level, we specified a general linear model (GLM) including the 6 facial expressions of the experimental design (the psychological regressors), convolved with a double-gamma haemodynamic response function (HRF) and zero-centred about the minimum and maximum value of the data, and the (demeaned) amygdala time course. Next, we set the interaction regressor (PPI) between the amygdala time course and the stimulus regressor modelling each of the 6 expressions118. This interaction regressor captures the emotion-dependent connectivity of the amygdala with each voxel of the brain. Lastly, we computed a GLM contrast comparing each emotion’s PPI against the PPI for neutral expressions. This enabled us to isolate the unique contribution of single emotions by discounting the unspecific effects of face perception.
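The construction of the three first-level regressors for one expression can be illustrated with a minimal sketch. It is not FSL's implementation: the double-gamma parameters below follow a commonly used canonical form and are assumed, the 1-second stimulus is approximated by a single-volume event, and all function names are hypothetical.

```python
import math

TR_S = 2.0

def double_gamma_hrf(t):
    """Double-gamma HRF at time t (s); shape parameters are assumed values."""
    if t <= 0:
        return 0.0
    peak = t ** 5 * math.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * math.exp(-t) / math.gamma(16)
    return peak - undershoot / 6.0

def convolve(events, kernel):
    """Causal discrete convolution truncated to the length of `events`."""
    out = [0.0] * len(events)
    for i, amplitude in enumerate(events):
        for j, k in enumerate(kernel):
            if amplitude and i + j < len(out):
                out[i + j] += amplitude * k
    return out

def centre_min_max(x):
    """Zero-centre about the midpoint between minimum and maximum."""
    mid = (min(x) + max(x)) / 2.0
    return [v - mid for v in x]

def demean(x):
    mean = sum(x) / len(x)
    return [v - mean for v in x]

def ppi_regressors(onsets_vol, seed_timecourse):
    """Return (psychological, physiological, interaction) regressors."""
    events = [0.0] * len(seed_timecourse)
    for onset in onsets_vol:
        events[onset] = 1.0
    kernel = [double_gamma_hrf(i * TR_S) for i in range(16)]
    psych = centre_min_max(convolve(events, kernel))
    physio = demean(seed_timecourse)
    interaction = [p * s for p, s in zip(psych, physio)]
    return psych, physio, interaction

# Toy seed time course and onsets for a single expression.
seed = [100.0 + (i % 5) for i in range(40)]
psych, physio, ppi = ppi_regressors([2, 12, 22], seed)
```

In a full model, one such interaction regressor would be built per expression, and a contrast of +1 on an emotion's PPI and −1 on the neutral PPI would correspond to the emotion versus neutral comparison described above.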

At the second level, a fixed-effects analysis combined the connectivity data of each subject across the four runs. At the third level (group-level analysis), the contrasts obtained for each subject (each emotion vs. neutral) were modelled for between-subjects variance using mixed effects (FLAME1 option), and the results were cluster-corrected for multiple comparisons at the whole-brain level (Z > 2, p < 0.05). A preliminary analysis did not reveal any significant gender difference in amygdala connections. Therefore, the data of male and female participants were considered together. The resulting voxel-wise contrast maps were then loaded into the MATLAB environment using the xjView toolbox (http://www.alivelearn.net/xjview). This enabled us to localize the significant clusters anatomically via an MNI-based template atlas, to identify active voxels common to all emotions (using the option “common region” in xjView), and to obtain the number of voxels in each of these clusters, as reported in Tables 1–5. For display purposes, the activation maps were uploaded in MRIcroGL and the 3D visualization option applied (http://www.mccauslandcenter.sc.edu/mricrogl/home).
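The identification of voxels common to all emotions amounts to intersecting the sets of significant voxels across the five contrast maps. The sketch below illustrates this logic on toy data; it does not reproduce xjView's "common region" option or the cluster correction, and the maps, coordinates, and function names are invented for illustration.

```python
def significant_voxels(z_map, z_threshold=2.0):
    """Voxels whose Z value exceeds the threshold."""
    return {voxel for voxel, z in z_map.items() if z > z_threshold}

def common_region(z_maps, z_threshold=2.0):
    """Voxels that are significant in every map."""
    voxel_sets = [significant_voxels(m, z_threshold) for m in z_maps.values()]
    return set.intersection(*voxel_sets)

# Toy Z maps (voxel coordinate -> Z) for each emotion-vs-neutral contrast.
shared, partial = (10, 20, 30), (11, 20, 30)
toy_maps = {
    "anger":     {shared: 3.1, partial: 2.5},
    "disgust":   {shared: 2.8, partial: 2.2},
    "fear":      {shared: 3.4, partial: 2.9},
    "happiness": {shared: 2.6, partial: 2.4},
    "sadness":   {shared: 2.3, partial: 1.1},
}

common = common_region(toy_maps)
print(len(common), sorted(common))
```

The size of the resulting set gives the voxel count of the common region, analogous to the cluster sizes reported in the tables.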

Additional Information

How to cite this article: Diano, M. et al. Dynamic Changes in Amygdala Psychophysiological Connectivity Reveal Distinct Neural Networks for Facial Expressions of Basic Emotions. Sci. Rep. 7, 45260; doi: 10.1038/srep45260 (2017).

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

Feinstein, J. S. Lesion studies of human emotion and feeling. Current opinion in neurobiology 23, 304–309, doi: 10.1016/j.conb.2012.12.007 (2013).

Lindquist, K. A., Wager, T. D., Kober, H., Bliss-Moreau, E. & Barrett, L. F. The brain basis of emotion: a meta-analytic review. The Behavioral and brain sciences 35, 121–143, doi: 10.1017/S0140525X11000446 (2012).

Kirby, L. A. J. & Robinson, J. L. Affective mapping: An activation likelihood estimation (ALE) meta-analysis. Brain and cognition, doi: 10.1016/j.bandc.2015.04.006 (2015).

Murphy, F. C., Nimmo-Smith, I. & Lawrence, A. D. Functional neuroanatomy of emotions: a meta-analysis. Cognitive, affective & behavioral neuroscience 3, 207–233 (2003).

Phan, K. L., Wager, T., Taylor, S. F. & Liberzon, I. Functional neuroanatomy of emotion: a meta-analysis of emotion activation studies in PET and fMRI. NeuroImage 16, 331–348, doi: 10.1006/nimg.2002.1087 (2002).

Saarimaki, H. et al. Discrete Neural Signatures of Basic Emotions. Cerebral cortex 1–11, doi: 10.1093/cercor/bhv086 (2015).

Tettamanti, M. et al. Distinct pathways of neural coupling for different basic emotions. NeuroImage 59, 1804–1817, doi: 10.1016/j.neuroimage.2011.08.018 (2012).

Vytal, K. & Hamann, S. Neuroimaging support for discrete neural correlates of basic emotions: a voxel-based meta-analysis. Journal of cognitive neuroscience 22, 2864–2885, doi: 10.1162/jocn.2009.21366 (2010).

Kober, H. et al. Functional grouping and cortical-subcortical interactions in emotion: a meta-analysis of neuroimaging studies. NeuroImage 42, 998–1031, doi: 10.1016/j.neuroimage.2008.03.059 (2008).

Wager, T. D. et al. A Bayesian Model of Category-Specific Emotional Brain Responses. PLOS Computational Biology 11, e1004066, doi: 10.1371/journal.pcbi.1004066 (2015).

Pessoa, L. Understanding brain networks and brain organization. Physics of Life Reviews 11, 400–435, doi: 10.1016/j.plrev.2014.03.005 (2014).

Sporns, O. Contributions and challenges for network models in cognitive neuroscience. Nature neuroscience 17, 652–660, doi: 10.1038/nn.3690 (2014).

Cauda, F., Costa, T. & Tamietto, M. Beyond localized and distributed accounts of brain functions. Comment on “Understanding brain networks and brain organization” by Pessoa. Phys Life Rev 11, 442–443, doi: 10.1016/j.plrev.2014.06.018 (2014).

Costa, T. et al. Temporal and spatial neural dynamics in the perception of basic emotions from complex scenes. Social cognitive and affective neuroscience 9, 1690–1703, doi: 10.1093/scan/nst164 (2014).

Tamietto, M. & Morrone, M. C. Visual Plasticity: Blindsight Bridges Anatomy and Function in the Visual System. Current biology: CB 26, R70–73, doi: 10.1016/j.cub.2015.11.026 (2016).

Scarantino, A. Functional specialization does not require a one-to-one mapping between brain regions and emotions. The Behavioral and brain sciences 35, 161–162, doi: 10.1017/S0140525X11001749 (2012).

Dolan, R. J. Emotion, cognition, and behavior. Science 298, 1191–1194 (2002).

Kragel, P. A. & LaBar, K. S. Decoding the Nature of Emotion in the Brain. Trends in cognitive sciences 20, 444–455, doi: 10.1016/j.tics.2016.03.011 (2016).

Adolphs, R. Recognizing emotion from facial expressions: psychological and neurological mechanisms. Behav Cogn Neurosci Rev 1, 21–62 (2002).

D’Agata, F. et al. The recognition of facial emotions in spinocerebellar ataxia patients. Cerebellum 10, 600–610, doi: 10.1007/s12311-011-0276-z (2011).

Ekman, P. Facial expression and emotion. Am Psychol 48, 384–392 (1993).

Jack, R. E. & Schyns, P. G. The Human Face as a Dynamic Tool for Social Communication. Current Biology 25, R621–R634, doi: 10.1016/j.cub.2015.05.052 (2015).

Burra, N. et al. Amygdala activation for eye contact despite complete cortical blindness. The Journal of neuroscience: the official journal of the Society for Neuroscience 33, 10483–10489, doi: 10.1523/JNEUROSCI.3994-12.2013 (2013).

Fried, I., MacDonald, K. A. & Wilson, C. L. Single neuron activity in human hippocampus and amygdala during recognition of faces and objects. Neuron 18, 753–765 (1997).

Diano, M., Celeghin, A., Bagnis, A. & Tamietto, M. Amygdala Response to Emotional Stimuli without Awareness: Facts and Interpretations. Frontiers in psychology 7, 2029, doi: 10.3389/fpsyg.2016.02029 (2017).

Whalen, P. J. & Phelps, E. A. The Human Amygdala (Guilford Press, New York, 2009).

LeDoux, J. E. The emotional brain (Simon & Schuster, 1996).

Weiskrantz, L. Behavioral changes associated with ablation of the amygdaloid complex in monkeys. J Comp Physiol Psychol 49, 381–391 (1956).

Phelps, E. A. & LeDoux, J. E. Contributions of the amygdala to emotion processing: from animal models to human behavior. Neuron 48, 175–187 (2005).

Sergerie, K., Chochol, C. & Armony, J. L. The role of the amygdala in emotional processing: a quantitative meta-analysis of functional neuroimaging studies. Neuroscience and biobehavioral reviews 32, 811–830, doi: 10.1016/j.neubiorev.2007.12.002 (2008).

Bickart, K. C., Hollenbeck, M. C., Barrett, L. F. & Dickerson, B. C. Intrinsic Amygdala-Cortical Functional Connectivity Predicts Social Network Size in Humans. Journal of Neuroscience 32, 14729–14741, doi: 10.1523/JNEUROSCI.1599-12.2012 (2012).

Bzdok, D., Laird, A. R., Zilles, K., Fox, P. T. & Eickhoff, S. B. An investigation of the structural, connectional, and functional subspecialization in the human amygdala. Human brain mapping 34, 3247–3266, doi: 10.1002/hbm.22138 (2013).

Robinson, J. L., Laird, A. R., Glahn, D. C., Lovallo, W. R. & Fox, P. T. Metaanalytic connectivity modeling: Delineating the functional connectivity of the human amygdala. Human brain mapping, doi: 10.1002/hbm.20854 (2009).

Atkinson, A. P., Heberlein, A. S. & Adolphs, R. Spared ability to recognise fear from static and moving whole-body cues following bilateral amygdala damage. Neuropsychologia 45, 2772–2782 (2007).

Becker, B. et al. Fear processing and social networking in the absence of a functional amygdala. Biological psychiatry 72, 70–77, doi: 10.1016/j.biopsych.2011.11.024 (2012).

de Gelder, B. et al. The role of human basolateral amygdala in ambiguous social threat perception. Cortex; a journal devoted to the study of the nervous system and behavior 52, 28–34, doi: 10.1016/j.cortex.2013.12.010 (2014).

Tsuchiya, N., Moradi, F., Felsen, C., Yamazaki, M. & Adolphs, R. Intact rapid detection of fearful faces in the absence of the amygdala. Nature neuroscience 12, 1224–1225, doi: 10.1038/nn.2380 (2009).

Kennedy, D. P. & Adolphs, R. The social brain in psychiatric and neurological disorders. Trends in cognitive sciences 16, 559–572, doi: 10.1016/j.tics.2012.09.006 (2012).

Koelkebeck, K., Uwatoko, T., Tanaka, J. & Kret, M. E. How culture shapes social cognition deficits in mental disorders: A review. Social neuroscience 1–11, doi: 10.1080/17470919.2016.1155482 (2016).

Friston, K. J. et al. Psychophysiological and modulatory interactions in neuroimaging. NeuroImage 6, 218–229, doi: 10.1006/nimg.1997.0291 (1997).

Gitelman, D. R., Penny, W. D., Ashburner, J. & Friston, K. J. Modeling regional and psychophysiologic interactions in fMRI: the importance of hemodynamic deconvolution. NeuroImage 19, 200–207 (2003).

Di, X., Huang, J. & Biswal, B. B. Task modulated brain connectivity of the amygdala: a meta-analysis of psychophysiological interactions. Brain structure & function, doi: 10.1007/s00429-016-1239-4 (2016).

Lapate, R. C. et al. Awareness of Emotional Stimuli Determines the Behavioral Consequences of Amygdala Activation and Amygdala-Prefrontal Connectivity. Scientific reports 6, 25826, doi: 10.1038/srep25826 (2016).

Smith, D. V., Gseir, M., Speer, M. E. & Delgado, M. R. Toward a cumulative science of functional integration: A meta-analysis of psychophysiological interactions. Human brain mapping 37, 2904–2917, doi: 10.1002/hbm.23216 (2016).

Thiebaut de Schotten, M., Dell’Acqua, F., Valabregue, R. & Catani, M. Monkey to human comparative anatomy of the frontal lobe association tracts. Cortex; a journal devoted to the study of the nervous system and behavior 48, 82–96, doi: 10.1016/j.cortex.2011.10.001 (2012).

Catani, M., Jones, D. K., Donato, R. & Ffytche, D. H. Occipito-temporal connections in the human brain. Brain: a journal of neurology 126, 2093–2107, doi: 10.1093/brain/awg203 (2003).

Carr, L., Iacoboni, M., Dubeau, M. C., Mazziotta, J. C. & Lenzi, G. L. Neural mechanisms of empathy in humans: a relay from neural systems for imitation to limbic areas. Proceedings of the National Academy of Sciences of the United States of America 100, 5497–5502, doi: 10.1073/pnas.0935845100 (2003).

de Gelder, B., Snyder, J., Greve, D., Gerard, G. & Hadjikhani, N. Fear fosters flight: a mechanism for fear contagion when perceiving emotion expressed by a whole body. Proceedings of the National Academy of Sciences, USA 101, 16701–16706 (2004).

Van den Stock, J. et al. Cortico-subcortical visual, somatosensory, and motor activations for perceiving dynamic whole-body emotional expressions with and without striate cortex (V1). Proceedings of the National Academy of Sciences of the United States of America 108, 16188–16193, doi: 10.1073/pnas.1107214108 (2011).

Murray, R. J., Brosch, T. & Sander, D. The functional profile of the human amygdala in affective processing: insights from intracranial recordings. Cortex; a journal devoted to the study of the nervous system and behavior 60, 10–33, doi: 10.1016/j.cortex.2014.06.010 (2014).

Lanteaume, L. et al. Emotion induction after direct intracerebral stimulations of human amygdala. Cerebral cortex 17, 1307–1313, doi: 10.1093/cercor/bhl041 (2007).

Balderston, N. L., Schultz, D. H., Hopkins, L. & Helmstetter, F. J. Functionally distinct amygdala subregions identified using DTI and high-resolution fMRI. Social cognitive and affective neuroscience 10, 1615–1622, doi: 10.1093/scan/nsv055 (2015).

Aggleton, J. P. & Mishkin, M. In Biological Foundations of Emotion (ed. Plutchik, R.) 281–299 (Academic Press, 1986).

de Gelder, B., Hortensius, R. & Tamietto, M. Attention and awareness each influence amygdala activity for dynamic bodily expressions-a short review. Frontiers in integrative neuroscience 6, 54, doi: 10.3389/fnint.2012.00054 (2012).

Van den Stock, J. et al. Neural correlates of body and face perception following bilateral destruction of the primary visual cortices. Frontiers in behavioral neuroscience 8, 30, doi: 10.3389/fnbeh.2014.00030 (2014).

Celeghin, A., de Gelder, B. & Tamietto, M. From affective blindsight to emotional consciousness. Consciousness and cognition, doi: 10.1016/j.concog.2015.05.007 (2015).

Hamann, S. Sex differences in the responses of the human amygdala. The Neuroscientist: a review journal bringing neurobiology, neurology and psychiatry 11, 288–293, doi: 10.1177/1073858404271981 (2005).

Petrides, M. & Pandya, D. N. Dorsolateral prefrontal cortex: comparative cytoarchitectonic analysis in the human and the macaque brain and corticocortical connection patterns. The European journal of neuroscience 11, 1011–1036 (1999).

Kim, M. J. et al. The structural and functional connectivity of the amygdala: from normal emotion to pathological anxiety. Behavioural brain research 223, 403–410, doi: 10.1016/j.bbr.2011.04.025 (2011).

Morelli, S. A. & Lieberman, M. D. The role of automaticity and attention in neural processes underlying empathy for happiness, sadness, and anxiety. Frontiers in human neuroscience 7, 160, doi: 10.3389/fnhum.2013.00160 (2013).

Bush, G. et al. Dorsal anterior cingulate cortex: a role in reward-based decision making. Proceedings of the National Academy of Sciences of the United States of America 99, 523–528, doi: 10.1073/pnas.012470999 (2002).

Mayberg, H. S. et al. Regional metabolic effects of fluoxetine in major depression: serial changes and relationship to clinical response. Biological psychiatry 48, 830–843 (2000).

Catani, M., Dell’acqua, F. & Thiebaut de Schotten, M. A revised limbic system model for memory, emotion and behaviour. Neuroscience and biobehavioral reviews 37, 1724–1737, doi: 10.1016/j.neubiorev.2013.07.001 (2013).

Hahn, B., Ross, T. J. & Stein, E. A. Cingulate activation increases dynamically with response speed under stimulus unpredictability. Cerebral cortex 17, 1664–1671, doi: 10.1093/cercor/bhl075 (2007).

Mohanty, A., Gitelman, D. R., Small, D. M. & Mesulam, M. M. The spatial attention network interacts with limbic and monoaminergic systems to modulate motivation-induced attention shifts. Cerebral cortex 18, 2604–2613, doi: 10.1093/cercor/bhn021 (2008).

Leech, R. & Sharp, D. J. The role of the posterior cingulate cortex in cognition and disease. Brain: a journal of neurology 137, 12–32, doi: 10.1093/brain/awt162 (2014).

Kret, M. E., Denollet, J., Grezes, J. & de Gelder, B. The role of negative affectivity and social inhibition in perceiving social threat: an fMRI study. Neuropsychologia 49, 1187–1193, doi: 10.1016/j.neuropsychologia.2011.02.007 (2011).

Alia-Klein, N. et al. Reactions to media violence: it’s in the brain of the beholder. PloS one 9, e107260, doi: 10.1371/journal.pone.0107260 (2014).

Ricciardi, E. et al. How the brain heals emotional wounds: the functional neuroanatomy of forgiveness. Frontiers in human neuroscience 7, 839, doi: 10.3389/fnhum.2013.00839 (2013).

Van den Stock, J. et al. Functional brain changes underlying irritability in premanifest Huntington’s disease. Human brain mapping 36, 2681–2690, doi: 10.1002/hbm.22799 (2015).

Garfinkel, S. N. et al. What the heart forgets: Cardiac timing influences memory for words and is modulated by metacognition and interoceptive sensitivity. Psychophysiology 50, 505–512, doi: 10.1111/psyp.12039 (2013).

Gilam, G. et al. Neural substrates underlying the tendency to accept anger-infused ultimatum offers during dynamic social interactions. NeuroImage 120, 400–411, doi: 10.1016/j.neuroimage.2015.07.003 (2015).

Keren, N. I., Lozar, C. T., Harris, K. C., Morgan, P. S. & Eckert, M. A. In vivo mapping of the human locus coeruleus. NeuroImage 47, 1261–1267, doi: 10.1016/j.neuroimage.2009.06.012 (2009).

Samuels, E. R. & Szabadi, E. Functional neuroanatomy of the noradrenergic locus coeruleus: its roles in the regulation of arousal and autonomic function part II: physiological and pharmacological manipulations and pathological alterations of locus coeruleus activity in humans. Current neuropharmacology 6, 254–285, doi: 10.2174/157015908785777193 (2008).

Samuels, E. R. & Szabadi, E. Functional neuroanatomy of the noradrenergic locus coeruleus: its roles in the regulation of arousal and autonomic function part I: principles of functional organisation. Current neuropharmacology 6, 235–253, doi: 10.2174/157015908785777229 (2008).

Audero, E. et al. Suppression of serotonin neuron firing increases aggression in mice. The Journal of neuroscience: the official journal of the Society for Neuroscience 33, 8678–8688, doi: 10.1523/JNEUROSCI.2067-12.2013 (2013).

Soiza-Reilly, M., Anderson, W. B., Vaughan, C. W. & Commons, K. G. Presynaptic gating of excitation in the dorsal raphe nucleus by GABA. Proceedings of the National Academy of Sciences of the United States of America 110, 15800–15805, doi: 10.1073/pnas.1304505110 (2013).

Kragel, P. A. & LaBar, K. S. Somatosensory Representations Link the Perception of Emotional Expressions and Sensory Experience. eNeuro 3, doi: 10.1523/ENEURO.0090-15.2016 (2016).

Tamietto, M. et al. Once you feel it, you see it: Insula and sensory-motor contribution to visual awareness for fearful bodies in parietal neglect. Cortex 62, 56–72, doi: 10.1016/j.cortex.2014.10.009 (2015).

Wicker, B. et al. Both of us disgusted in My insula: the common neural basis of seeing and feeling disgust. Neuron 40, 655–664 (2003).

Borgomaneri, S., Gazzola, V. & Avenanti, A. Motor mapping of implied actions during perception of emotional body language. Brain stimulation 5, 70–76, doi: 10.1016/j.brs.2012.03.011 (2012).

Borgomaneri, S., Vitale, F. & Avenanti, A. Early changes in corticospinal excitability when seeing fearful body expressions. Sci Rep 5, 14122, doi: 10.1038/srep14122 (2015).

Morris, J. S. et al. A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain: a journal of neurology 121, 47–57 (1998).

Pourtois, G., Schettino, A. & Vuilleumier, P. Brain mechanisms for emotional influences on perception and attention: what is magic and what is not. Biological psychology 92, 492–512, doi: 10.1016/j.biopsycho.2012.02.007 (2013).

Vuilleumier, P., Richardson, M. P., Armony, J. L., Driver, J. & Dolan, R. J. Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nature neuroscience 7, 1271–1278 (2004).

Vuilleumier, P. How brains beware: neural mechanisms of emotional attention. Trends in cognitive sciences 9, 585–594 (2005).

Amaral, D. G., Behniea, H. & Kelly, J. L. Topographic organization of projections from the amygdala to the visual cortex in the macaque monkey. Neuroscience 118, 1099–1120, pii: S0306452202010011 (2003).

Fessler, D. & Haley, K. Guarding the perimeter: The outside-inside dichotomy in disgust and bodily experience. Cognition and Emotion 20(1), 3–19 (2009).

Miller, S. B. Disgust: the gatekeeper emotion (Analytic Press, 2004).

Seubert, J. et al. Processing of disgusted faces is facilitated by odor primes: a functional MRI study. NeuroImage 53, 746–756, doi: 10.1016/j.neuroimage.2010.07.012 (2010).

Ho, C. Y. & Berridge, K. C. Excessive disgust caused by brain lesions or temporary inactivations: mapping hotspots of the nucleus accumbens and ventral pallidum. The European journal of neuroscience 40, 3556–3572, doi: 10.1111/ejn.12720 (2014).

Borg, C., de Jong, P. J., Renken, R. J. & Georgiadis, J. R. Disgust trait modulates frontal-posterior coupling as a function of disgust domain. Social cognitive and affective neuroscience 8, 351–358, doi: 10.1093/scan/nss006 (2013).

Chipchase, S. Y. & Chapman, P. Trade-offs in visual attention and the enhancement of memory specificity for positive and negative emotional stimuli. Q J Exp Psychol (Hove) 66, 277–298, doi: 10.1080/17470218.2012.707664 (2013).

Croucher, C. J., Calder, A. J., Ramponi, C., Barnard, P. J. & Murphy, F. C. Disgust enhances the recollection of negative emotional images. PloS one 6, e26571, doi: 10.1371/journal.pone.0026571 (2011).

Marchewka, A. et al. What Is the Effect of Basic Emotions on Directed Forgetting? Investigating the Role of Basic Emotions in Memory. Frontiers in human neuroscience 10, 378, doi: 10.3389/fnhum.2016.00378 (2016).

Ferrari, C., Lega, C., Tamietto, M., Nadal, M. & Cattaneo, Z. I find you more attractive … after (prefrontal cortex) stimulation. Neuropsychologia 72, 87–93, doi: 10.1016/j.neuropsychologia.2015.04.024 (2015).

Ferrari, C. et al. The Dorsomedial Prefrontal Cortex Plays a Causal Role in Integrating Social Impressions from Faces and Verbal Descriptions. Cerebral cortex 26, 156–165, doi: 10.1093/cercor/bhu186 (2016).

Etkin, A., Buchel, C. & Gross, J. J. The neural bases of emotion regulation. Nature reviews. Neuroscience 16, 693–700, doi: 10.1038/nrn4044 (2015).

Leem, Y. H., Yoon, S. S., Kim, Y. H. & Jo, S. A. Disrupted MEK/ERK signaling in the medial orbital cortex and dorsal endopiriform nuclei of the prefrontal cortex in a chronic restraint stress mouse model of depression. Neuroscience letters 580, 163–168, doi: 10.1016/j.neulet.2014.08.001 (2014).

Belden, A. C., Luby, J. L., Pagliaccio, D. & Barch, D. M. Neural activation associated with the cognitive emotion regulation of sadness in healthy children. Developmental cognitive neuroscience 9, 136–147, doi: 10.1016/j.dcn.2014.02.003 (2014).

Levesque, J. et al. Neural circuitry underlying voluntary suppression of sadness. Biological psychiatry 53, 502–510 (2003).

Brody, A. L., Barsom, M. W., Bota, R. G. & Saxena, S. Prefrontal-subcortical and limbic circuit mediation of major depressive disorder. Seminars in clinical neuropsychiatry 6, 102–112 (2001).

Deckersbach, T. et al. Episodic memory impairment in bipolar disorder and obsessive-compulsive disorder: the role of memory strategies. Bipolar disorders 6, 233–244, doi: 10.1111/j.1399-5618.2004.00118.x (2004).

Borgomaneri, S., Gazzola, V. & Avenanti, A. Temporal dynamics of motor cortex excitability during perception of natural emotional scenes. Social cognitive and affective neuroscience 9, 1451–1457, doi: 10.1093/scan/nst139 (2014).

Borgomaneri, S., Gazzola, V. & Avenanti, A. Transcranial magnetic stimulation reveals two functionally distinct stages of motor cortex involvement during perception of emotional body language. Brain structure & function 220, 2765–2781, doi: 10.1007/s00429-014-0825-6 (2015).

de Gelder, B. et al. Standing up for the body. Recent progress in uncovering the networks involved in the perception of bodies and bodily expressions. Neuroscience and biobehavioral reviews 34, 513–527, doi: 10.1016/j.neubiorev.2009.10.008 (2010).

Gothard, K. M. The amygdalo-motor pathways and the control of facial expressions. Frontiers in neuroscience 8, 1–7, doi: 10.3389/fnins.2014.00043 (2014).

Lee, T. W., Josephs, O., Dolan, R. J. & Critchley, H. D. Imitating expressions: emotion-specific neural substrates in facial mimicry. Social cognitive and affective neuroscience 1, 122–135, doi: 10.1093/scan/nsl012 (2006).

Rauchbauer, B., Majdandzic, J., Hummer, A., Windischberger, C. & Lamm, C. Distinct neural processes are engaged in the modulation of mimicry by social group-membership and emotional expressions. Cortex; a journal devoted to the study of the nervous system and behavior 70, 49–67, doi: 10.1016/j.cortex.2015.03.007 (2015).

Van den Stock, J., Tamietto, M., Hervais-Adelman, A., Pegna, A. J. & de Gelder, B. Body recognition in a patient with bilateral primary visual cortex lesions. Biological psychiatry 77, e31–33, doi: 10.1016/j.biopsych.2013.06.023 (2015).

Avendano, C., Price, J. L. & Amaral, D. G. Evidence for an amygdaloid projection to premotor cortex but not to motor cortex in the monkey. Brain research 264, 111–117 (1983).

Grezes, J., Valabregue, R., Gholipour, B. & Chevallier, C. A direct amygdala-motor pathway for emotional displays to influence action: A diffusion tensor imaging study. Human brain mapping 35, 5974–5983, doi: 10.1002/hbm.22598 (2014).

Ekman, P. & Friesen, W. Pictures of facial affect (Consulting Psychologists Press, 1976).

Jenkinson, M., Beckmann, C. F., Behrens, T. E., Woolrich, M. W. & Smith, S. M. FSL. NeuroImage 62, 782–790, doi: 10.1016/j.neuroimage.2011.09.015 (2012).

Reuter, M., Rosas, H. D. & Fischl, B. Highly accurate inverse consistent registration: a robust approach. NeuroImage 53, 1181–1196, doi: 10.1016/j.neuroimage.2010.07.020 (2010).

Chance, S. A., Esiri, M. M. & Crow, T. J. Amygdala volume in schizophrenia: post-mortem study and review of magnetic resonance imaging findings. The British journal of psychiatry: the journal of mental science 180, 331–338 (2002).

Tamietto, M. et al. Collicular vision guides non-conscious behavior. Journal of vision 8, 67a (2008).

McLaren, D. G., Ries, M. L., Xu, G. & Johnson, S. C. A generalized form of context-dependent psychophysiological interactions (gPPI): a comparison to standard approaches. NeuroImage 61, 1277–1286, doi: 10.1016/j.neuroimage.2012.03.068 (2012).

Acknowledgements

M.T., M.D., and A.C. are supported by a “Vidi” grant from the Netherlands Organization for Scientific Research (NWO) (grant 452-11-015), and by a FIRB – Futuro in Ricerca 2012 - grant from the Italian Ministry of Education University and Research (MIUR) (grant RBFR12F0BD).

Author information

Contributions

T.C., F.C. and G.G. conceived and designed the study, M-K.T., T.C., F.C. performed the testing and collected the data, S.D. contributed pulse sequences and data processing techniques, M.D., T.C., A.C., A.B. and M.T. analysed data, M.D., A.C. and M.T. interpreted data, M.D., L.W. and M.T. wrote the paper. All authors reviewed and approved the manuscript.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/

About this article

Cite this article

Diano, M., Tamietto, M., Celeghin, A. et al. Dynamic Changes in Amygdala Psychophysiological Connectivity Reveal Distinct Neural Networks for Facial Expressions of Basic Emotions. Sci Rep 7, 45260 (2017). https://doi.org/10.1038/srep45260

DOI: https://doi.org/10.1038/srep45260
