Stimuli Reduce the Dimensionality of Cortical Activity

- 1Department of Neurobiology and Behavior, State University of New York at Stony Brook, Stony Brook, NY, USA

- 2Graduate Program in Neuroscience, State University of New York at Stony Brook, Stony Brook, NY, USA

The activity of ensembles of simultaneously recorded neurons can be represented as a set of points in the space of firing rates. Even though the dimension of this space is equal to the ensemble size, neural activity can be effectively localized on smaller subspaces. The dimensionality of the neural space is an important determinant of the computational tasks supported by the neural activity. Here, we investigate the dimensionality of neural ensembles from the sensory cortex of alert rats during periods of ongoing (inter-trial) and stimulus-evoked activity. We find that dimensionality grows linearly with ensemble size, and grows significantly faster during ongoing activity compared to evoked activity. We explain these results using a spiking network model based on a clustered architecture. The model captures the difference in growth rate between ongoing and evoked activity and predicts a characteristic scaling with ensemble size that could be tested in high-density multi-electrode recordings. Moreover, we present a simple theory that predicts the existence of an upper bound on dimensionality. This upper bound is inversely proportional to the amount of pair-wise correlations and, compared to a homogeneous network without clusters, it is larger by a factor equal to the number of clusters. The empirical estimation of such bounds depends on the number and duration of trials and is well predicted by the theory. Together, these results provide a framework to analyze neural dimensionality in alert animals, its behavior under stimulus presentation, and its theoretical dependence on ensemble size, number of clusters, and correlations in spiking network models.

Introduction

Understanding the dynamics of neural activity and how it is generated in cortical circuits is a fundamental question in Neuroscience. The spiking activity of ensembles of simultaneously recorded neurons can be represented in terms of sequences of firing rate vectors, as shown e.g., in frontal (Abeles et al., 1995; Seidemann et al., 1996; Durstewitz et al., 2010), gustatory (Jones et al., 2007; Mazzucato et al., 2015), motor (Kemere et al., 2008), premotor and somatosensory cortex (Ponce-Alvarez et al., 2012). The dimension of each firing rate vector is equal to the number of ensemble neurons N and the collection of rate vectors across trials takes the form of a set of points in the N-dimensional space of firing rates. Such points may not fill the whole space, but be restricted to lie inside a lower-dimensional subspace (see Ganguli et al., 2008). Roughly, dimensionality is the minimal number of dimensions necessary to provide an accurate description of the neural dynamics. If ensemble neurons are independent of each other, neural activities at different times will scatter around in the space of firing rate, filling a large portion of the space. In this case, dimensionality will be maximal and equal to the size of the ensemble N. At the other extreme, if all neurons are strongly correlated, ensemble activity localizes along a line. In this case, dimensionality is minimal and equal to one. These simple examples suggest that dimensionality captures information about the structure of a cortical circuit and the functional relations among the simultaneously recorded neurons, such as their firing rates correlation computed over timescales of hundreds of milliseconds.

Different definitions of dimensionality have been introduced for different tasks and across neural systems (Ganguli et al., 2008; Churchland et al., 2010a; Abbott et al., 2011; Ganguli and Sompolinsky, 2012; Cadieu et al., 2013; Rigotti et al., 2013; Gao and Ganguli, 2015). Such measures of dimensionality can shed light on the underlying neural computation; for example, they can predict the onset of an error trial in a recall task (Rigotti et al., 2013), or can allow the comparison of classification accuracy between different brain areas (e.g., IT vs. V4) and synthetic algorithms (Cadieu et al., 2013). Here, we investigate a measure of dimensionality closely related to the firing rate correlations of simultaneously recorded neurons (Abbott et al., 2011); such correlations may provide a signature of feature-based attention (Cohen and Maunsell, 2009) and other top-down cognitive factors (Nienborg et al., 2012). We elucidate the dependence of dimensionality on experimental parameters, such as ensemble size and interval length, and we show that it varies across experimental conditions. We address these issues by comparing recordings of ensembles of neurons from the gustatory cortex (GC) of alerts rats to a biologically plausible network model based on neural clusters with recurrent connectivity. This model captures neural activity in GC during periods of ongoing and stimulus-evoked activity, explaining how the spatiotemporal dynamics of ensemble activity is organized in sequences of metastable states and how single-neuron firing rate distributions are modulated by stimulus presentation (Mazzucato et al., 2015). Here, we show that the same model expounds the observed dependence of dimensionality on ensemble size and how such dependence is reduced by the presentation of a stimulus. By comparing the clustered network model with a homogeneous network without clusters, we find that the clustered network has a larger dimensionality that depends on the number of clusters and the firing rate correlations among ensemble neurons. A simple theory explains these results and allows extrapolating the scaling of dimensionality to very large ensembles. Our theory shows that recurrent networks with clustered connectivity provide a substrate for high-dimensional neural representations, which may lead to computational advantages.

Methods

Experimental Procedures

Adult female Long Evans rats were used for this study (Samuelsen et al., 2012; Mazzucato et al., 2015). Animals received ad lib. access to food and water, unless otherwise mentioned. Movable bundles of 16 microwires attached to a “mini-microdrive” (Fontanini and Katz, 2006; Samuelsen et al., 2012) were implanted in GC (AP 1.4, ML ± 5 from bregma, DV –4.5 from dura). After electrode implantation, intra-oral cannulae (IOC) were inserted bilaterally (Phillips and Norgren, 1970; Fontanini and Katz, 2005). At the end of the surgery a positioning bolt for restraint was cemented in the acrylic cap. Rats were given at least 7 days for recovery before starting the behavioral procedures outlined below. All experimental procedures were approved by the Institutional Animal Care and Use Committee of Stony Brook University and complied with University, state, and federal regulations on the care and use of laboratory animals. More details can be found in Samuelsen et al. (2012).

Rats were habituated to being restrained and receiving fluids through IOCs, and then trained to self-deliver water by pressing a lever following a 75 dB auditory cue at a frequency of 4 KHz. The interval at which lever-pressing delivered water was progressively increased to 40 ± 3 s (ITI). During experimental sessions additional tastants were automatically delivered at random times near the middle of the ITI, at random trials and in the absence of the anticipatory cue. A computer-controlled, pressurized, solenoid-based system delivered ~40 μl of fluids (opening time ~40 ms) directly into the mouth through a manifold of 4 polymide tubes slid into the IOC. The following four tastants were delivered: 100 mM NaCl, 100 mM sucrose, 100 mM citric acid, and 1 mM quinine HCl. Water (~50 μl) was delivered to rinse the mouth clean through a second IOC 5 s after the delivery of each tastant. Each tastant was delivered for at least 6 trials in each condition. Upon termination of each recording session the electrodes were lowered by at least 150 μm so that a new ensemble could be recorded.

Evoked activity periods were defined as the interval after tastant delivery (time t = 0 in our figures) and before water rinse (time t = 5 s). Only trials in which the tastants were automatically delivered were considered for the analysis of evoked activity, to minimize the effects of cue-related expectations (Samuelsen et al., 2012). Ongoing activity periods were defined as the 5 s-long intervals at the end of each inter-trial period.

The behavioral state of the rat was monitored during the experiment for signs of disengagement. Erratic lever pressing, inconstant mouth movements and fluids dripping from the mouth indicated disengagement and led to the termination of the experiment. In addition, since disengagement from the task is also reflected in the emergence of high power μ oscillations in local field potentials, occurrences of such periods were removed offline and not analyzed further (Fontanini and Katz, 2008).

Data Analysis

Single neuron action potentials were amplified, bandpass filtered (at 300–8 KHz), digitized and recorded to a computer (Plexon, Dallas, TX). Single units of at least 3:1 signal-to-noise ratio were isolated using a template-matching algorithm, cluster cutting techniques and examination of inter-spike interval plots (Offline Sorter, Plexon, Dallas, TX). All data analyses and model simulations were performed using custom software written in Matlab (Mathworks, Natick, MA, USA), Mathematica (Wolfram Research, Champaign, IL), and C. Starting from a pool of 299 single neurons in 37 sessions, neurons with peak firing rate lower than 1 Hz (defined as silent) were excluded from further analysis, as well as neurons with a large peak around the 6–10 Hz in the spike power spectrum, which were considered somatosensory (Katz et al., 2001; Samuelsen et al., 2012; Horst and Laubach, 2013). Only ensembles with 3 or more simultaneously recorded neurons were further analyzed (167 non-silent, non-somatosensory neurons from 27 ensembles). We analyzed ongoing activity in the 5 s interval preceding either the auditory cue or taste delivery, and evoked activity in the 5 s interval following taste delivery in trials without anticipatory cue, wherein significant taste-related information is present (Jezzini et al., 2013).

Hidden Markov Model (HMM) Analysis

Here we briefly outline the procedure used in Mazzucato et al. (2015), see this reference and (Jones et al., 2007; Escola et al., 2011; Ponce-Alvarez et al., 2012) for further details. Under the HMM, a system of N recorded neurons is assumed to be in one of a predetermined number of hidden (or latent) states (Rabiner, 1989; Zucchini and MacDonald, 2009). Each state m is defined as a vector of N firing rates νi(m), i = 1, …, N, one for each simultaneously recorded neuron. In each state, the neurons were assumed to discharge as stationary Poisson processes (Poisson-HMM). We matched the model to the data segmented in 1-ms bins (see below). In such short bins, we found that typically at most one spike was emitted across all simultaneously recorded neurons. If more than one neuron fired an action potential in a given bin, only one (randomly chosen) was kept for further analysis (this only occurred in a handful of bins per trial; Escola et al., 2011). We denote by yi(t) the spiking activity of the i-th neuron in the interval [t, t + dt], yi(t) = 1 if the neuron emitted a spike and yi(t) = 0 otherwise. Denoting with St the hidden state of the ensemble at time t, the probability of having a spikes from neuron i in a given state m in the interval [t, t + dt] is given by .

The firing rates νi(m) completely define the states and are also called “emission probabilities” in HMM parlance. The emission and transition probabilities were found by maximization of the log-likelihood of the data given the model via the expectation-maximization (EM), or Baum-Welch, algorithm (Rabiner, 1989), a procedure known as “training the HMM.” For each session and type of activity (ongoing vs. evoked), ensemble spiking activity from all trials was binned at 1 ms intervals prior to training assuming a fixed number of hidden states M (Jones et al., 2007; Escola et al., 2011). For each given number of states M, the Baum-Welch algorithm was run 5 times, each time with random initial conditions for the transition and emission probabilities. The range of hidden states M for the HMM analyses were Mmin = 10 and Mmax = 20 for spontaneous activity, and Mmin = 10 and Mmax = 40 for evoked activity. Such numbers were based on extensive exploration of the parameter space and previous studies (Jones et al., 2007; Miller and Katz, 2010; Escola et al., 2011; Ponce-Alvarez et al., 2012; Mazzucato et al., 2015). For evoked activity, each HMM was trained on all four tastes simultaneously. Of the models thus obtained, the one with largest total likelihood M* was taken as the best HMM match to the data, and then used to estimate the probability of the states given the model and the observations in each bin of each trial (a procedure known as “decoding”). During decoding, only those hidden states with probability exceeding 80% in at least 50 consecutive bins were retained (henceforth denoted simply as “states”). State durations were approximately exponentially distributed with median duration 0.60 s (95% CIs: 0.07–4.70) during ongoing activity and 0.30 s (0.06–2.80) during evoked activity (Mazzucato et al., 2015).

The firing rate fits νi(m) in each trial were obtained from the analytical solution of the maximization step of the Baum-Welch algorithm,

Here, [yi(1), …, yi(T)] is the spike train of the i-th neuron in the current trial, and T is the total duration of the trial. rm(t) = P(St = m|y(1), …, y(T)) is the probability that the hidden state St at time t is m, given the observations.

Dimensionality Measure

We defined the dimensionality of the neural activity as

where the are the principal eigenvalues expressed as fractions of the total amount of variance explained, i.e., , where λj are the eigenvalues of the covariance matrix of the firing rates (see below).

The dimensionality can be computed exactly in some relevant special cases. The calculation is simplified by the observation that Equation (2) is equivalent to

where Cf is the true covariance matrix of the firing rate vectors, is the trace of matrix A, and . We consider in the following only the case of firing rates in equal bins, hence we can replace Cf with the covariance matrix of the spike counts C in the definition of d:

where for later convenience we have introduced the notation

Note that d does not depend on the distribution of firing rates, but only on their covariance, up to a common scaling factor.

Dimensionality in the case of uniform pair-wise correlations

When all the pair-wise correlations rij are identical, rij = ρ for all i ≠ j,

we have for i ≠ j, where is the spike count variance. In this case, we find from Equation (4) that

and the dimensionality, Equation (3), is given by

where

Note that since both aN and bN scale as N when N is large, in general for large N.

If all spike counts have equal variance, σi = σ, we find exactly :

and the dependence of d on the variance drops out. Note that for uncorrelated spike counts (ρ = 0) this formula gives d = N, whereas for any finite correlation we find the upper bound d = 1∕ρ2. For N > 1, the dimensionality is inversely related to the amount of pair-wise correlation ρ.

Consider the case where spike counts have variances drawn from a probability distribution with mean and variance , and the pair-wise correlation coefficients rij, for i ≠ j, are drawn from a distribution with mean E[rij] = ρ and variance . In such a case one can evaluate Equation (3) approximately by its Taylor expansion around the mean values of the quantities in Equation (4). At leading order in N one finds

where E[.] denotes expectation. To obtain this result we have used the definitions in Equation (4), from which

and the fact that, given a random vector Xi with mean μi and covariance Cij, and a constant symmetric matrix Aij, the expectation value of the quadratic form is

In the case of uncorrelated spike counts (ρ = 0, δρ = 0), dimensionality still depends linearly on the ensemble size N, but with a smaller slope compared to the case of equal variances (Equation 8 with ρ = 0).

Dimensionality in the Case of Neural Clusters

Given an ensemble of N neurons arranged in Q clusters (motivated by the model network described later in section “Spiking neuron model”), we created ensembles of uncorrelated spike trains for N ≤ Q and correlated within each cluster for N > Q. Thus, if N ≤ Q the correlation matrix is the N × N identity matrix. If N > Q, the (Q + 1)th neuron was added to the first cluster, with correlation ρ with the other neuron of the cluster, and uncorrelated to the neurons in the remaining clusters. The (Q + 2)th neuron was added to the second cluster, with correlation ρ with the other neuron of the second cluster, and uncorrelated to the neurons in the remaining clusters, and so on. Similarly, the (2Q + p)th neuron (p ≤ Q) was added to the p-th cluster, with pair-wise correlation ρ with the other neurons of the same cluster, but no correlation with the neurons in the remaining clusters; and so on. In general, for N = mQ + p neurons (where is the largest integer smaller than ), the procedure picked m + 1 neurons per cluster for the first p cluster and m neurons per cluster for the remaining Q − p clusters, with uniform pair-wise correlations ρ in the same cluster while neurons from different clusters were uncorrelated. The resulting correlation matrix r was block diagonal

where each of the Q blocks contains the correlations of neurons from the same cluster. Inside each block Ri, the off-diagonal terms are equal to the uniform within-cluster correlation ρ:

The first p blocks have size (m + 1) × (m + 1) and the last Q − p blocks have size m × m, so that (m + 1)p + m(Q − p) = N. The remaining elements of matrix r (representing pair-wise correlations of neurons belonging to different clusters) were all zero. Recalling that Cij = rij σi σj, one finds Tr(C) = pbm + 1 + (Q − p)bm and , where an and bn are defined in Equation (6), from which one obtains

In the approximation where all neurons have the same variance this simplifies to

Recall that in the formulae above m and p depend on N. For finite ρ, Equation (13) predicts the bound d ≤ Q∕ρ2 for any N > 1, with this value reached asymptotically for large N. When single neuron variances are drawn from a distribution with mean and variance , an expression for the dimensionality can be obtained from Equation (12) at leading order in the expectation values of the quantities in Equation (4) (not shown), with a procedure similar to that used to obtain Equation (9).

Pair-Wise Correlations

Given neuron i and neuron j's spike trains, we computed the spike count correlation coefficient rij

where S is the sample covariance matrix of the spike counts estimated as

where ni(b, s) is the spike count of neuron i in bin b and trial s. The sum goes over all Nb bins and over all NT trials in a session, whereas < ni > is the average across trials and bins for neuron i. In the main text and figures we present results obtained with a bin size of 200 ms, but have performed the same analyses with bin sizes varying from 10 ms to 5 s (see Results for details).

Significance of the correlation was estimated as follows (Renart et al., 2010): Nshuffle = 200 trial-shuffled correlation coefficients were computed, then a p-value was determined as the fraction of shuffled coefficients whose absolute value exceeded the absolute value of the experimental correlation, . For example, a correlation r was significant at p = 0.05 confidence level if no more than 10 shuffled correlation coefficients out of 200 exceeded r.

The pair-wise correlations of firing rates vectors computed in bins of fixed duration T were given by Equation (14) with ni(b, s) replaced by ni(b, s)∕T. Instead, correlations of firing rates vectors inside hidden states (which have variable duration) were estimated after replacing ni(b, s) in Equation (14) with νi(m, s), the firing rate of neuron i in state m in trial s. For each trial s, this quantity was computed according to Equation (1).

Estimation of Dimensionality

The eigenvalues λj in Equation (2) were found with a standard Principal Component Analysis (PCA) of the set of all firing rate vectors (Chapin and Nicolelis, 1999). The firing rate vectors were obtained via the HMM analysis (see Equation 1); all data from either ongoing or evoked activity were used. For the analysis of Figure 3E, where the duration and number of trials were varied, only the firing rate vectors of the HMM states present in the given trial snippet were used (even if present for only a few ms). When firing rate vectors in hidden states were not available (mainly, in “shuffled” datasets and in asynchronous homogeneous networks, see below for details), the firing rates were computed as spike counts in T = 200 ms bins divided by T, ni(b, s)∕T, where ni(b, s) is as defined in Equation (14) (Figures 3F,G, 6E, 7D, 9A). Dimensionality values were averaged across 20 simulated sessions for each ensemble size N; in each session, 40 trials of 5 s duration, resulting in NT = 1, 000 bins, were used (using bin widths of 50–500 ms did not change the results). Note that for the purpose of computing the dimensionality (Equation 3), it is equivalent to use either the binned firing rate ni(b, s)∕T or the spike count ni(b, s).

In our data, d roughly corresponded to the number of principal components explaining between 80 and 90% of the variance. However, note that all eigenvalues are retained in our definition of dimensionality given in Equation (2) above.

Shuffled Datasets

The dimensionality of the data as a function of ensemble size N was validated against surrogate datasets constructed by shuffling neurons across different sessions while matching the empirical distribution of ensemble sizes. Comparison analyses between empirical and shuffled ensembles were trial-matched using the minimal number of trials per condition across ensembles, and then tested for significant difference with the Mann-Whitney test on samples obtained from 20 bootstrapped ensembles. Neurons whose firing rate variance exceeded the population average by two standard deviations were excluded (8/167 of non-silent, non-somatosensory neurons).

Dependence on the Number of Trials: Simulations (Figures 7E, 8A)

The estimate of d from data depends on the number and duration of the trials (Figure 3E and Equation 16 below). To investigate this phenomenon in a simple numerical setting we generated N × NT “nominal” firing rates, thought of as originating from N neurons, each sampled NT times (trials). The single firing rates were sampled according to a log-normal distribution with equal means and covariance leading to Equation (7), i.e., , with δij = 1 if i = j, and zero otherwise (note that the actual distribution used is immaterial since the dimensionality only depends on the covariance matrix, see Equation 3). We considered the two cases of equal variance for all ensemble neurons, σi = σ for all i (Figure 8A) or variances σi sampled from a log-normal distribution (Figure 8A and “+” in Figure 7E). The same N and NT as used for the analysis of the model simulations in Figure 7D were used (where the “trials” were NT bins of 200 ms in 40 intervals of 5 second duration for each ensemble size N). The covariance of the data thus generated was estimated according to Equation (14), based on which the dimensionality Equation (3) was computed. The estimated dimensionality depends on N and NT and was averaged across 100 values of d, each obtained as explained above. Note that in this simplified setting increasing the duration of each trial is equivalent to adding more trials, i.e., the effect of having a trial 400 ms long producing 2 firing rates (one for each 200 ms bin) is equivalent to having two trials of 200 ms duration. In the general case, the effect of trial duration on d will depend on how trial duration affects the variance and correlations of the firing rates.

Dependence on the Number of Trials: Theory

The dependence of dimensionality on the number of trials can be computed analytically under the assumption that N ensemble neurons generate spike counts ni, for i = 1, …, N, distributed according to a multivariate Gaussian. Since we are interested in the spike-count covariance Equation (14), we can assume the spike-count distribution to have zero mean and true covariance Cij. The matrix , where is the covariance matrix Equation (14) sampled from NT trials, is distributed according to a Wishart distribution WN(Cij, NT − 1) with NT − 1 degrees of freedom (Mardia et al., 1979). Since the variance of the Wishart distribution,

is proportional to NT, we obtain the variance of the entries of the sample covariance as

to be used in the estimator of d (from Equation 3)

where are given by Equation (4) with C replaced by S. With a calculation similar to that used to obtain Equation (9), to leading order in N and NT one finds

with

where we also used Equations (10) and (11), with and , for i ≠ j. In conclusion, one finds

Model Fitting

The dependence of the data's dimensionality on ensemble size N was fitted by a straight line via standard least-squares,

separately for ongoing and evoked activity (Figures 3B–D, 6B–D). Comparison between the dimensionality of evoked and ongoing activity was carried out with a 2-way ANOVA with condition (evoked vs. ongoing) and ensemble size (N) as factors. Since d depends on the number and duration of the trials used to estimate the covariance matrix (Figure 3E and Equation 16), we matched both the number of trials and trial length in comparisons of ongoing and evoked dimensionality. If multiple tastes were used, the evoked trials were each matched to a random subset of an equal number of ongoing trials.

The dependence of dimensionality d on ensemble size N in a surrogate dataset of Poisson spike trains with mean pairwise correlation ρ (generated according to the algorithm described in the next section) was modeled as Equation (16) with δρ2 = αρ2 and δσ4 = σ4 = β (Figure 7D, dashed lines); NT was fixed to 1000 (40 trials of 5 s each, segmented in 200 ms bins). The parameters α, β were tuned to fit all Poisson trains simultaneously on datasets with N = 5, 10, …, 100 and ρ = 0, 0.01, 0.05, 0.1, 0.2, with 20 ensembles for each value (Figure 7D; only the fits for ρ = 0, 0.1, 0.2 are shown). A standard non-linear least-squares procedure was used (Holland and Welsch, 1977).

Generation of Correlated Poisson Spike Trains

Ensembles of independent and correlated Poisson spike trains were generated for the analysis of Figure 7. Ensembles of independent stationary Poisson spike trains with given firing rates νi were generated by producing their interspike intervals according to an exponential distribution with parameter νi. Stationary Poisson spike trains with fixed pairwise correlations (but no temporal correlations) were generated according to the method reported in Macke et al. (2009), that we briefly outline below.

We split each trial into 1 ms bins and consider the associated binary random variable Xi(t) = 1 if the i-th neuron emitted a spike in the t-th bin, and Xi(t) = 0 if no spike was emitted. These samples were obtained by first drawing a sample from an auxiliary N-dimensional Gaussian random variable ~ (γ, Λ) and then thresholding it into 0 and 1: Xi = 1 if i > 0, and Xi = 0 otherwise. Here, γ = {γ1, γ2, …, γN} is the mean vector and Λ = {Λij} is the covariance matrix of the N-dimensional Gaussian variable . For appropriately chosen parameters γi and Λij the method generates correlated spike trains with the desired firing rates νi and pairwise spike count correlation coefficients rij.

The prescription for γi and Λij is most easily expressed as a function of the desired probabilities μi of having a spike in a bin of width dt, μi = P(Xi(t) = 1), and the pairwise covariance cij of the random binary vectors Xi(t) and Xj(t), from which γi and Λij can be obtained by inverting the following relationships:

Here, Φ(x) is the cumulative distribution of a univariate Gaussian with mean 0 and variance 1 evaluated at x, and Φ2(x, y, Λ) is the cumulative distribution of a bivariate Gaussian with means 0, variances 1 and covariance Λ evaluated at (x, y) (note that the distributions Φ and Φ2 are unrelated to the N-dimensional Gaussian ~ (γ, Λ)). Without loss of generality we imposed unit variances for i, i.e., Λii = 1.

We related the spike probabilities μi to the firing rates νi as , with (1 − μi) being the probability of no spikes in the same bin. When dt approaches zero, μi ≈ νidt and the spike trains generated as vectors of binary random variables by sampling ~ (γ, Λ) will approximate Poisson spike trains (dt = 1 ms bins were used). In order to have a fair comparison with the data generated by the spiking network model (described in the next section), the mean firing rates of the Poisson spike trains were matched to the average firing rates obtained from the simulated data.

Since γ and Λ were the same in all bins, values of Xi(t) and Xi(s) were independent for t ≠ s (i.e., the spike trains had no temporal correlations). As a consequence, the random binary vectors have the same pair-wise correlations as the spike counts, and the cij are related to the desired rij by , where μi(1 − μi) is the variance of Xi. See Macke et al. (2009) for further details.

Spiking Network Model

We modeled the data with a recurrent spiking network of N = 5000 randomly connected leaky integrate-and-fire (LIF) neurons, of which 4000 excitatory (E) and 1000 inhibitory (I). Connection probability pβα from neurons in population α ∈ E, I to neurons in population β ∈ E, I were pEE = 0.2 and pEI = pIE = pII = 0.5; a fraction f = 0.9 of excitatory neurons were arranged into Q different clusters, with the remaining neurons belonging to an unstructured (“background”) population (Amit and Brunel, 1997). Synaptic weights Jβα from neurons in population α ∈ E, I to neurons in population β ∈ E, I scaled with N as , with jβα constants having the following values (units of mV): jEI = 3.18, jIE = 1.06, jII = 4.24, jEE = 1.77. Within an excitatory cluster synaptic weights were potentiated, i.e., they took average values of 〈J〉+ = J+jEE with J+ > 1, while synaptic weights between units belonging to different clusters were depressed to average values 〈J〉− = J−jEE, with J− = 1 − γf(J+ − 1) < 1, with γ = 0.5. The latter relationship between J+ and J− helps to maintain balance between overall potentiation and depression in the network (Amit and Brunel, 1997).

Below spike threshold, the membrane potential V of each LIF neuron evolved according to

with a membrane time constant τm = 20 ms for excitatory and 10 ms for inhibitory units. The input current was the sum of a recurrent input Irec, an external current Iext representing an ongoing afferent input from other areas, and an external stimulus Istim representing e.g., a delivered taste during evoked activity only. In our units, a membrane capacitance of 1nF is set to 1. A spike was said to be emitted when V crossed a threshold Vthr, after which V was reset to a potential Vreset = 0 for a refractory period of τref = 5 ms. Spike thresholds were chosen so that, in the unstructured network (i.e., with J+ = J− = 1), the E and I populations had average firing rates of 3 and 5 spikes/s, respectively (Amit and Brunel, 1997). The recurrent synaptic input to unit i evolved according to the dynamical equation

where was the arrival time of k-th spike from the j-th pre-synaptic unit, and τs was the synaptic time constant (3 and 2 ms for E and I units, respectively), resulting in an exponential post-synaptic current in response to a single spike, , where Θ(t) = 1 for t ≥ 0, and Θ(t) = 0 otherwise. The ongoing external current to a neuron in population α was constant and given by Iext = Nextpα0Jα0 νext, where Next = nEN, pα0 = pEE, with jE0 = 0.3, jI0 = 0.1, and νext = 7 spikes/s. During evoked activity, stimulus-selective units received an additional input representing one of the four incoming stimuli. The stimuli targeted combinations of neurons as observed in the data. Specifically, the fractions of neurons responsive to n = 1, 2, 3 or all 4 stimuli were 17% (27/162), 22% (36/162), 26% (42/162), and 35% (57/162) (Jezzini et al., 2013; Mazzucato et al., 2015). Each stimulus had constant amplitude νstim ranging from 0 to 0.5 νext. In the following we measure the stimulus amplitude as percentage of νext (e.g., “10%” corresponds to νstim = 0.1 νext). The onset of each stimulus was always t = 0, the time of taste delivery. The stimulus current to a unit in population α was constant and given by Istim = Nextpα0Jα0 νstim.

Mean Field Analysis of the Model

The stationary states of the spiking network model in the limit of large N were found with a mean field analysis (Amit and Brunel, 1997; Brunel and Hakim, 1999; Fusi and Mattia, 1999; Curti et al., 2004; Mazzucato et al., 2015). Under typical conditions, each neuron of the network receives a large number of small post-synaptic currents (PSCs) per integration time constant. In such a case, the dynamics of the network can be analyzed under the diffusion approximation within the population density approach. The network has α = 1, …, Q + 2 sub-populations, where the first Q indices label the Q excitatory clusters, α = Q + 1 labels the “background” units, and α = Q + 2 labels the homogeneous inhibitory population. In the diffusion approximation (Tuckwell, 1988; Lánský and Sato, 1999; Richardson, 2004), the input to each neuron is completely characterized by the infinitesimal mean μα and variance of the post-synaptic potential (see Mazzucato et al., 2015 for the expressions of the infinitesimal mean and variance for all subpopulations).

Parameters were chosen so that the network with J+ = J− = 1 (where all E → E synaptic weights are equal) would operate in the balanced asynchronous regime (van Vreeswijk and Sompolinsky, 1996, 1998; Renart et al., 2010), where incoming contributions from excitatory and inhibitory inputs balance out, neurons fire irregular spike trains with weak pair-wise correlations.

The unstructured network has only one dynamical state, i.e., a stationary point of activity where all E and I neurons have constant firing rate νE and νI, respectively. In the structured network (where J+ > 1), the network undergoes continuous transitions among a repertoire of states, as shown in the main text. To avoid confusion between network activity states and HMM states, we refer to the former as network “configurations” instead of states. Admissible networks configurations must satisfy the Q + 2 self-consistent mean field equations (Amit and Brunel, 1997)

where is the firing rate vector and is the current-to-rate response function of the LIF neurons. For fast synaptic times, i.e., is well approximated by (Brunel and Sergi, 1998; Fourcaud and Brunel, 2002)

where

where is the square root of the ratio of synaptic time constant to membrane time constant, and . This theoretical response function has been fitted successfully to the firing rate of neocortical neurons in the presence of in vivo-like fluctuations (Rauch et al., 2003; Giugliano et al., 2004; La Camera et al., 2006, 2008).

The fixed points of the mean field equations were found with Newton's method (Press et al., 2007). The fixed points can be either stable (attractors) or unstable depending on the eigenvalues λα of the stability matrix

evaluated at the fixed point (Mascaro and Amit, 1999). If all eigenvalues have negative real part, the fixed point is stable (attractor). If at least one eigenvalue has positive real part, the fixed point is unstable. Stability is meant with respect to an approximate linearized dynamics of the mean and variance of the input current:

where μα and are the stationary values for fixed given earlier. For fast synaptic dynamics in the asynchronous balanced regime, these rate dynamics are in very good agreement with simulations (La Camera et al., 2004—see Renart et al., 2004; Giugliano et al., 2008 for more detailed discussions).

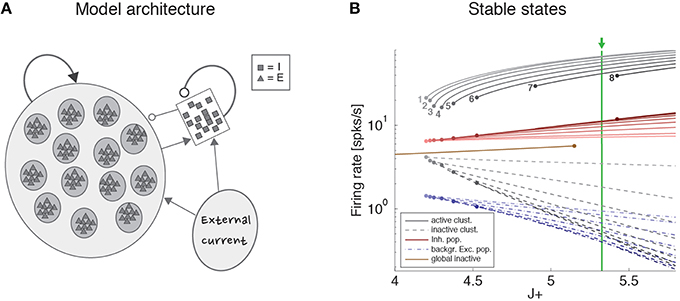

Metastable Configurations in the Network Model

The stable configurations of a network with an infinite number of neurons were obtained in the mean field approximation of the previous section and are shown in Figure 4B for Q = 30 and a range of values of the relative potentiation parameter J+. Above the critical point J+ = 4.2, stable configurations characterized by a finite number of active clusters emerge (gray lines; the number of active clusters is reported next to each line). For a given J+, the firing rate is the same in all active clusters and is inversely proportional to the total number of active clusters. Stable patterns of firing rates are also found in the inhibitory population (red lines), in the inactive clusters (having low firing rates; gray dashed lines), and in the unstructured excitatory population (dashed blue lines). For a fixed value of J+, multiple stable configurations coexist with different numbers of active clusters. For example, for J+ = 5.3, configurations with up to 7 active clusters are stable, each configuration with different firing rates. This generates multistable firing rates in single neurons, i.e., the property, also observed in the data, that single neurons can attain more than 2 firing rates across states (Mazzucato et al., 2015). Note that if J+ ≤ 5.15 an alternative stable configuration of the network with all clusters inactive (firing rates < 10 spikes/s) is also possible (single brown line).

Strictly speaking, the configurations in Figure 4B are stable only in a network containing an infinite number of uncorrelated neurons. In a finite network (or when neurons are strongly correlated) these configurations can lose stability due to strong fluctuations, which ignite transitions among the different configurations. Full details are reported in Mazzucato et al. (2015).

Model Simulations and Analysis of Simulated Data

The dynamical equations of the LIF neurons were integrated with the Euler algorithm with a time step of dt = 0.1 ms. We simulated 20 different networks (referred to as “sessions” in the following) during both ongoing and evoked activity. We chose four different stimuli per session during evoked activity (to mimic taste delivery). Trials were 5 s long. The HMM analyses for Figures 2, 5 were performed on ensembles of randomly selected excitatory neurons with the same procedure used for the data (see previous section “Hidden Markov Model (HMM) analysis”). The ensemble sizes were chosen so as to match the empirical ensemble sizes (3–9 randomly selected neurons). For the analysis of Figure 9A, ensembles of increasing size (from 5 to 100 neurons) were used from simulations with Q = 30 clusters. When the ensemble size was less than the number of clusters (N ≤ Q), each neuron was selected randomly from a different cluster; when ensemble size was larger than the number of clusters, one neuron was added to each cluster until all clusters were represented, and so on until all N neurons had been chosen. To allow comparison with surrogate Poisson spike trains, the dimensionality of the simulated data was computed from the firing rate vectors in T = 200 ms bins as explained in section “Dimensionality measure.” For control, the dimensionality was also computed from the firing rate vectors in hidden states obtained from an HMM analysis, obtaining qualitatively similar results.

Results

Dimensionality of the Neural Activity

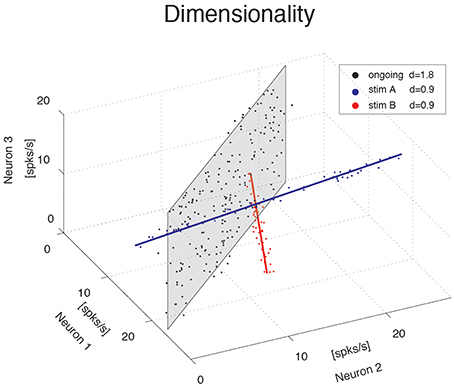

We investigate the dimensionality of sequences of firing rate vectors generated in the GC of alert rats during periods of ongoing or evoked activity (see Methods). To provide an intuitive picture of the meaning of dimensionality adopted in this paper, consider the firing rate vectors from N simultaneously recorded neurons. These vectors can occupy, a priori, the entire N-dimensional vector space minimally required to describe the population activity of N independent neurons. However, the sequence of firing rate vectors generated by the neural dynamics may occupy a subspace that is spanned by a smaller number m < N of coordinate axes. For example, the data obtained by the ensemble of three simulated spike counts in Figure 1 mostly lie on a 2D space, the plane shaded in gray. Although 3 coordinates are still required to specify all data points, a reduced representation of the data, such as that obtained from PCA, would quantify the dimension of the relevant subspace as being close to 2. To quantify this fact we use the following definition of dimensionality (Abbott et al., 2011)

where N is the ensemble size and are the normalized eigenvalues of the covariance matrix, each expressing the fraction of the variance explained by the corresponding principal component (see Methods for details). According to this formula, if the first n eigenvalues express each a fraction 1∕n of the variance while the remaining eigenvalues vanish, the dimensionality is d = n. In less symmetric situations, d reflects roughly the dimension of the linear subspace explaining most variance about all data points. In the example of the data on the gray plane of Figure 1, d = 1.8, which is close to 2, as expected. Similarly, data points lying mostly along the blue and red straight lines in Figure 1 have a dimensionality of 0.9, close to 1. In all cases, d > 0 and d ≤ N, where N is the ensemble size.

Figure 1. Dimensionality of the neural representation. Pictorial representation of the firing rate activity of an ensemble of N = 3 neurons. Each dot represents a three-dimensional vector of ensemble firing rates in one trial. Ensemble ongoing activity localizes around a plane (black dots cloud surrounding the shaded black plane), yielding a dimensionality of d = 1.8. Activity evoked by each of two different stimuli localizes around a line (red and blue dots clouds and lines), yielding a dimensionality of d = 0.9 in both cases.

The blue and red data points in Figure 1 were obtained from a fictitious scenario where neuron 1 and neuron 2 were selective to surrogate stimuli A and B, respectively, and are meant to mimic two possible evoked responses. The subspace containing responses to both stimuli A and B would have a dimensionality dA + B = 1.7, similar to the dimensionality of the data points distributed on the gray plane (meant instead to represent spike counts during ongoing activity in the same fictitious scenario). Thus, a dimensionality close to 2 could originate from different patterns of activity, such as occupying a plane or two straight lines. Other and more complex scenarios are, of course, possible. In general, the dimensionality will reflect existing functional relationships among ensemble neurons (such as pair-wise correlations) as well as the response properties of the same neurons to external stimuli. The pictorial example of Figure 1 caricatures a stimulus-induced reduction of dimensionality, as found in the activity of simultaneously recorded neurons from the GC of alert rats, as we show next.

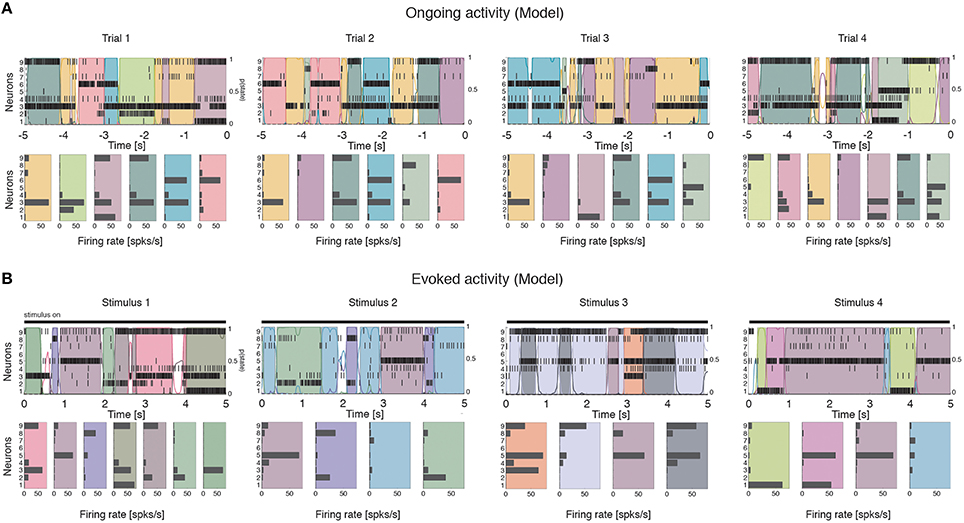

Dimensionality is Proportional to Ensemble Size

We computed the dimensionality of the neural activity of ensembles of 3–9 simultaneously recorded neurons in the gustatory cortex of alert rats during the 5 s inter-trial period preceding (ongoing activity) and following (evoked activity) the delivery of a taste stimulus (said to occur at time t = 0; see Methods). Ensemble activity in single trials during both ongoing (Figure 2A) and evoked activity (Figure 2B) could be characterized in terms of sequences of metastable states, where each state is defined as a collection of firing rates across simultaneously recorded neurons (Jones et al., 2007; Mazzucato et al., 2015). Transitions between consecutive states were detected via a Hidden Markov Model (HMM) analysis, which provides the probability that the network is in a certain state at every 1 ms bin (Figure 2, color-coded lines superimposed to raster plots). The ensemble of spike trains was considered to be in a given state if the posterior probability of being in that state exceeded 80% in at least 50 consecutive 1-ms bins (Figure 2, color-coded shaded areas). Transitions among states were triggered by the co-modulation of a variable number of ensemble neurons and occurred at seemingly random times (Mazzucato et al., 2015). For this reason, the dimensionality of the neural activity was computed based on the firing rate vectors in each HMM state (one firing rate vector per state per trial; see Methods for details).

Figure 2. Ensemble neural activity is characterized by sequences of states. (A) Upper panels: Representative trials from one ensemble of nine simultaneously recorded neurons during ongoing activity, segmented according to their ensemble states (HMM analysis, thin black vertical lines are action potentials; states are color-coded; smooth colored lines represent the probability for each state; shaded colored areas indicate intervals where the probability of a state exceeds 80%). Lower panels: Average firing rates across simultaneously recorded neurons (states are color-coded as in the upper panels). In total, 6 hidden states were found in this example session (only 5 states shown). X-axis for population rasters: time preceding the next event at (0 = stimulus delivery); Y-axis for population rasters: left, ensemble neuron index, right, probability of HMM states; X-axis for average firing rates panels: firing rates (spks/s); Y-axis for firing rate panels: ensemble neuron index. (B) Ensemble rasters and firing rates during evoked activity for four different tastes delivered at t = 0: sucrose, sodium chloride, citric acid, and quinine (notations as in panel A). In total, eight hidden states were found in this session during evoked activity.

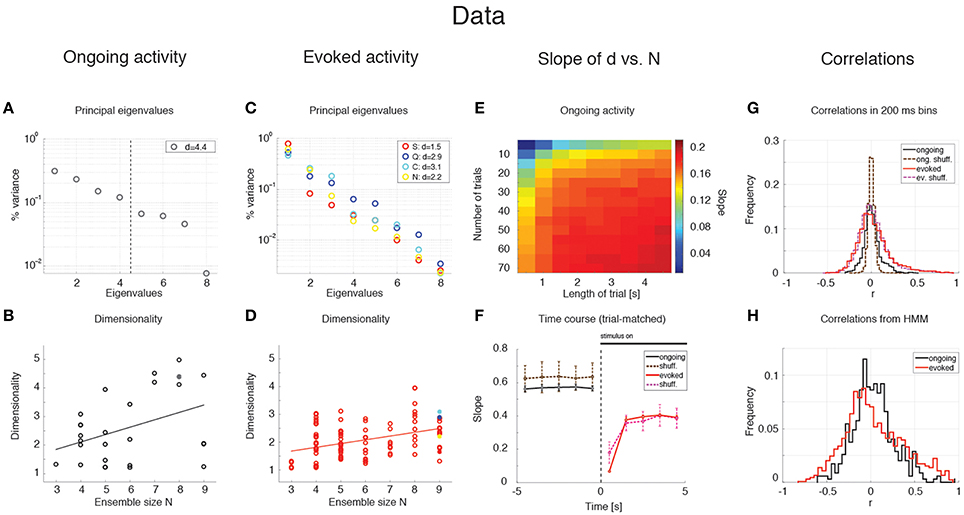

The average dimensionality of ongoing activity across sessions was dongoing = 2.6 ± 1.2 (mean ± SD; range: [1.2, 5.0]; 27 sessions). An example of the eigenvalues for a representative ensemble of eight neurons is shown in Figure 3A, where d = 4.42. The dimensionality of ongoing activity was approximately linearly related to ensemble size (Figure 3B, linear regression, r = 0.4, slope bongoing = 0.26 ± 0.12, p = 0.04). During evoked activity dimensionality did not differ across stimuli (one-way ANOVA, no significant difference across tastants, p > 0.8), hence all evoked data points were combined for further analysis. An example of the eigenvalue distribution of the ensemble in Figure 2B is shown in Figure 3C, where devoked = 1.3 ~ 1.7 across 4 different taste stimuli. Across all sessions, dimensionality was overall smaller (devoked = 2.0 ± 0.6, mean ± SD, range: [1.1, 3.9]) and had a reduced slope as a function of N compared to ongoing activity (Figure 3D, linear regression, r = 0.39, slope bevoked = 0.13 ± 0.03, p < 10−4). However, since dimensionality depends on the number and duration of the trials used for its estimation (Figure 3E), a proper comparison requires matching trial number and duration for each data point, as described next.

Figure 3. Dependence of dimensionality on ensemble size (data). (A) Fraction of variance explained by each principal eigenvalue for an ensemble of 8 neurons during ongoing activity (corresponding to the filled dot in panel B) in the empirical dataset. The dashed vertical line represents the value of the dimensionality for this ensemble (d = 4.4). X-axis, eigenvalue number; Y-axis, fraction of variance explained by each eigenvalue. (B) Dimensionality of neural activity across all ensembles in the empirical dataset during ongoing activity (circles, linear regression fit, d = b · N + a, b = 0.26 ± 0.12, a = 1.07 ± 0.74, r = 0.4), estimated from HMM firing rate fits on all ongoing trials in each session (varying from 73 to 129). X-axis: ensemble size; Y-axis: dimensionality. (C) Fraction of variance explained by each principal eigenvalue for the ensemble in (A) during evoked activity. Principal eigenvalues for sucrose (S, orange), sodium chloride (N, yellow), citric acid (C, cyan), and quinine (Q, blue) are presented (corresponding to the color-coded dots in panel D). X-axis, eigenvalue number; Y-axis, percentage of variance explained by each eigenvalue. (D) Dimensionality of neural activity across all ensembles in the empirical dataset during evoked activity (notations as in panel B, linear regression: d = b · N + a, b = 0.13 ± 0.03, a = 1.27 ± 0.19, r = 0.39), estimated from HMM firing rate fits on evoked trials in each condition (varying from 7 to 11 trials across sessions for each tastant). (E) The slope of the linear regression of dimensionality (d) vs. ensemble size (N) as a function of the length of the trial interval and the number of trials used to estimate the dimensionality. X-axis, length of trial interval [s]; Y-axis, number of trials. (F) Time course of the trial-matched slopes of d vs. N, evaluated with 200 ms bins in consecutive 1 s intervals during ongoing (black curve, t < 0) and evoked periods (red curve, t > 0; error bars represent SD). A significant time course is triggered by stimulus presentation (see Results for details). The slopes of the empirical dataset (thick curves) were smaller than the slope of the shuffled dataset (dashed curves) during ongoing activity. X-axis, time from stimulus onset at t = 0 [s]; Y-axis, slope of d vs. N. (G) Distribution of pair-wise correlations in simultaneously recorded ensembles (black and red histograms for ongoing and evoked activity, respectively) and shuffled ensembles (brown and pink dashed histograms for ongoing and evoked activity, respectively) from 200 ms bins. X-axis, correlation; Y-axis, frequency. (H) Distribution of pair-wise correlations from HMM states during ongoing (black) and evoked activity (red) for all simultaneously recorded pairs of neurons. X-axis, correlation; Y-axis, frequency.

Stimulus-Induced Reduction of Dimensionality

We matched the number and duration of the trials for each data point and ran a two-way ANOVA with condition (ongoing vs. evoked) and ensemble size as factors. Both the main dimensionality [F(1, 202) = 11.93, p < 0.001] and the slope were significantly smaller during evoked activity [test of interaction, ]. There was also a significant effect of ensemble size [], confirming the results obtained with the separate regression analyses. These results suggest that stimuli induce a reduction of the effective space visited by the firing rate vector during evoked activity. This was confirmed by a paired sample analysis of the individual dimensionalities across all 27 × 4=108 ensembles (27 ensemble times 4 gustatory stimuli; p < 0.002, Wilcoxon signed-rank test).

Dimensionality is Larger in Ensembles of Independent Neurons

The dimensionality depends on the pair-wise correlations of simultaneously recorded neurons. Shuffling neurons across ensembles would destroy the correlations (beyond those expected by chance), and would give a measure of how different the dimensionality of our datasets would be compared to sets of independent neurons. We measured the dimensionality of surrogate datasets obtained by shuffling neurons across sessions; because shuffling destroys the structure of the hidden states, firing rates in bins of fixed duration (200 ms) were used to estimate the dimensionality (see Methods for details). As expected, the slope of d vs. N was larger in the shuffled datasets compared to the simultaneously recorded ensembles (not shown) during both ongoing activity (bshuff = 0.67 ± 0.06 vs. bdata = 0.60 ± 0.01; mean ± SD, Mann-Whitney test, p < 0.001, 20 bootstraps), and evoked activity (bshuff = 0.36 ± 0.07 vs. bdata = 0.29 ± 0.01; p < 0.001). Especially during ongoing activity, this result was accompanied by a narrower distribution of pair-wise correlations in the shuffled datasets compared to the simultaneously recorded datasets (Figure 3G), and is consistent with an inverse relationship between dimensionality and pair-wise correlations (see Equation 9).

Time Course of Dimensionality as a Function of Ensemble Size

Unlike ongoing activity, the dependence of dimensionality on ensemble size (the slope of the linear regression of d vs. N) was modulated during different epochs of the post-stimulus period [Figure 3F, full lines; two-way ANOVA; main effect of time F(4, 495) = 3.80, p < 0.005; interaction time x condition: F(4, 495) = 4.76, p < 0.001]. In particular, the dependence of d on the ensemble size N almost disappeared immediately after stimulus presentation in the simultaneously recorded, but not in the shuffled ensembles (trial-matched slope in the first evoked second: bevoked = 0.07 ± 0.01 vs bshuff = 0.19 ± 0.07) and converged to a stable value after approximately 1 second (slope after the first second bevoked = 0.38 ± 0.01; compare with a stable average slope during ongoing activity of bongoing = 0.57 ± 0.01, Figure 3F).

Note that the dimensionality is larger when the firing rate is computed in bins (as in Figure 3F) rather than in HMM states (as in Figures 3B–D, where the slopes are about half than in Figure 3F). The reason is that firing rates and correlations are approximately constant during the same HMM state, whereas they may change when estimated in bins of fixed duration that include transitions among hidden states. These changes tend to dilute the correlations resulting in higher dimensionality as predicted e.g., by Equation (9). A comparison of the pair-wise correlations of binned firing rates (Figure 3G) vs. those of firing rates in HMM states (Figure 3H) confirmed this hypothesis. Also, if the argument above is correct, one would expect a dependence of dimensionality on (fixed) bin duration. We computed the correlations and dimensionality of binned firing rates for various bin durations and found that r increases and d decreases for increasing bin durations (not shown). However, the slope of d vs. N is always larger in ongoing than in evoked activity regardless of bin size (ranging from 10 ms to 5 s; not shown). This confirms the generality of the results of Figures 3B–D, which were obtained using firing rate vectors in hidden states.

To summarize our main results so far, we found that dimensionality depends on ensemble size during both ongoing and evoked activity, and such dependence is significantly reduced in the post-stimulus period. This suggests that while state sequences during ongoing activity explore a large portion of the available firing rate space, the presentation of a stimulus initially collapses the state sequence along a more stereotyped and lower-dimensional response (Katz et al., 2001; Jezzini et al., 2013). During both ongoing and evoked activity, the dimensionality is also different than expected by chance in a set of independent neurons (shuffled datasets).

Clustered Spiking Network Model of Dimensionality

To gain a mechanistic understanding of the different dimensionality of ongoing and evoked activity, we have analyzed a spiking network model with clustered connectivity which has been shown to capture many essential features of the data (Mazzucato et al., 2015). In particular, the model reproduces the transitions among latent states in both ongoing and evoked activity. The network (see Methods for details) comprises Q clusters of excitatory neurons characterized by stronger synaptic connections within each cluster and weaker connections between neurons in different clusters. All neurons receive recurrent input from a pool of inhibitory neurons that keeps the network in a balanced regime of excitation and inhibition in the absence of external stimulation (Figure 4A). In very large networks (technically, in networks with an infinite number of neurons), the stable configurations of the neural activity are characterized by a finite number of active clusters whose firing rates depend on the number clusters active at any given moment, as shown in Figure 4B (where Q = 30). In a finite network, however, finite size effects ignite transitions among these configurations, inducing network states (firing rate vectors) on randomly chosen subsets of neurons that resemble the HMM states found in the data (Figure 5; see Mazzucato et al., 2015 for details).

Figure 4. Recurrent network model. (A) Schematic recurrent network architecture. Triangles and squares represent excitatory and inhibitory LIF neurons respectively. Darker disks indicate excitatory clusters with potentiated intra-cluster synaptic weights. (B) Mean field solution of the recurrent network. Firing rates of the stable states for each subpopulation are shown as function of the intra-cluster synaptic potentiation parameter J+: firing rate activity in the active clusters (solid gray lines), firing rate in the inactive clusters (dashed gray lines), activity of the background excitatory population (dashed blue lines), activity of the inhibitory population (solid red lines). In each case, darker colors represent configurations with larger number of active clusters. Numbers denote how many clusters are active in each stable configuration. Configurations with 1–8 active clusters are stable in the limit of infinite network size. A global configuration where all clusters are inactive (brown line) becomes unstable at the value J+ = 5.15. The vertical green line represents the value of J+ = 5.3 chosen for the simulations. X-axis, intra-cluster potentiation parameter J+ in units of JEE; Y-axis, Firing rate (spks/s).

Figure 5. Ensemble activity in the recurrent network model is characterized by sequences of states. Representative trials from one ensemble of nine simultaneously recorded neurons sampled from the recurrent network, segmented according to their ensemble states (notations as in Figure 1). (A) ongoing activity. (B) Ensemble activity evoked by four different stimuli, modeled as an increase in the external current to stimulus-selective clusters (see Methods for details).

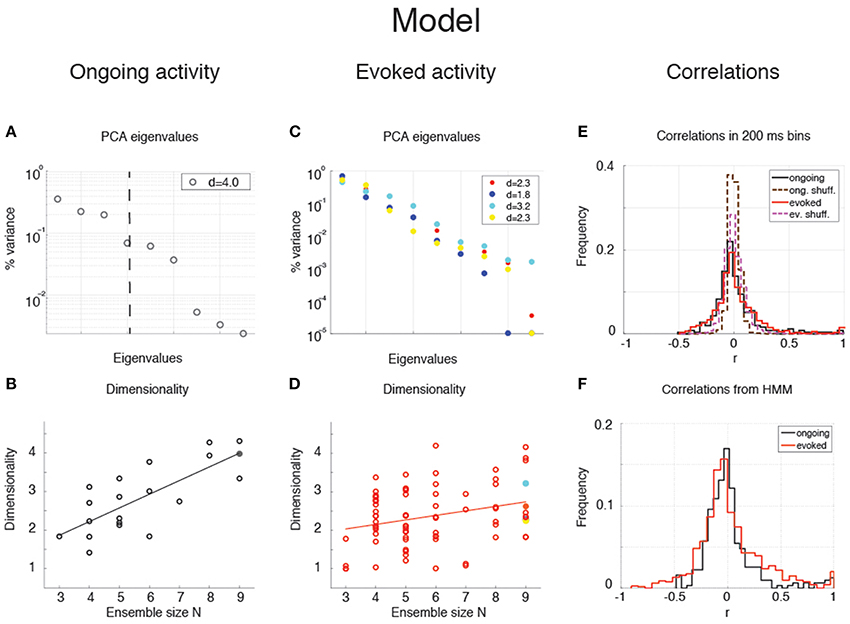

The dimensionality of the simulated sequences during ongoing and evoked activity was computed as done for the data, finding similar results. For the examples in Figure 5, we found dongoing = 4.0 for ongoing activity (Figure 6A) between devoked = 2.2 and devoked = 3.2 across tastes during evoked activity (Figure 6C). Across all simulated sessions, we found an average dongoing = 2.9 ± 0.9 (mean ± SD) for ongoing activity and devoked = 2.4 ± 0.7 for evoked activity. The model captured the essential properties of dimensionality observed in the data: the dimensionality did not differ across different tastes (one-way ANOVA, p > 0.2) and depended on ensemble size during both ongoing (Figure 6B; slope = 0.36 ± 0.07, r = 0.77, p < 10−4) and evoked periods (Figure 6D; slope = 0.12 ± 0.04, r = 0.29, p = 0.01). As for the data, the dependency on ensemble size was smaller for evoked compared to ongoing activity. We performed a trial-matched two-way ANOVA as done on the data and found, also in the model, a main effect of condition [ongoing vs. evoked: ], a main effect of ensemble size [], and a significant interaction [F(6, 146) = 3.8, p = 0.001]. These results were accompanied by patterns of correlations among the model neurons (Figures 6E,F) very similar to those found in the data (Figures 3G,H; see section “Dimensionality is larger in the presence of clusters” for statistics of correlation values). As in the data, narrower distributions of correlations were found for binned firing rates (Figure 6E) compared to firing rates in hidden states (Figure 6F; compare with Figures 3G,H, respectively). Moreover, shuffling neurons across datasets reduced the correlations (Figure 6E, dashed), resulting in a larger slope of d vs. N (not shown). Finally, d during ongoing activity was always larger than during evoked activity also when computed on binned firing rates (not shown), as found in the data (see section “Dependence of dimensionality on bin size”).

Figure 6. Dependence of dimensionality on ensemble size (model). (A) Fraction of variance explained by each principal eigenvalue for an ensemble of 9 neurons during ongoing activity (corresponding to the filled dot in panel B) in the model network of Figure 5 (notations as in Figure 3A). (B) Dimensionality of neural activity across all ensembles in the model during ongoing activity (linear regression fit, d = b · N + a, b = 0.36 ± 0.07, a = 0.80 ± 0.43, r = 0.77), estimated from HMM firing rate fits. X-axis, ensemble size; Y-axis, dimensionality. (C) Fraction of variance explained by each principal eigenvalue for the ensemble in panel A during evoked activity. Principal eigenvalues for four tastes are presented (corresponding to the color-coded dots in panel D). X-axis, eigenvalue number; Y-axis, percentage of variance explained by each eigenvalue. (D) Dimensionality of neural activity across all ensembles in the model during evoked activity (notations as in panel B, linear regression: d = b · N + a, b = 0.12 ± 0.04, a = 1.70 ± 0.26, r = 0.29). (E) Distribution of pair-wise correlations in simultaneously recorded ensembles from the clustered network model (black and red histograms for ongoing and evoked activity, respectively) and in shuffled ensembles (brown and pink dashed histograms for ongoing and evoked activity, respectively) from 200 ms bins. X-axis, correlation; Y-axis, frequency. (F) Distribution of pair-wise correlations from HMM states during ongoing (black) and evoked activity (red) for all simultaneously recorded pairs of neurons. X-axis, correlation; Y-axis, frequency.

Since the model was not fine-tuned to find these results, the different dimensionalities of ongoing and evoked activity, and their associated patterns of pair-wise correlations, are likely the consequence of the organization in clusters and of the ensuing dynamics during ongoing and evoked activity.

Scaling of Dimensionality with Ensemble Size and Pair-Wise Correlations

The dependence of dimensionality on ensemble size observed in the data (Figure 3B) and in the model (Figure 6B) raises the question of whether or not the dimensionality would converge to an upper bound as one increases the number of simultaneously recorded neurons. In general, this question is important in a number of settings, related e.g., to coding in motor cortex (Ganguli et al., 2008; Gao and Ganguli, 2015), performance in a discrimination task (Rigotti et al., 2013), or coding of visual stimuli (Cadieu et al., 2013). We can attack this question aided by the model of Figure 4, where we can study the effect of large numbers of neurons, but also the impact on dimensionality of a clustered network architecture compared to a homogeneous one, at parity of correlations and ensemble size.

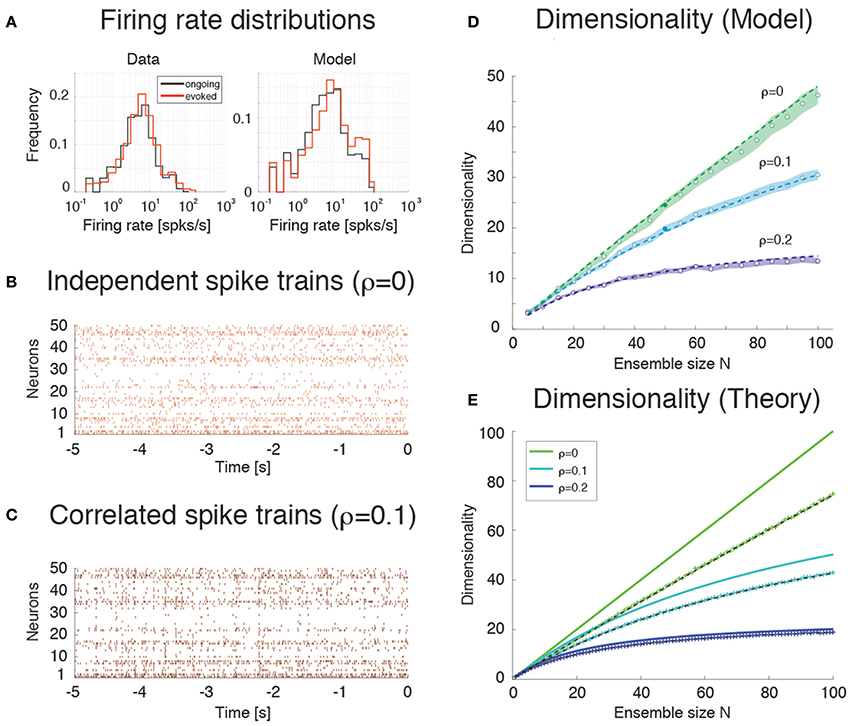

We consider first the case of a homogeneous network of neurons having no clusters and low pair-wise correlations, but having the same firing rates distributions (which were approximately log-normal, Figure 7A) and the same mean pair-wise correlations as found in the data (ρ~0.01 − 0.2). This would require solving a homogeneous recurrent network self-consistently for the desired firing rates and correlations. As a proxy for this scenario, we generated 20 sessions of 40 Poisson spike trains having exactly the desired properties (including the case of independent neurons for which ρ = 0). Two examples with ρ = 0 and ρ = 0.1, respectively, are shown in Figures 7B,C. Since in the asynchronous homogeneous network there are no transitions and hence no hidden states, the dimensionality was estimated based on the rate vectors in bins of 200 ms duration (using bin widths of 50–500 ms did not change the results; see Methods for details).

Figure 7. Dimensionality and correlation. (A) Empirical single neuron firing rate distributions in the data (left) and in the model (right), for ongoing (black), and evoked activity (red). The distributions are approximately lognormal. X-axis, Firing rate (spks/s); Y-axis, frequency. (B) Example of independent Poisson spike trains with firing rates matched to the firing rates obtained in simulations of the spiking network model. (C) Example of correlated Poisson spike trains with firing rates matched to the firing rates obtained in simulations of the spiking network model. Pair-wise correlations of ρ = 0.1 were used (see Methods). X-axis, time [s]; Y-axis, neuron index. (D) Dimensionality as a function of ensemble size N in an ensemble of Poisson spike trains with spike count correlations ρ = 0, 0.1, 0.2 and firing rates matched to the model simulations of Figure 6. Dashed lines represent the fit of Equation (16) to the data (with δρ2 = αρ2, σ4 = δσ4 = β), with best-fit parameters (mean ± s.e.m.) α = 0.22 ± 10−5, β = 340 ± 8. Filled circles (from top to bottom): dimensionality of the data (raster plots) shown in (B,C) (shaded areas represent SD). X-axis, ensemble size; Y-axis, dimensionality. (E) Theoretical prediction for the dependence of dimensionality on ensemble size N and firing rate correlation ρ for the case of uniform correlation, Equation (8) (thick lines; green to cyan to blue shades represent increasing correlations). “+” are dimensionality estimates from NT = 1, 000 trials for each N (same NT as in panel D, each trial providing a firing rate value sampled from a log-normal distribution), in the case of log-normally distributed firing rate variances with mean σ2 = 40 (spk∕s)∧2 and standard deviation 0.5 σ2. Theoretical predictions from Equation (16) match the estimated values in all cases (dashed black lines). X-axis, ensemble size N; Y-axis, dimensionality.

We found that the dimensionality grows linearly with ensemble size in the absence of correlations, but is a concave function of N in the presence of pair-wise correlations (circles in Figure 7D). Thus, as expected, the presence of correlations reduces the dimensionality and suggests the possibility of an upper bound. A simple theoretical calculation mimicking this scenario shows that d in this case converges indeed to an upper bound that depends on the inverse of the square of the pair-wise correlations. For example, in the case of uniform correlations (ρ) and equal variances of the spike counts, Equation (8) of Methods, , shows that d = N in the absence of correlations, but d < 1 ∕ ρ2 in the presence of correlations. These properties remain approximately true if the variances of the firing rates are drawn from a distribution with mean and variance . As Equation (9) shows, in such a case dimensionality is reduced compared to the case of equal variances, for example for large N when ρ = 0, δρ = 0.

The analytical results are shown in Figure 7E (full lines correspond to Equation 8), together with their estimates (“+”) based on 1000 data points (same number as trials in Figure 7D; see Methods). The estimates are based on surrogate datasets with lognormal-distributed variances to mimic the empirical distribution of variances found in GC (not shown).

Estimation Bias

Comparison of Figures 7D,E shows that the dimensionality of the homogeneous network is underestimated compared to the theoretical value given by Equation (8). This is due to a finite number of trials and the presence of unequal variances with spread δσ4 (“+” in Figure 7E). As Figure 7E shows, taking this into account will reduce the dimensionality to values comparable to those of the homogeneous network of Figure 7D. The dimensionality in that case is well predicted by Equation (16) (broken lines in Figure 7E). The same Equation (16) was fitted successfully to the data in Figure 7D (dashed) by tuning 2 parameters to account for the unknown variance and correlation width of the firing rates (see Methods for details).

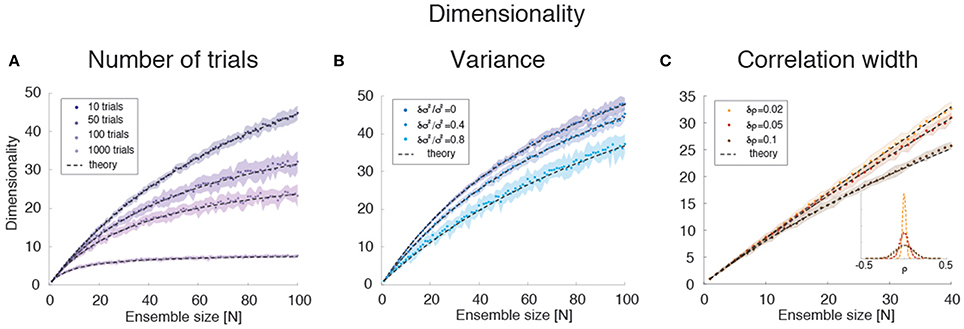

Empirically, estimates of the dimensionality Equation (2) based on a finite number NT of trials tend to underestimate d (Figure 3E). The approximate estimator Equation (16) confirms that, for any ensemble size N, d is a monotonically increasing function of the number of trials (Figure 8A). Note that this holds for the mean value of the estimator (Equation 16) over many datasets, not for single estimates, which could overestimate the true d (not shown). Equation (16) also provides an excellent description of dimensionality as a function of firing rates' variance δσ4 (Figure 8B) and pair-wise correlations width δρ2 (Figure 8C). In particular, the mean and the variance of the pair-wise correlations have an interchangeable effect on d (see Equation 16); they both decrease the dimensionality and so does the firing rate variance δσ4 (Figure 8B).

Figure 8. Dimensionality estimation. (A) Dependence of dimensionality on the number of trials for variable ensemble size N, for fixed correlations ρ = 0.1 and firing rates variances with mean σ2 and standard deviation δσ2 = 0.4 σ2. Dashed lines: theoretical prediction, Equation (16); dots: mean values from simulations of 20 surrogate datasets containing 10–1000 trials each (shaded areas: SD), with darker shades representing increasing number of trials. X-axis: ensemble size; Y-axis, dimensionality. (B) Dependence of dimensionality on the spread δσ2 of the firing rates variances for fixed correlations ρ = 0.1 and firing rate variance with mean σ2. Dashed lines: theoretical prediction, Equation (16); dots: mean values from simulations of 20 surrogate datasets containing 1000 trial each (shaded areas: SD), with lighter shades representing increasing values of δσ2 ∕ σ2). X-axis, ensemble size; Y-axis, dimensionality. (C) Dependence of dimensionality on the width of pair-wise firing rate correlations (with zero mean, ρ = 0), for firing rates variances with mean σ2 and standard deviation δσ2 = 0.4 σ2. Dashed lines: theoretical prediction, Equation (16); dots: mean values from simulations of 20 surrogate datasets containing 1000 trials each (shaded areas: SD), with darker shades representing increasing values of δρ. Inset: distribution of correlation coefficients used in the main figure. X-axis, ensemble size; Y-axis, dimensionality. In all panels, σ2 = 40 (spk∕s)∧2.

Scaling of Dimensionality in the Presence of Clusters

We next compared the dimensionality of the homogeneous network's activity to that predicted by the clustered network model of Figure 4. To allow comparison with the homogeneous network, dimensionality was computed based on the spike counts in 200 ms bins rather than the HMM's firing rate vectors as in Figure 6 (see Methods for details).

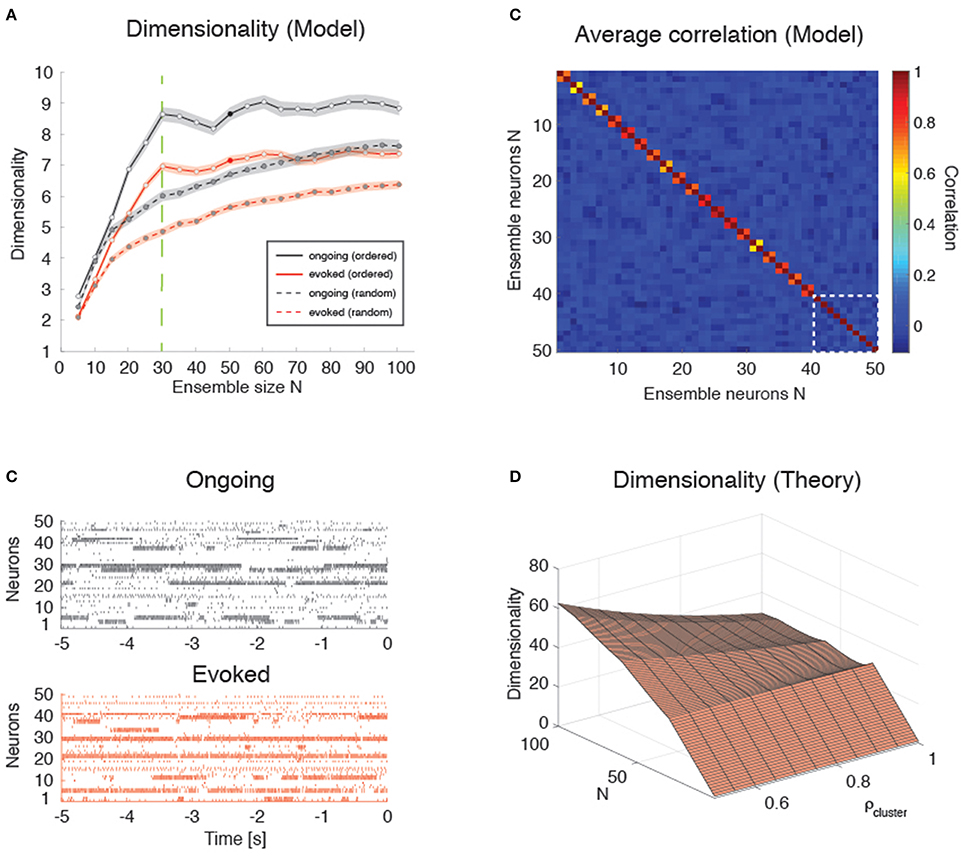

We found that the dependence of d on N in the clustered network depends on how the neurons are sampled. If the sampling is completely random, so that any neuron has the same probability of being added to the ensemble regardless of cluster membership, a concave dependence on N will appear, much like the case of the homogeneous network (Figure 9A, dashed lines). However, if neurons are selected one from each cluster until all clusters have been sampled once, then one neuron from each cluster until all clusters have been sampled twice, and so on, until all the neurons in the network have been sampled, then the dependence of d on N shows an abrupt transition when N = Q, i.e., when the number of sampled neurons reaches the number of clusters in the network (Figure 9A, full lines; see Figure 9B for raster plots with Q = 30 and N = 50). In the following, we refer to this sampling procedure as “ordered sampling,” as a reminder that neurons are selected randomly from each cluster, but the clusters are selected in serial order. For N ≤ Q, the dimensionality grows linearly with ensemble size in both ongoing (slope 0.24 ± 0.01, r = 0.79, p < 10−10, black line) and evoked periods (slope 0.19 ± 0.01, r = 0.84, p < 10−10; red line), and was larger during ongoing than evoked activity [trial-matched two-way ANOVA, main effect: ; interaction: F(5, 948) = 4.1, p < 0.001].

Figure 9. Dimensionality in a clustered network. (A) Trial-matched dimensionality as a function of ensemble size in the recurrent network model (ongoing and evoked activity in black and red, respectively, with shaded areas representing s.e.m.). Filled lines represent ordered sampling, where ensembles to the left of the green vertical line (N = Q = 30) contain at most one neuron per cluster, while to the right of the line they contain one or more neurons from all clusters (filled circles indicate representative trials in panel B). Dashed lines represent random sampling of neurons, regardless of cluster membership. X-axis, ensemble size; Y-axis, dimensionality. (B) Representative trial of an ensemble of 50 neurons sampled from the recurrent network in Figure 4 during ongoing activity (upper plot, in black) or evoked activity (lower plot, in red) for the case of “ordered sampling” (full lines in panel A). Neurons are sorted according to their cluster membership (adjacent neuron pairs with similar activity belong to the same cluster, for neurons #1 up to #40; the last 10 neurons are sampled from the remaining clusters). X-axis, time to stimulus presentation at t = 0 (s); Y-axis, neuron index. (C) Average correlation matrix for 20 ensembles of N = 50 neurons from the clustered network model with Q = 30 clusters. For the first 40 neurons, adjacent pairs belong to the same cluster; the last 10 neurons (delimited by a dashed white square) belong to the remaining clusters (neurons are ordered as in panel B). Thus, neurons 1, 3, 5, …, 39 (20 neurons) belong to the first 20 clusters; neurons 2, 4, 6, …, 40 (20 neurons) belong also the first 20 clusters; and neurons 41, 42, 43, …, 50 (10 neurons) belong to the remaining 10 clusters. X-axis, Y-axis: neuron index. (D) Plot of Equation (12) giving d vs. N and ρ (uniform within-cluster correlations) for the sampling procedure of panel (B). X-axis, ensemble size N; Y-axis, dimensionality.

These results are in keeping with the empirical and model results based on the HMM analysis (Figures 3, 6). However, in the case of ordered sampling, the dependence of dimensionality on ensemble size tends to disappear for N ≥ Q both during ongoing (slope 0.010 ± 0.003, r = 0.1, p < 0.001) and evoked periods (slope 0.009 ± 0.002, r = 0.13, p < 10−4; Figure 9A, full lines). The average dimensionality over the range 30 ≤ N ≤ 100 was significantly larger for ongoing, dongoing = 8.74 ± 0.06, than for evoked activity, devoked = 7.15 ± 0.04 [trial-matched two-way ANOVA, main effect: ], confirming that dimensionality during ongoing is larger than during evoked activity also in this case. The difference in dimensionality between ongoing and evoked activity also holds in the case of random sampling on the entire range of N values (Figure 9A, dashed lines), confirming the generality of this finding.

Dimensionality is Larger in the Presence of Clusters

Intuitively, the dimensionality saturates at N = Q in the clustered network because additional neurons will be highly correlated with already sampled ones. For N ≤ Q, each new neuron's activity adds an independent degree of freedom to the neural dynamics and thus increases its dimensionality. For Q > N, additional neurons are highly correlated with an existing neuron, adding little or no additional contribution to d. Indeed, compared to the low overall correlations found across all neuron pairs in the data (and used as desiderata for the homogeneous network), neurons belonging to the same model cluster had a much higher correlation of ρ = 0.92 [0.56, 0.96] (median and [25, 75]-percentile), while neurons belonging to different clusters had negligible correlation (ρ ≈ 0, [−0.10, 0.06]). A negligible median correlation was typical: for example, negligible was the overall median correlation regardless of cluster membership (ρ ≈ 0 [−0.109, 0.083]); and the empirical correlation both during ongoing ([−0.047, 0.051], with rare maximal values of ρ ~ 0.5), and evoked activity ([−0.085, 0.113], with rare maximal values of ρ ~ 0.9). While we note the qualitative agreement of model and empirical correlations, we emphasize that these numbers were obtained using 200 ms bins and that they were quite sensitive to bin duration. In particular, the maximal correlations (regardless of sign) were substantially reduced for smaller bin durations (not shown).

Plugging these values into a correlation matrix reflecting the clustered architecture and the “ordered” sampling procedure used in Figure 9B, we obtained the matrix shown in Figure 9C, where pairwise correlations depend on whether or not the neurons belong to the same cluster (for the first 40 neurons, adjacent pairs belong to the same cluster; the last 10 neurons belong to the remaining clusters). It is natural to interpret such correlation matrix as the noisy observation of a block-diagonal matrix such that neurons in the same cluster have uniform correlation while neurons from different clusters are uncorrelated. For such a correlation matrix the dimensionality can be evaluated exactly (see Equation 12 of Methods). In the approximation where all neurons have the same variance, this reduces to Equation (13), i.e.,

where N = mQ + p. This formula is plotted in Figure 9D for relevant values of ρ and N and it explains the origin of the abrupt transition in dimensionality at Q = N. (The reasons for a dimensionality lower than N for N ≤ Q in the data–see Figure 9A–are, also in this case, the finite number of data points (250) used for its estimation and the non-uniform distributions of firing rate variances and correlations).

Note that the formula also predicts cusps in dimensionality (which become local maxima for large ρ) whenever the ensemble size is an exact multiple of the number of clusters. This is also visible in the simulated data of Figure 9A, where local maxima seem to appear at N = 30, 60, 90 with Q = 30 clusters. It is also worth mentioning that, for low intra-cluster correlations, the dependence on N predicted by Equation (13) becomes smoother and the cusps harder to detect (not shown), suggesting that the behavior of a clustered network with weak clusters tends to converge to the behavior of a homogeneous asynchronous network—therefore lacking sequences of hidden states. Thus, the complexity of the network dynamics is reflected in how its dimensionality scales with N, assuming that one may sample one neuron per cluster (i.e., via “ordered sampling”).

Even though it is not clear how to perform ordered sampling empirically (see Discussion), this result is nevertheless useful since it represents an upper bound also in the case of random sampling (see Figure 9A, dashed lines). Equation (13) predicts that d ≤ Q∕ρ2, with this value reached asymptotically for large N. In the case of random sampling, growth to this bound is even slower (Figure 9A). For comparison, in a homogeneous network d ≤ 1∕ρ2 from Equation (8), a bound that is smaller by a factor of Q. Finally, homogeneous dimensionality is dominated by clustered dimensionality also in the more realistic case of non-uniform variances and correlations, where similar bounds are found in both cases (see Methods for details).